| Revision as of 03:56, 8 July 2018 editJarble (talk | contribs)Autopatrolled, Extended confirmed users149,694 editsm →See also: linking← Previous edit | Latest revision as of 05:19, 31 July 2024 edit undoGreenC bot (talk | contribs)Bots2,555,770 edits Move 1 url. Wayback Medic 2.5 per WP:URLREQ#ieee.org | ||

| (40 intermediate revisions by 19 users not shown) | |||

| Line 1: | Line 1: | ||

| {{short description|Determining the position and orientation of a robot by analyzing associated camera images}} | |||

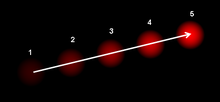

| ] vector of a moving object in a video sequence |

] vector of a moving object in a video sequence]] | ||

| In ] and ], '''visual odometry''' is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the ]s.<ref name=Maimone2007>{{cite journal | In ] and ], '''visual odometry''' is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the ]s.<ref name=Maimone2007>{{cite journal | ||

| Line 10: | Line 11: | ||

| | pages = 169–186 | | pages = 169–186 | ||

| | url = http://www-robotics.jpl.nasa.gov/publications/Mark_Maimone/rob-06-0081.R4.pdf | | url = http://www-robotics.jpl.nasa.gov/publications/Mark_Maimone/rob-06-0081.R4.pdf | ||

| | |

| access-date = 2008-07-10 | ||

| | doi = 10.1002/rob.20184 | | doi = 10.1002/rob.20184 | ||

| |citeseerx=10.1.1.104.3110 |s2cid=17544166 }}</ref> | |||

| ⚫ | }}</ref> | ||

| ==Overview== | ==Overview== | ||

| In ], ] is the use of data from the movement of actuators to estimate change in position over time through devices such as ]s to measure wheel rotations. While useful for many wheeled or tracked vehicles, traditional odometry techniques cannot be applied to ]s with non-standard locomotion methods, such as legged |

In ], ] is the use of data from the movement of actuators to estimate change in position over time through devices such as ]s to measure wheel rotations. While useful for many wheeled or tracked vehicles, traditional odometry techniques cannot be applied to ]s with non-standard locomotion methods, such as ]s. In addition, odometry universally suffers from precision problems, since wheels tend to slip and slide on the floor creating a non-uniform distance traveled as compared to the wheel rotations. The error is compounded when the vehicle operates on non-smooth surfaces. Odometry readings become increasingly unreliable as these errors accumulate and compound over time. | ||

| Visual odometry is the process of determining equivalent odometry information using sequential camera images to estimate the distance traveled. Visual odometry allows for enhanced navigational accuracy in robots or vehicles using any type of locomotion on any surface. | Visual odometry is the process of determining equivalent odometry information using sequential camera images to estimate the distance traveled. Visual odometry allows for enhanced navigational accuracy in robots or vehicles using any type of locomotion on any{{Citation needed|date=January 2021|reason=Water included? Maybe "solid"?}} surface. | ||

| ==Types |

==Types== | ||

| There are various types of VO. | There are various types of VO. | ||

| ===Monocular and |

===Monocular and stereo=== | ||

| Depending on the camera setup, VO can be categorized as Monocular VO (single camera), Stereo VO (two camera in stereo setup). | |||

| ] | ] | ||

| ===Feature |

===Feature-based and direct method=== | ||

| Traditional VO's visual information is obtained by |

Traditional VO's visual information is obtained by the feature-based method, which extracts the image feature points and tracks them in the image sequence. Recent developments in VO research provided an alternative, called the direct method, which uses pixel intensity in the image sequence directly as visual input. There are also hybrid methods. | ||

| ===Visual |

===Visual inertial odometry=== | ||

| If an ] (IMU) is used within the VO system, it is commonly referred to as Visual Inertial Odometry (VIO). | If an ] (IMU) is used within the VO system, it is commonly referred to as Visual Inertial Odometry (VIO). | ||

| Line 37: | Line 38: | ||

| # Acquire input images: using either ]s.,<ref name=ChhaniyaraS>{{cite conference | # Acquire input images: using either ]s.,<ref name=ChhaniyaraS>{{cite conference | ||

| | |

|author = Chhaniyara, Savan | ||

| |author2 = KASPAR ALTHOEFER | |||

| |author3 = LAKMAL D. SENEVIRATNE | |||

| | |

|year = 2008 | ||

| | |

|title = Visual Odometry Technique Using Circular Marker Identification For Motion Parameter Estimation | ||

| | |

|conference = The Eleventh International Conference on Climbing and Walking Robots and the Support Technologies for Mobile Machines | ||

| | |

|book-title = Advances in Mobile Robotics: Proceedings of the Eleventh International Conference on Climbing and Walking Robots and the Support Technologies for Mobile Machines, Coimbra, Portugal | ||

| | |

|volume = 11 | ||

| | |

|publisher = World Scientific, 2008 | ||

| | |

|url = http://eproceedings.worldscinet.com/9789812835772/9789812835772_0128.html | ||

| | |

|conference-url = https://books.google.com/books?id=8L7izBmmCuQC&q=savan+chhaniyara&pg=PA1069 | ||

| |access-date = 2010-01-22 | |||

| |archive-date = 2012-02-24 | |||

| |archive-url = https://web.archive.org/web/20120224015522/http://eproceedings.worldscinet.com/9789812835772/9789812835772_0128.html | |||

| |url-status = dead | |||

| }}</ref><ref name=Nister:2004p211 /> ]s,<ref name=Nister:2004p211>{{cite conference | }}</ref><ref name=Nister:2004p211 /> ]s,<ref name=Nister:2004p211>{{cite conference | ||

| |author1=Nister, D |author2=Naroditsky, O. |author3=Bergen, J | conference = Computer Vision and Pattern Recognition, 2004. CVPR 2004. | |author1=Nister, D |author2=Naroditsky, O. |author3=Bergen, J | conference = Computer Vision and Pattern Recognition, 2004. CVPR 2004. | ||

| | title = Visual Odometry | | title = Visual Odometry | ||

| | pages = I–652 |

| pages = I–652 – I–659 Vol.1 | ||

| | volume = 1 | | volume = 1 | ||

| |date=Jan 2004 | |date=Jan 2004 | ||

| | doi = 10.1109/CVPR.2004.1315094 | | doi = 10.1109/CVPR.2004.1315094 | ||

| | url = http://ieeexplore.ieee.org/search/srchabstract.jsp?arnumber=1315094&isnumber=29133&punumber=9183&k2dockey=1315094@ieeecnfs | |||

| }}</ref><ref name=Comport10 /> or ]s.<ref name=ScaramuzzaIEEE-TRO08>{{cite journal | }}</ref><ref name=Comport10 /> or ]s.<ref name=ScaramuzzaIEEE-TRO08>{{cite journal | ||

| | author = Scaramuzza, D. | | author = Scaramuzza, D. | ||

| |author2=Siegwart, R. | |author2=Siegwart, R. | ||

| |s2cid=13894940 | |||

| |date=October 2008 | |||

| | title = Appearance-Guided Monocular Omnidirectional Visual Odometry for Outdoor Ground Vehicles | | title = Appearance-Guided Monocular Omnidirectional Visual Odometry for Outdoor Ground Vehicles | ||

| | journal = IEEE Transactions on Robotics | | journal = IEEE Transactions on Robotics | ||

| ⚫ | |volume=24 | ||

| | pages = 1–12 | |||

| |issue=5 | |||

| | url = http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=4625958&isnumber=4359257 | |||

| | |

| pages = 1015–1026 | ||

| |doi=10.1109/TRO.2008.2004490 | |||

| ⚫ | }}</ref><ref name=Corke>{{cite conference | ||

| |hdl=20.500.11850/14362 | |||

| |hdl-access=free | |||

| ⚫ | }}</ref><ref name=Corke>{{cite conference | ||

| | author = Corke, P. |author2=Strelow, D. |author3=Singh, S. | | author = Corke, P. |author2=Strelow, D. |author3=Singh, S. | ||

| | title = Omnidirectional visual odometry for a planetary rover | | title = Omnidirectional visual odometry for a planetary rover | ||

| ⚫ | | book-title = Intelligent Robots and Systems, 2004.(IROS 2004). Proceedings. 2004 IEEE/RSJ International Conference on | ||

| | conference = | |||

| ⚫ | | |

||

| | volume = 4 | | volume = 4 | ||

| |doi=10.1109/IROS.2004.1390041 }}</ref> | |||

| ⚫ | | |

||

| | url = http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=1390041 | |||

| | conferenceurl = | |||

| }}</ref> | |||

| # Image correction: apply ] techniques for lens distortion removal, etc. | # Image correction: apply ] techniques for lens distortion removal, etc. | ||

| # ]: define interest operators, and match features across frames and construct ] field. | # ]: define interest operators, and match features across frames and construct ] field. | ||

| ## Use correlation to establish correspondence of two images, and no long term feature tracking. | |||

| ## ] and correlation. | ## ] and correlation. | ||

| ##* Use correlation, not long term ], to establish ] of two images. | |||

| ##Construct optical flow field (]). | ##Construct optical flow field (]). | ||

| # Check flow field vectors for potential tracking errors and remove outliers.<ref name=Campbell>{{cite conference | # Check flow field vectors for potential tracking errors and remove outliers.<ref name=Campbell>{{cite conference | ||

| | author = Campbell, J. |author2=Sukthankar, R. |author3=Nourbakhsh, I. |author4=Pittsburgh, I.R. | | author = Campbell, J. |author2=Sukthankar, R. |author3=Nourbakhsh, I. |author4=Pittsburgh, I.R. | ||

| | title = Techniques for evaluating optical flow for visual odometry in extreme terrain | | title = Techniques for evaluating optical flow for visual odometry in extreme terrain | ||

| ⚫ | | book-title = Intelligent Robots and Systems, 2004.(IROS 2004). Proceedings. 2004 IEEE/RSJ International Conference on | ||

| | conference = | |||

| ⚫ | | |

||

| | volume = 4 | | volume = 4 | ||

| |doi=10.1109/IROS.2004.1389991 }}</ref> | |||

| | publisher = | |||

| ⚫ | # Estimation of the camera motion from the optical flow.<ref name=Sunderhauf2005>{{cite book | ||

| | url = http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=1389991 | |||

| |author = Sunderhauf, N. | |||

| | conferenceurl = | |||

| |author2 = Konolige, K. | |||

| }}</ref> | |||

| |author3 = Lacroix, S. | |||

| ⚫ | # Estimation of the camera motion from the optical flow.<ref name=Sunderhauf2005>{{cite |

||

| | |

|author4 = Protzel, P. | ||

| | |

|year = 2005 | ||

| | |

|chapter = Visual odometry using sparse bundle adjustment on an autonomous outdoor vehicle | ||

| | |

|editor1 = Levi |editor2=Schanz |editor3=Lafrenz |editor4=Avrutin | ||

| |title = Tagungsband Autonome Mobile Systeme 2005 | |||

| ⚫ | | |

||

| |series = Reihe Informatik aktuell | |||

| ⚫ | | |

||

| ⚫ | |publisher = Springer Verlag | ||

| ⚫ | | |

||

| ⚫ | |pages = 157–163 | ||

| ⚫ | }}</ref><ref name=Konolige2006>{{cite |

||

| ⚫ | |url = http://www.tu-chemnitz.de/etit/proaut/index.download.df493a7bc2c27263f7d8ff467ea84879.pdf | ||

| ⚫ | |access-date = 2008-07-10 | ||

| |archive-url = https://web.archive.org/web/20090211031719/http://www.tu-chemnitz.de/etit/proaut/index.download.df493a7bc2c27263f7d8ff467ea84879.pdf | |||

| |archive-date = 2009-02-11 | |||

| |url-status = dead | |||

| ⚫ | }}</ref><ref name=Konolige2006>{{cite book | ||

| | author = Konolige, K. | | author = Konolige, K. | ||

| |author2=Agrawal, M. |author3=Bolles, R.C. |author4=Cowan, C. |author5=Fischler, M. |author6= Gerkey, B.P. | |author2=Agrawal, M. |author3=Bolles, R.C. |author4=Cowan, C. |author5=Fischler, M. |author6= Gerkey, B.P. | ||

| |title=Experimental Robotics |chapter=Outdoor Mapping and Navigation Using Stereo Vision |volume=39 |pages=179–190 |doi=10.1007/978-3-540-77457-0_17 |series=Springer Tracts in Advanced Robotics |date=2008 |isbn=978-3-540-77456-3 }}</ref><ref name=Olson2002>{{cite journal | |||

| | year = 2006 | |||

| | title = Outdoor mapping and navigation using stereo vision | |||

| | journal = Proc. of the Intl. Symp. on Experimental Robotics (ISER) | |||

| | url = http://www.springerlink.com/index/g442h0p7n313w1g2.pdf | |||

| | accessdate = 2008-07-10 | |||

| ⚫ | }}</ref><ref name= |

||

| | author = Olson, C.F. |author2=Matthies, L. |author3=Schoppers, M. |author4=Maimone, M.W. | | author = Olson, C.F. |author2=Matthies, L. |author3=Schoppers, M. |author4=Maimone, M.W. | ||

| | year = 2002 | | year = 2002 | ||

| Line 110: | Line 116: | ||

| | journal = Robotics and Autonomous Systems | | journal = Robotics and Autonomous Systems | ||

| | volume = 43 | | volume = 43 | ||

| | pages = 215–229 | |issue=4 | pages = 215–229 | ||

| | url = http://faculty.washington.edu/cfolson/papers/pdf/ras03.pdf | | url = http://faculty.washington.edu/cfolson/papers/pdf/ras03.pdf | ||

| | |

| access-date = 2010-06-06 | ||

| | doi=10.1016/s0921-8890(03)00004-6}}</ref><ref name=Cheng2006>{{cite journal | | doi=10.1016/s0921-8890(03)00004-6}}</ref><ref name=Cheng2006>{{cite journal | ||

| | author = Cheng, Y. |author2=Maimone, M.W. |author3=Matthies, L. | | author = Cheng, Y. |author2=Maimone, M.W. |author3=Matthies, L. | ||

| | year = 2006 | |s2cid=15149330 | year = 2006 | ||

| | title = Visual Odometry on the Mars Exploration Rovers | | title = Visual Odometry on the Mars Exploration Rovers | ||

| | journal = IEEE Robotics and Automation Magazine | | journal = IEEE Robotics and Automation Magazine | ||

| Line 121: | Line 127: | ||

| | issue = 2 | | issue = 2 | ||

| | pages = 54–62 | | pages = 54–62 | ||

| | url = http://ieeexplore.ieee.org/iel5/100/31467/101109RA2006CHENG.pdf?arnumber=101109RA2006CHENG | |||

| | accessdate = 2008-07-10 | |||

| | doi = 10.1109/MRA.2006.1638016 | | doi = 10.1109/MRA.2006.1638016 | ||

| }}</ref> | |citeseerx=10.1.1.297.4693 }}</ref> | ||

| ## Choice 1: ] for state estimate distribution maintenance. | ## Choice 1: ] for state estimate distribution maintenance. | ||

| ## Choice 2: find the geometric and 3D properties of the features that minimize a ] based on the re-projection error between two adjacent images. This can be done by mathematical minimization or ]. | ## Choice 2: find the geometric and 3D properties of the features that minimize a ] based on the re-projection error between two adjacent images. This can be done by mathematical minimization or ]. | ||

| Line 131: | Line 135: | ||

| An alternative to feature-based methods is the "direct" or appearance-based visual odometry technique which minimizes an error directly in sensor space and subsequently avoids feature matching and extraction.<ref name=Comport10>{{cite journal | An alternative to feature-based methods is the "direct" or appearance-based visual odometry technique which minimizes an error directly in sensor space and subsequently avoids feature matching and extraction.<ref name=Comport10>{{cite journal | ||

| | author = Comport, A.I. |author2=Malis, E. |author3=Rives, P. | | author = Comport, A.I. |author2=Malis, E. |author3=Rives, P. | ||

| | title = Real-time Quadrifocal Visual Odometry | |s2cid=15139693 | title = Real-time Quadrifocal Visual Odometry | ||

| | journal= International Journal of Robotics Research |

| journal= International Journal of Robotics Research | ||

| | number = 2–3 | |||

| | number = 2-3 | |||

| | pages = 245–266 | | pages = 245–266 | ||

| | volume = 29 | | volume = 29 | ||

| | year = 2010 | | year = 2010 | ||

| | url = http://ijr.sagepub.com/content/29/2-3/245.abstract | |||

| | doi = 10.1177/0278364909356601 | | doi = 10.1177/0278364909356601 | ||

| | editor = F. Chaumette |editor2=P. Corke |editor3=P. Newman | | editor = F. Chaumette |editor2=P. Corke |editor3=P. Newman | ||

| }}</ref><ref>{{Cite |

|citeseerx=10.1.1.720.3113 }}</ref><ref>{{Cite conference| last1 = Engel | first1 = Jakob | last2 = Schöps | first2 = Thomas | last3 = Cremers | first3 = Daniel |title= LSD-SLAM: Large-Scale Direct Monocular SLAM |book-title=Computer Vision | year = 2014 |editor1=Fleet D. |editor2=Pajdla T. |editor3=Schiele B. |editor4=Tuytelaars T. | conference= European Conference on Computer Vision 2014 | url = https://vision.in.tum.de/_media/spezial/bib/engel14eccv.pdf |doi=10.1007/978-3-319-10605-2_54 |volume=8690 |series=Lecture Notes in Computer Science}}</ref><ref>{{Cite conference | last1 = Engel | first1 = Jakob | last2 = Sturm | first2 = Jürgen | last3 = Cremers | first3 = Daniel | title= Semi-Dense Visual Odometry for a Monocular Camera |date=2013 | book-title = IEEE International Conference on Computer Vision (ICCV) | url = https://vision.in.tum.de/_media/spezial/bib/engel2013iccv.pdf |doi=10.1109/ICCV.2013.183 |citeseerx=10.1.1.402.6918}}</ref> | ||

| Another method, coined 'visiodometry' estimates the planar roto-translations between images using ] instead of extracting features.<ref name=ZamanICRA>{{cite conference | Another method, coined 'visiodometry' estimates the planar roto-translations between images using ] instead of extracting features.<ref name=ZamanICRA>{{cite conference | ||

| Line 146: | Line 149: | ||

| | year = 2007 | | year = 2007 | ||

| | title = High Precision Relative Localization Using a Single Camera | | title = High Precision Relative Localization Using a Single Camera | ||

| ⚫ | | book-title = Robotics and Automation, 2007.(ICRA 2007). Proceedings. 2007 IEEE International Conference on | ||

| | conference = | |||

| | doi = 10.1109/ROBOT.2007.364078 | |||

| ⚫ | | |

||

| ⚫ | }}</ref><ref name=ZamanJRAS>{{cite journal | ||

| ⚫ | | |

||

| | publisher = | |||

| | url = http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=4209696&userType=inst | |||

| | conferenceurl = | |||

| }}</ref><ref name=ZamanJRAS>{{cite journal | |||

| | author = Zaman, M. | | author = Zaman, M. | ||

| | year = 2007 | | year = 2007 | ||

| | title = High resolution relative localisation using two cameras | | title = High resolution relative localisation using two cameras | ||

| | journal = Journal of Robotics and Autonomous Systems |

| journal = Journal of Robotics and Autonomous Systems | ||

| | |

| volume = 55 | ||

| ⚫ | | issue = 9 | ||

| | pages = 685–692 | | pages = 685–692 | ||

| | doi = 10.1016/j.robot.2007.05.008 | |||

| ⚫ | | |

||

| ⚫ | }}</ref> | ||

| | accessdate = | |||

| }}</ref> | |||

| ==Egomotion== | ==Egomotion== | ||

| ]]] | |||

| '''Egomotion''' is defined as the 3D motion of a camera within an environment.<ref name="irani">{{cite journal | '''Egomotion''' is defined as the 3D motion of a camera within an environment.<ref name="irani">{{cite journal | ||

| | author = Irani, M. |author2=Rousso, B. |author3=Peleg S. | | author = Irani, M. |author2=Rousso, B. |author3=Peleg S. | ||

| Line 171: | Line 171: | ||

| | pages = 21–23 | | pages = 21–23 | ||

| |date=June 1994 | |date=June 1994 | ||

| | |

| access-date = 7 June 2010 | ||

| }}</ref> In the field of ], egomotion refers to estimating a camera's motion relative to a rigid scene.<ref>{{cite journal | }}</ref> In the field of ], egomotion refers to estimating a camera's motion relative to a rigid scene.<ref>{{cite journal | ||

| | author = Burger, W. | | author = Burger, W. | ||

| |author2=Bhanu, B. | |author2=Bhanu, B. | ||

| |s2cid=206418830 | |||

| | title = Estimating 3D egomotion from perspective image sequence | | title = Estimating 3D egomotion from perspective image sequence | ||

| | url = http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=61704 | |||

| | journal = IEEE Transactions on Pattern Analysis and Machine Intelligence | | journal = IEEE Transactions on Pattern Analysis and Machine Intelligence | ||

| | volume = 12 | | volume = 12 | ||

| Line 182: | Line 182: | ||

| | pages = 1040–1058 | | pages = 1040–1058 | ||

| |date=Nov 1990 | |date=Nov 1990 | ||

| | accessdate = 7 June 2010 | |||

| | doi=10.1109/34.61704 | | doi=10.1109/34.61704 | ||

| }}</ref> An example of egomotion estimation would be estimating a car's moving position relative to lines on the road or street signs being observed from the car itself. The estimation of egomotion is important in ] applications.<ref>{{cite journal | }}</ref> An example of egomotion estimation would be estimating a car's moving position relative to lines on the road or street signs being observed from the car itself. The estimation of egomotion is important in ] applications.<ref>{{cite journal | ||

| | author = Shakernia, O. |author2=Vidal, R. |author3=Shankar, S. | | author = Shakernia, O. |author2=Vidal, R. |author3=Shankar, S. | ||

| | title = Omnidirectional Egomotion Estimation From Back-projection Flow | |s2cid=5494756 | title = Omnidirectional Egomotion Estimation From Back-projection Flow | ||

| | url = http://cis.jhu.edu/~rvidal/publications/OMNIVIS03-backflow.pdf | | url = http://cis.jhu.edu/~rvidal/publications/OMNIVIS03-backflow.pdf | ||

| | journal = Conference on Computer Vision and Pattern Recognition Workshop | | journal = Conference on Computer Vision and Pattern Recognition Workshop | ||

| Line 192: | Line 191: | ||

| | pages = 82 | | pages = 82 | ||

| | year = 2003 | | year = 2003 | ||

| | |

| access-date = 7 June 2010 | ||

| |doi=10.1109/CVPRW.2003.10074 |citeseerx=10.1.1.5.8127 }}</ref> | |||

| }}</ref> | |||

| ===Overview=== | ===Overview=== | ||

| The goal of estimating the egomotion of a camera is to determine the 3D motion of that camera within the environment using a sequence of images taken by the camera.<ref>{{cite journal|author=Tian, T. |author2=Tomasi, C. |author3=Heeger, D. |title=Comparison of Approaches to Egomotion Computation |url=http://www.cs.duke.edu/~tomasi/papers/tian/tianCvpr96.pdf |journal=IEEE Computer Society Conference on Computer Vision and Pattern Recognition |pages=315 |year=1996 | |

The goal of estimating the egomotion of a camera is to determine the 3D motion of that camera within the environment using a sequence of images taken by the camera.<ref>{{cite journal|author=Tian, T. |author2=Tomasi, C. |author3=Heeger, D. |title=Comparison of Approaches to Egomotion Computation |url=http://www.cs.duke.edu/~tomasi/papers/tian/tianCvpr96.pdf |journal=IEEE Computer Society Conference on Computer Vision and Pattern Recognition |pages=315 |year=1996 |access-date=7 June 2010 |url-status=dead |archive-url=https://web.archive.org/web/20080808123021/http://www.cs.duke.edu/%7Etomasi/papers/tian/tianCvpr96.pdf |archive-date=August 8, 2008 }}</ref> The process of estimating a camera's motion within an environment involves the use of visual odometry techniques on a sequence of images captured by the moving camera.<ref name="milella" /> This is typically done using ] to construct an ] from two image frames in a sequence<ref name="irani" /> generated from either single cameras or stereo cameras.<ref name="milella">{{cite journal | ||

| | author = Milella, A. | | author = Milella, A. | ||

| |author2=Siegwart, R. | | author2 = Siegwart, R. | ||

| | title = Stereo-Based Ego-Motion Estimation Using Pixel Tracking and Iterative Closest Point | |||

| | url = http://asl.epfl.ch/aslInternalWeb/ASL/publications/uploadedFiles/21_amilella_EgoMotion_rev_publication.pdf | | url = http://asl.epfl.ch/aslInternalWeb/ASL/publications/uploadedFiles/21_amilella_EgoMotion_rev_publication.pdf | ||

| | journal = IEEE International Conference on Computer Vision Systems | | journal = IEEE International Conference on Computer Vision Systems | ||

| | pages = 21 | | pages = 21 | ||

| |date=January 2006 | | date = January 2006 | ||

| | |

| access-date = 7 June 2010 | ||

| | archive-url = https://web.archive.org/web/20100917151342/http://asl.epfl.ch/aslInternalWeb/ASL/publications/uploadedFiles/21_amilella_EgoMotion_rev_publication.pdf | |||

| | archive-date = 17 September 2010 | |||

| | url-status = dead | |||

| }}</ref> Using stereo image pairs for each frame helps reduce error and provides additional depth and scale information.<ref name="olson">{{cite journal | }}</ref> Using stereo image pairs for each frame helps reduce error and provides additional depth and scale information.<ref name="olson">{{cite journal | ||

| | author = Olson, C. F. | | author = Olson, C. F. | ||

| Line 215: | Line 217: | ||

| | pages = 215–229 | | pages = 215–229 | ||

| | url = http://faculty.washington.edu/cfolson/papers/pdf/ras03.pdf | | url = http://faculty.washington.edu/cfolson/papers/pdf/ras03.pdf | ||

| | |

| access-date = 7 June 2010 | ||

| | doi=10.1016/s0921-8890(03)00004-6 | | doi=10.1016/s0921-8890(03)00004-6 | ||

| }}</ref><ref>Sudin Dinesh, Koteswara Rao, K. |

}}</ref><ref>Sudin Dinesh, Koteswara Rao, K.; Unnikrishnan, M.; Brinda, V.; Lalithambika, V.R.; Dhekane, M.V. "". IEEE International Conference on Emerging Trends in Communication, Control, Signal Processing & Computing Applications (C2SPCA), 2013</ref> | ||

| Features are detected in the first frame, and then matched in the second frame. This information is then used to make the optical flow field for the detected features in those two images. The optical flow field illustrates how features diverge from a single point, the ''focus of expansion''. The focus of expansion can be detected from the optical flow field, indicating the direction of the motion of the camera, and thus providing an estimate of the camera motion. | Features are detected in the first frame, and then matched in the second frame. This information is then used to make the optical flow field for the detected features in those two images. The optical flow field illustrates how features diverge from a single point, the ''focus of expansion''. The focus of expansion can be detected from the optical flow field, indicating the direction of the motion of the camera, and thus providing an estimate of the camera motion. | ||

| Line 231: | Line 233: | ||

| ==References== | ==References== | ||

| {{Reflist|30em}} | {{Reflist|30em}} | ||

| {{Computer vision footer}} | |||

| ] | ] | ||

Latest revision as of 05:19, 31 July 2024

Determining the position and orientation of a robot by analyzing associated camera images

In robotics and computer vision, visual odometry is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the Mars Exploration Rovers.

Overview

In navigation, odometry is the use of data from the movement of actuators to estimate change in position over time, for example by using rotary encoders to measure wheel rotations. While useful for many wheeled or tracked vehicles, traditional odometry techniques cannot be applied to mobile robots with non-standard locomotion methods, such as legged robots. In addition, wheel odometry suffers from precision problems, since wheels tend to slip and slide on the floor, so the distance actually traveled no longer matches the distance implied by the wheel rotations. The error is larger when the vehicle operates on non-smooth surfaces. Odometry readings become increasingly unreliable as these errors accumulate over time.
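The accumulation of wheel-odometry error can be illustrated with a minimal sketch. It assumes a differential-drive robot (two independently driven wheels separated by `wheel_base`), which is only one of many possible vehicle models; the function names and the numbers are hypothetical and chosen for illustration.

```python
import math

def integrate_wheel_odometry(pose, d_left, d_right, wheel_base):
    """Advance a planar pose (x, y, heading) from left/right wheel travel distances."""
    x, y, theta = pose
    d_center = (d_left + d_right) / 2.0        # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading, in radians
    # Midpoint approximation: move along the average heading of this step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    return (x, y, theta + d_theta)

def drive(steps, d_left, d_right, wheel_base=0.5):
    """Integrate the same encoder readings for a number of steps (hypothetical helper)."""
    pose = (0.0, 0.0, 0.0)
    for _ in range(steps):
        pose = integrate_wheel_odometry(pose, d_left, d_right, wheel_base)
    return pose

# The robot actually drives straight: both wheels travel 0.10 m per step.
true_pose = drive(100, 0.10, 0.10)
# Assumed slip: the right encoder over-reports by 1% on every step.
slipped_pose = drive(100, 0.10, 0.101)

print("true pose:     ", true_pose)
print("estimated pose:", slipped_pose)
```

Under these assumptions a 1% encoder error on one wheel grows into a heading error of about 0.2 rad and a lateral position error of about one metre after 10 m of travel, because every step is integrated on top of the already-biased heading.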

Visual odometry is the process of determining equivalent odometry information using sequential camera images to estimate the distance traveled. Visual odometry allows for enhanced navigational accuracy in robots or vehicles using any type of locomotion on any surface.

Types

Visual odometry (VO) can be classified in several ways.

Monocular and stereo

Depending on the camera setup, VO can be categorized as monocular VO (a single camera) or stereo VO (two cameras in a stereo setup).
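One practical difference between the two setups is that a calibrated stereo pair yields metric depth, whereas a single camera recovers translation only up to an unknown scale. The following sketch shows the standard depth-from-disparity relation Z = f·B/d for a rectified stereo pair; the function name and the example values are assumptions made for illustration.

```python
def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair.

    focal_length_px: focal length in pixels
    baseline_m:      distance between the two camera centres in metres
    disparity_px:    horizontal pixel offset of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera: 700 px focal length, 12 cm baseline.
depth = stereo_depth(700.0, 0.12, 8.4)
print(f"depth: {depth:.2f} m")
```

Because depth is inversely proportional to disparity, distant points (small disparity) are measured much less precisely than nearby ones.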

Feature-based and direct method

Traditional VO obtains its visual information with the feature-based method, which extracts image feature points and tracks them through the image sequence. More recent VO research has produced an alternative, called the direct method, which uses the pixel intensities in the image sequence directly as visual input. There are also hybrid methods.

Visual inertial odometry

If an inertial measurement unit (IMU) is used within the VO system, it is commonly referred to as Visual Inertial Odometry (VIO).

Algorithm

Most existing approaches to visual odometry are based on the following stages.

- Acquire input images: using single cameras, stereo cameras, or omnidirectional cameras.

- Image correction: apply image processing techniques for lens distortion removal, etc.

- Feature detection: define interest operators, match features across frames, and construct the optical flow field.

  - Feature extraction and correlation.

    - Use correlation, not long-term feature tracking, to establish correspondence between two images.

  - Construct the optical flow field (Lucas–Kanade method).

- Check flow field vectors for potential tracking errors and remove outliers.

- Estimate the camera motion from the optical flow.

  - Choice 1: Kalman filter for state estimate distribution maintenance.

  - Choice 2: find the geometric and 3D properties of the features that minimize a cost function based on the re-projection error between two adjacent images. This can be done by mathematical minimization or random sampling.

- Periodic repopulation of trackpoints to maintain coverage across the image.
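The outlier-removal and motion-estimation stages can be sketched in a strongly simplified form. The example below assumes that feature matches are already available as 2D point pairs and that the motion between frames is a planar rotation plus translation; real systems estimate full 3D motion and minimize a re-projection error instead. It uses random sampling (RANSAC-style) to reject bad matches and a closed-form least-squares fit on the remaining inliers. All function names, thresholds and data are hypothetical.

```python
import math
import random

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy   # centred source point
        bx, by = qx - dx, qy - dy   # centred destination point
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def residual(model, p, q):
    """Distance between the transformed source point and its matched point."""
    theta, tx, ty = model
    c, s = math.cos(theta), math.sin(theta)
    return math.hypot(c * p[0] - s * p[1] + tx - q[0],
                      s * p[0] + c * p[1] + ty - q[1])

def estimate_motion_ransac(src, dst, threshold=1.0, iterations=100, seed=0):
    """Fit on random 2-point samples, keep the largest inlier set, then refit on it."""
    rng = random.Random(seed)
    indices = range(len(src))
    best_inliers = []
    for _ in range(iterations):
        i, j = rng.sample(indices, 2)
        model = fit_rigid_2d([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in indices if residual(model, src[k], dst[k]) < threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("no consistent motion found")
    model = fit_rigid_2d([src[k] for k in best_inliers],
                         [dst[k] for k in best_inliers])
    return model, best_inliers

# Synthetic matches: rotate by 0.1 rad, translate by (5, -2), then corrupt two of them.
points = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 3), (2, 8), (7, 6), (3, 1)]
c, s = math.cos(0.1), math.sin(0.1)
matched = [(c * x - s * y + 5.0, s * x + c * y - 2.0) for x, y in points]
matched[4] = (40.0, -30.0)   # simulated tracking error
matched[6] = (-25.0, 18.0)   # simulated tracking error

model, inliers = estimate_motion_ransac(points, matched)
print("theta, tx, ty:", model)
print("inlier indices:", inliers)
```

Refitting on all inliers after the sampling loop is a common design choice: the two-point samples only serve to find a consistent set of matches, while the final estimate uses every match in that set.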

An alternative to feature-based methods is the "direct" or appearance-based visual odometry technique, which minimizes an error directly in sensor space and therefore avoids feature extraction and matching.
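The idea of minimizing an error directly on image intensities can be illustrated on a toy problem. The sketch below assumes one-dimensional "images" and a motion that is a pure integer pixel shift, and it replaces the gradient-based optimization typically used in practice with an exhaustive search; names and data are hypothetical.

```python
def photometric_error(reference, current, shift):
    """Mean squared intensity difference between reference[i] and current[i + shift]."""
    total, count = 0.0, 0
    for i, value in enumerate(reference):
        j = i + shift
        if 0 <= j < len(current):      # only compare pixels visible in both images
            total += (value - current[j]) ** 2
            count += 1
    return total / count if count else float("inf")

def estimate_shift_direct(reference, current, max_shift):
    """Return the shift in [-max_shift, max_shift] with the lowest photometric error."""
    return min(range(-max_shift, max_shift + 1),
               key=lambda s: photometric_error(reference, current, s))

reference = [10, 12, 15, 40, 90, 60, 30, 14, 11, 10, 10, 10]
current = [10, 10, 10] + reference[:-3]   # same scene moved right by 3 pixels

estimated = estimate_shift_direct(reference, current, max_shift=5)
print("estimated shift:", estimated)
```

No feature is detected or matched at any point: every overlapping pixel contributes to the error that selects the motion.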

Another method, termed "visiodometry", estimates the planar roto-translations between images using phase correlation instead of extracting features.
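Phase correlation itself can be sketched on a one-dimensional signal. The example assumes a purely circular translation and uses a naive discrete Fourier transform so that it needs only the standard library; it does not handle rotation and is not the implementation used in the cited work. All names are hypothetical.

```python
import cmath

def dft(signal):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def inverse_dft(spectrum):
    """Naive O(n^2) inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def phase_correlation_shift(reference, shifted):
    """Signed circular shift that maps `reference` onto `shifted`."""
    cross_power = []
    for a, b in zip(dft(reference), dft(shifted)):
        product = b * a.conjugate()
        magnitude = abs(product)
        # Keep only the phase; drop bins that carry no energy.
        cross_power.append(product / magnitude if magnitude > 1e-12 else 0j)
    correlation = [value.real for value in inverse_dft(cross_power)]
    n = len(correlation)
    peak = max(range(n), key=correlation.__getitem__)
    return peak if peak <= n // 2 else peak - n   # wrap large indices to negative shifts

reference = [0, 1, 4, 9, 3, 0, 2, 7, 1, 0, 5, 2, 0, 0, 6, 1]
shifted_right = reference[-3:] + reference[:-3]   # circular shift right by 3 samples
shifted_left = reference[3:] + reference[:3]      # circular shift left by 3 samples

print(phase_correlation_shift(reference, shifted_right))
print(phase_correlation_shift(reference, shifted_left))
```

Normalizing the cross-power spectrum to unit magnitude discards intensity information and keeps only the phase difference, which is why the inverse transform shows a sharp peak at the displacement.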

Egomotion

Egomotion is defined as the 3D motion of a camera within an environment. In the field of computer vision, egomotion refers to estimating a camera's motion relative to a rigid scene. An example of egomotion estimation would be estimating a car's moving position relative to lines on the road or street signs being observed from the car itself. The estimation of egomotion is important in autonomous robot navigation applications.

Overview

The goal of estimating the egomotion of a camera is to determine the 3D motion of that camera within the environment using a sequence of images taken by the camera. The process of estimating a camera's motion within an environment involves the use of visual odometry techniques on a sequence of images captured by the moving camera. This is typically done using feature detection to construct an optical flow from two image frames in a sequence generated from either single cameras or stereo cameras. Using stereo image pairs for each frame helps reduce error and provides additional depth and scale information.

Features are detected in the first frame, and then matched in the second frame. This information is then used to make the optical flow field for the detected features in those two images. The optical flow field illustrates how features diverge from a single point, the focus of expansion. The focus of expansion can be detected from the optical flow field, indicating the direction of the motion of the camera, and thus providing an estimate of the camera motion.
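Locating the focus of expansion from a flow field can be written as a small least-squares problem. The sketch assumes a static scene and a purely translating camera, so that every flow vector lies on a line through the focus of expansion; the function name and the synthetic data are hypothetical.

```python
def focus_of_expansion(points, flows):
    """Least-squares intersection of the lines defined by each point and its flow vector.

    For a point p with flow v, the focus e satisfies cross(v, p - e) = 0, i.e.
    v_y * e_x - v_x * e_y = v_y * p_x - v_x * p_y, which is linear in e.
    """
    saa = sab = sbb = sac = sbc = 0.0
    for (px, py), (vx, vy) in zip(points, flows):
        a, b = vy, -vx
        c = vy * px - vx * py
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("flow vectors are parallel; the focus of expansion is undefined")
    # Solve the 2x2 normal equations with Cramer's rule.
    ex = (sac * sbb - sab * sbc) / det
    ey = (saa * sbc - sab * sac) / det
    return ex, ey

# Synthetic flow radiating from an assumed focus of expansion at pixel (320, 240).
true_foe = (320.0, 240.0)
points = [(100.0, 100.0), (500.0, 120.0), (320.0, 400.0), (50.0, 300.0), (600.0, 450.0)]
flows = [(0.05 * (x - true_foe[0]), 0.05 * (y - true_foe[1])) for x, y in points]

foe = focus_of_expansion(points, flows)
print("estimated focus of expansion:", foe)
```

With noisy real flow the lines no longer meet in a single point, and the least-squares solution returns the point closest to all of them in the algebraic sense; outlier vectors would need to be removed first.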

There are other methods of extracting egomotion information from images as well, including a method that avoids feature detection and optical flow fields and directly uses the image intensities.


References

- Maimone, M.; Cheng, Y.; Matthies, L. (2007). "Two years of Visual Odometry on the Mars Exploration Rovers" (PDF). Journal of Field Robotics. 24 (3): 169–186. CiteSeerX 10.1.1.104.3110. doi:10.1002/rob.20184. S2CID 17544166. Retrieved 2008-07-10.

- Chhaniyara, Savan; Althoefer, Kaspar; Seneviratne, Lakmal D. (2008). "Visual Odometry Technique Using Circular Marker Identification For Motion Parameter Estimation". Advances in Mobile Robotics: Proceedings of the Eleventh International Conference on Climbing and Walking Robots and the Support Technologies for Mobile Machines, Coimbra, Portugal. The Eleventh International Conference on Climbing and Walking Robots and the Support Technologies for Mobile Machines. Vol. 11. World Scientific. Archived from the original on 2012-02-24. Retrieved 2010-01-22.

- Nister, D.; Naroditsky, O.; Bergen, J. (Jan 2004). "Visual Odometry". Computer Vision and Pattern Recognition, 2004. CVPR 2004. Vol. 1. pp. I-652–I-659. doi:10.1109/CVPR.2004.1315094.

- ^ Comport, A.I.; Malis, E.; Rives, P. (2010). F. Chaumette; P. Corke; P. Newman (eds.). "Real-time Quadrifocal Visual Odometry". International Journal of Robotics Research. 29 (2–3): 245–266. CiteSeerX 10.1.1.720.3113. doi:10.1177/0278364909356601. S2CID 15139693.

- Scaramuzza, D.; Siegwart, R. (October 2008). "Appearance-Guided Monocular Omnidirectional Visual Odometry for Outdoor Ground Vehicles". IEEE Transactions on Robotics. 24 (5): 1015–1026. doi:10.1109/TRO.2008.2004490. hdl:20.500.11850/14362. S2CID 13894940.

- Corke, P.; Strelow, D.; Singh, S. "Omnidirectional visual odometry for a planetary rover". Intelligent Robots and Systems, 2004.(IROS 2004). Proceedings. 2004 IEEE/RSJ International Conference on. Vol. 4. doi:10.1109/IROS.2004.1390041.

- Campbell, J.; Sukthankar, R.; Nourbakhsh, I.; Pittsburgh, I.R. "Techniques for evaluating optical flow for visual odometry in extreme terrain". Intelligent Robots and Systems, 2004.(IROS 2004). Proceedings. 2004 IEEE/RSJ International Conference on. Vol. 4. doi:10.1109/IROS.2004.1389991.

- Sunderhauf, N.; Konolige, K.; Lacroix, S.; Protzel, P. (2005). "Visual odometry using sparse bundle adjustment on an autonomous outdoor vehicle". In Levi; Schanz; Lafrenz; Avrutin (eds.). Tagungsband Autonome Mobile Systeme 2005 (PDF). Reihe Informatik aktuell. Springer Verlag. pp. 157–163. Archived from the original (PDF) on 2009-02-11. Retrieved 2008-07-10.

- Konolige, K.; Agrawal, M.; Bolles, R.C.; Cowan, C.; Fischler, M.; Gerkey, B.P. (2008). "Outdoor Mapping and Navigation Using Stereo Vision". Experimental Robotics. Springer Tracts in Advanced Robotics. Vol. 39. pp. 179–190. doi:10.1007/978-3-540-77457-0_17. ISBN 978-3-540-77456-3.

- Olson, C.F.; Matthies, L.; Schoppers, M.; Maimone, M.W. (2002). "Rover navigation using stereo ego-motion" (PDF). Robotics and Autonomous Systems. 43 (4): 215–229. doi:10.1016/s0921-8890(03)00004-6. Retrieved 2010-06-06.

- Cheng, Y.; Maimone, M.W.; Matthies, L. (2006). "Visual Odometry on the Mars Exploration Rovers". IEEE Robotics and Automation Magazine. 13 (2): 54–62. CiteSeerX 10.1.1.297.4693. doi:10.1109/MRA.2006.1638016. S2CID 15149330.

- Engel, Jakob; Schöps, Thomas; Cremers, Daniel (2014). "LSD-SLAM: Large-Scale Direct Monocular SLAM" (PDF). In Fleet D.; Pajdla T.; Schiele B.; Tuytelaars T. (eds.). Computer Vision. European Conference on Computer Vision 2014. Lecture Notes in Computer Science. Vol. 8690. doi:10.1007/978-3-319-10605-2_54.

- Engel, Jakob; Sturm, Jürgen; Cremers, Daniel (2013). "Semi-Dense Visual Odometry for a Monocular Camera" (PDF). IEEE International Conference on Computer Vision (ICCV). CiteSeerX 10.1.1.402.6918. doi:10.1109/ICCV.2013.183.

- Zaman, M. (2007). "High Precision Relative Localization Using a Single Camera". Robotics and Automation, 2007.(ICRA 2007). Proceedings. 2007 IEEE International Conference on. doi:10.1109/ROBOT.2007.364078.

- Zaman, M. (2007). "High resolution relative localisation using two cameras". Journal of Robotics and Autonomous Systems. 55 (9): 685–692. doi:10.1016/j.robot.2007.05.008.

- ^ Irani, M.; Rousso, B.; Peleg S. (June 1994). "Recovery of Ego-Motion Using Image Stabilization" (PDF). IEEE Computer Society Conference on Computer Vision and Pattern Recognition: 21–23. Retrieved 7 June 2010.

- Burger, W.; Bhanu, B. (Nov 1990). "Estimating 3D egomotion from perspective image sequence". IEEE Transactions on Pattern Analysis and Machine Intelligence. 12 (11): 1040–1058. doi:10.1109/34.61704. S2CID 206418830.

- Shakernia, O.; Vidal, R.; Shankar, S. (2003). "Omnidirectional Egomotion Estimation From Back-projection Flow" (PDF). Conference on Computer Vision and Pattern Recognition Workshop. 7: 82. CiteSeerX 10.1.1.5.8127. doi:10.1109/CVPRW.2003.10074. S2CID 5494756. Retrieved 7 June 2010.

- Tian, T.; Tomasi, C.; Heeger, D. (1996). "Comparison of Approaches to Egomotion Computation" (PDF). IEEE Computer Society Conference on Computer Vision and Pattern Recognition: 315. Archived from the original (PDF) on August 8, 2008. Retrieved 7 June 2010.

- ^ Milella, A.; Siegwart, R. (January 2006). "Stereo-Based Ego-Motion Estimation Using Pixel Tracking and Iterative Closest Point" (PDF). IEEE International Conference on Computer Vision Systems: 21. Archived from the original (PDF) on 17 September 2010. Retrieved 7 June 2010.

- Olson, C. F.; Matthies, L.; Schoppers, M.; Maimoneb M. W. (June 2003). "Rover navigation using stereo ego-motion" (PDF). Robotics and Autonomous Systems. 43 (9): 215–229. doi:10.1016/s0921-8890(03)00004-6. Retrieved 7 June 2010.

- Sudin Dinesh, Koteswara Rao, K.; Unnikrishnan, M.; Brinda, V.; Lalithambika, V.R.; Dhekane, M.V. "Improvements in Visual Odometry Algorithm for Planetary Exploration Rovers". IEEE International Conference on Emerging Trends in Communication, Control, Signal Processing & Computing Applications (C2SPCA), 2013