

| {{short description|Software system for finding relevant information on the Web}} | |||

| ] is the world's most popular search engine.]] | |||

| {{About|searching the World Wide Web}} | |||

| '''Web search engines''' provide an interface to search for information on the World Wide Web. Information may consist of web pages, images and other types of files. | |||

| {{pp|small=yes}}{{Use dmy dates|date=December 2024}} | |||

| {{Full citations needed|date=July 2021}} | |||

| ] ] when the user is typing in the ].]] | |||

| A '''search engine''' is a software system that provides hyperlinks to web pages and other relevant information on the Web in response to a user's query. The user inputs a query within a web browser or a mobile app, and the search results are often a list of hyperlinks, accompanied by textual summaries and images. Users also have the option of limiting the search to a specific type of results, such as images, videos, or news. | |||

| For a search provider, its engine is part of a distributed computing system that can encompass many data centers throughout the world. The speed and accuracy of an engine's response to a query are based on a complex system of indexing that is continuously updated by automated web crawlers. This can include data mining the files and databases stored on web servers, although some content is not accessible to crawlers. | |||

| Some search engines also mine data available in newsgroups, databases, or open directories. Unlike web directories, which are maintained by human editors, search engines operate algorithmically or are a mixture of algorithmic and human input. | |||

| There have been many search engines since the dawn of the Web in the 1990s, but Google Search became the dominant one in the 2000s and has remained so. As of early 2024, it has a 90% global market share. Other search engines with a smaller market share include ] at 4%, ] at 2%, and ] at 1%; search engines not listed together hold less than 3%.<ref>{{Cite web |title=Search Engine Market Share Worldwide {{!}} StatCounter Global Stats |url=http://gs.statcounter.com/search-engine-market-share |access-date=19 February 2024 |website=StatCounter}}</ref><ref name="NMS">{{cite web | url=https://www.similarweb.com/engines/ | title=Search Engine Market Share Worldwide | access-date=19 February 2024 | website=Similarweb Top search engines}}</ref> The business of websites improving their visibility in search results, known as marketing and optimization, has thus largely focused on Google. | |||


| == History == | |||


| {{further|Timeline of web search engines}} | |||

| {|class="bordered infobox" | |||

| <!-- Keep this list limited to notable engines (i.e., those that already have Misplaced Pages articles) to avoid link spam --> | |||

| {| class="wikitable sortable" style="margin-left:1em; float:right; width:25em" | |||

| |+ Timeline (]) <!--Note: "Launch" refers only to web availability of original crawl-based web search engine results.--> | |||

| |- | |- | ||

| ! scope="col" | Year | |||

| ! scope="col" | Engine | |||

| ! style="width: 13em;" scope="col" | Current status | |||

| |- | |- | ||

| |rowspan="4" |1993 | |||

| | colspan = "3" | Note: "Launch" refers only to web <br>availability of original crawl-based<br> web search engine results. | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |- | ||

| |] | |||

| !|Year | |||

| |{{Site inactive}} | |||

| !|Engine | |||

| !|Event | |||

| |- | |- | ||

| |] | |||

| ||1993 | |||

| |{{Site inactive}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| | rowspan="3" |1994 | |||

| |{{Site inactive}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| | rowspan="4" |1994 | |||

| ||] | |||

| |] | |||

| ||Launch | |||

| |{{Site active}} | |||

| |- | |- | ||

| |] | |||

| |{{Site inactive}}, redirects to Disney | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| || | |||

| |{{Site active}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| | rowspan="2" |1995 | |||

| |{{Site inactive}}, redirects to Disney | |||

| ||] | |||

| ||Launch (part of ]) | |||

| |- | |- | ||

| | rowspan="7" |1995 | |||

| ||] | |||

| |] | |||

| ||Launch | |||

| |{{Site active}}, initially a search function for ] | |||

| |- | |- | ||

| |] | |||

| | rowspan="3" |1996 | |||

| |{{Site active}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} | |||

| ||Founded | |||

| |- | |- | ||

| |] | |||

| ||] | |||

| |{{Site inactive}} | |||

| ||Founded | |||

| |- | |- | ||

| |] | |||

| || | |||

| |{{Site active}} | |||

| ||] | |||

| ||Founded | |||

| |- | |- | ||

| |] | |||

| ||1997 | |||

| |{{Site active}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| ||1998 | |||

| |{{Site inactive}}, acquired by Yahoo! in 2003, since 2013 redirects to Yahoo! | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |rowspan="4" |1996 | |||

| |] | |||

| |{{Site inactive}}, incorporated into ] in 2000 | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| |{{Site inactive}} (used ] search technology) | |||

| ||Founded | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} (rebranded ask.com) | |||

| ||Founded | |||

| |- | |- | ||

| | rowspan="4" |1997 | |||

| |] | |||

| |{{Site active}} (rebranded ] since 1999) | |||

| ||Founded | |||

| |- | |- | ||

| |] | |||

| |rowspan="1"|2003 | |||

| |{{Site active}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| | rowspan="1" |2004 | |||

| |{{Site inactive}} | |||

| ||] | |||

| ||Final launch | |||

| |- | |- | ||

| |] | |||

| || | |||

| |{{Site active}} | |||

| ||] | |||

| ||Launch | |||

| |- | |- | ||

| | rowspan="4" |1998 | |||

| |] | |||

| |{{Site active}} | |||

| ||Final launch | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} as Startpage.com | |||

| || Launch | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} as Bing | |||

| || Launch | |||

| |- | |- | ||

| |] | |||

| | rowspan= "6" |2006 | |||

| |{{Site inactive}} (merged with NATE) | |||

| ||] | |||

| ||Founded | |||

| |- | |- | ||

| | rowspan="4" |1999 | |||

| ||] | |||

| |] | |||

| ||Founded | |||

| |{{Site inactive}} (URL redirected to Yahoo!) | |||

| |- | |- | ||

| |] | |||

| |{{Site inactive}}, rebranded Yellowee (was redirecting to justlocalbusiness.com) | |||

| || Launch | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} | |||

| ||Launch | |||

| |- | |- | ||

| |] | |||

| || ] | |||

| |{{Site inactive}} (redirect to Ask.com) | |||

| || Beta Launch | |||

| |- | |- | ||

| | rowspan="3" |2000 | |||

| ||] | |||

| |] | |||

| ||Beta Launch | |||

| |{{Site active}} | |||

| |- | |- | ||

| |] | |||

| | rowspan= "3" |2007 | |||

| |{{Site inactive}} | |||

| ||] | |||

| ||Launched | |||

| |- | |- | ||

| |] | |||

| |{{Site inactive}} | |||

| ||Launched | |||

| |- | |- | ||

| ||2001 | |||

| ||] | |||

| |] | |||

| ||Launched | |||

| |{{Site inactive}} | |||

| |- | |- | ||

| | rowspan="1" |2003 | |||

| | | |||

| |] | |||

| |{{Site active}} | |||

| ||Launched | |||

| |- | |||

| | rowspan="4" |2004 | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} (redirect to DuckDuckGo) | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |rowspan="2" |2005 | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site active}}, Google Search | |||

| |- | |||

| | rowspan="6" |2006 | |||

| |] | |||

| |{{Site inactive}}, merged with ] | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} as Bing, rebranded MSN Search | |||

| |- | |||

| | rowspan="4" |2007 | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site active}}, Google Search | |||

| |- | |||

| | rowspan="8" |2008 | |||

| |] | |||

| |{{Site inactive}} (redirects to Bing) | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} (redirects to Ecosia) | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| | rowspan="6" |2009 | |||

| |] | |||

| |{{Site active}}, rebranded Live Search | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}}, sister engine of Ixquick | |||

| |- | |||

| | rowspan="4" |2010 | |||

| |] | |||

| |{{Site inactive}}, sold to IBM | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] (English) | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| ||2011 | |||

| |] | |||

| |{{Site active}}, ] | |||

| |- | |||

| ||2012 | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| ||2013 | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| | rowspan="3" |2014 | |||

| |] | |||

| |{{Site active}}, Kurdish / Sorani | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| | rowspan="2" |2015 | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| |] | |||

| |{{Site inactive}} | |||

| |- | |||

| ||2016 | |||

| |] | |||

| |{{Site active}}, Google Search | |||

| |- | |||

| |2017 | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |2018 | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |2020 | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| | rowspan="3" |2021 | |||

| |] | |||

| |{{Site active}} | |||

| |- | |||

| |Queye | |||

| |{{Site active}} | |||

| |- | |- | ||

| |] | |||

| |{{Site active}} | |||

| |} | |} | ||

| ===Pre-1990s=== | |||

| In 1945, Vannevar Bush described an information retrieval system that would allow a user to access a great expanse of information, all at a single desk.<ref>{{Cite web |last=Bush |first=Vannevar |date=1945-07-01 |title=As We May Think |url=https://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/303881/ |access-date=2024-02-22 |website=The Atlantic |language=en|archive-url=https://web.archive.org/web/20120822132632/http://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/303881/4/|archive-date=2012-08-22}}</ref> He called it a memex. He described the system in an article titled "As We May Think" that was published in The Atlantic Monthly.<ref>{{Cite web|title=Search Engine History.com|url=http://www.searchenginehistory.com/|access-date=2020-07-02|website=www.searchenginehistory.com}}</ref> The memex was intended to give a user the capability to overcome the ever-increasing difficulty of locating information in ever-growing centralized indices of scientific work. Vannevar Bush envisioned libraries of research with connected annotations, which are similar to modern hyperlinks.<ref>{{Cite web|title=Penn State WebAccess Secure Login|url=https://webaccess.psu.edu/?cosign-scripts.libraries.psu.edu&https%3A%2F%2Fscripts.libraries.psu.edu%2Fscripts%2Fezproxyauth.php%3Furl=ezp.2aHR0cHM6Ly9pZWVleHBsb3JlLmllZWUub3JnL3N0YW1wL3N0YW1wLmpzcD90cD0mYXJudW1iZXI9ODUwOTU1MA--|access-date=2020-07-02|website=webaccess.psu.edu|archive-date=2022-01-22|archive-url=https://web.archive.org/web/20220122194212/https://webaccess.psu.edu/?cosign-scripts.libraries.psu.edu&https%3A%2F%2Fscripts.libraries.psu.edu%2Fscripts%2Fezproxyauth.php%3Furl=ezp.2aHR0cHM6Ly9pZWVleHBsb3JlLmllZWUub3JnL3N0YW1wL3N0YW1wLmpzcD90cD0mYXJudW1iZXI9ODUwOTU1MA--|url-status=dead}}</ref> | |||

| The first Web search engine was Wandex, a now-defunct index collected by the World Wide Web Wanderer, a web crawler developed by Matthew Gray at MIT in 1993. Another very early search engine, Aliweb, also appeared in 1993, and still runs today. JumpStation (released in early 1994) used a crawler to find web pages for searching, but search was limited to the titles of web pages only. One of the first "full text" crawler-based search engines was WebCrawler, which came out in 1994. Unlike its predecessors, it let users search for any word in any web page, which has been the standard for all major search engines since. It was also the first one to be widely known by the public. Also in 1994, Lycos (which started at Carnegie Mellon University) was launched and became a major commercial endeavor. A more detailed history of early search engines is given in Chris Sherman's "Search Engine Birthdays".<ref name=SearchEngineWatchHist>"Search Engine Birthdays" (from ]), Chris Sherman, September 2003, web: .</ref> | |||

| Link analysis eventually became a crucial component of search engines through algorithms such as Hyper Search and PageRank.<ref>{{Cite web|title=The Quest for Correct Information on the Web: Hyper Search Engines|first1=Massimo|last1=Marchiori|work=Proceedings of the Sixth International World Wide Web Conference (WWW6)|date=1997|url=http://www.w3.org/People/Massimo/papers/WWW6/Overview.html|access-date=2021-01-10}}</ref><ref name="AnatomyOfSearch">{{cite web|title=The Anatomy of a Large-Scale Hypertextual Web Search Engine|last1=Brin|first1=Sergey|last2=Page|first2=Larry|work=Proceedings of the Seventh International World Wide Web Conference (WWW7)|date=1998|url=http://ilpubs.stanford.edu:8090/361/1/1998-8.pdf|access-date=2021-01-10|archive-date=2017-07-13|archive-url=https://web.archive.org/web/20170713070157/http://ilpubs.stanford.edu:8090/361/1/1998-8.pdf|url-status=dead}}</ref> | |||

| Soon after, many search engines appeared and vied for popularity. These included ], ], ], ], and ]. In some ways, they competed with popular directories such as ]. Later, the directories integrated or added search engine technology for greater functionality. | |||

| ===1990s: Birth of search engines=== | |||


| The first internet search engines predate the debut of the Web in December 1990: WHOIS user search dates back to 1982,{{ref RFC|812}} and the Knowbot Information Service multi-network user search was first implemented in 1989.<ref>{{cite web|url=http://www.cnri.reston.va.us/home/koe/iwooos-full.html|title=Knowbot programming: System support for mobile agents|work=cnri.reston.va.us}}</ref> The first well-documented search engine that searched content files, namely FTP files, was Archie, which debuted on 10 September 1990.<ref>{{cite web|url=https://groups.google.com/forum/#!msg/comp.archives/LWVA50W8BKk/wyRbF_lDc6cJ|title= An Internet archive server server (was about Lisp)|last=Deutsch|first=Peter|date=September 11, 1990|website=groups.google.com|access-date=2017-12-29}}</ref> | |||

| Prior to September 1993, the World Wide Web was entirely indexed by hand. There was a list of webservers edited by Tim Berners-Lee and hosted on the CERN webserver. One snapshot of the list in 1992 remains,<ref>{{cite web|url=http://www.w3.org/History/19921103-hypertext/hypertext/DataSources/WWW/Servers.html |title=World-Wide Web Servers |publisher=W3C |access-date=2012-05-14}}</ref> but as more and more web servers went online, the central list could no longer keep up. On the ] site, new servers were announced under the title "What's New!".<ref>{{cite web|url=http://home.mcom.com/home/whatsnew/whats_new_0294.html |title=What's New! February 1994 |publisher=Mosaic Communications Corporation! |access-date=2012-05-14}}</ref> | |||

| ===Google=== | |||

| Around 2001, the Google search engine rose to prominence. Its success was based in part on the concept of link popularity and PageRank. PageRank takes into account the number of other websites and web pages that link to a given page, on the premise that good or desirable pages are linked to more than others. The PageRank of each linking page, divided by the number of links on that page, contributes to the PageRank of the linked page. This lets Google order its results by a link-based measure of importance rather than by raw link counts alone. Google's minimalist user interface is very popular with users, and has since spawned a number of imitators. | |||
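A minimal sketch of the PageRank idea described above, written as power iteration over a small in-memory link graph. The damping factor of 0.85 follows the value suggested in Brin and Page's 1998 paper; the fixed iteration count, the treatment of pages with no outlinks, and the toy graph are assumptions for illustration. Google's production ranking combines PageRank with many other signals.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank.

    `links` maps each page to the list of pages it links to.
    Returns a dict of scores that sums to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    if not pages:
        return {}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts with the same "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current rank evenly across its outlinks,
                # so one link from a highly ranked page with few outlinks
                # can outweigh many links from obscure pages.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                share = damping * rank[page] / n
                for t in pages:
                    new_rank[t] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
        print(page, round(score, 3))
```

In this toy graph, page `c` ends up ranked highest because three pages link to it, while page `d`, which nothing links to, keeps only the baseline share of (1 - 0.85)/4.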

| The first tool used for searching content (as opposed to users) on the Internet was Archie.<ref name=LeidenUnivSE>{{cite web |url-status=dead |work=Internet History |title=Search Engines |author1=Search Engine Watch |author-link1=Search Engine Watch |publisher=Universiteit Leiden |location=Netherlands |date=September 2001 |url=http://www.internethistory.leidenuniv.nl/index.php3?c=7 |archive-url=https://web.archive.org/web/20090413030108/http://www.internethistory.leidenuniv.nl/index.php3?c=7 |archive-date=2009-04-13 }}</ref> The name stands for "archive" without the "v".<ref name="2020/09/21pcmag"/> It was created by Alan Emtage,<ref name="2020/09/21pcmag">{{cite web | title = Archie | url = https://www.pcmag.com/encyclopedia/term/archie | publisher=]| access-date = 2020-09-20 }}</ref><ref>{{cite web | author = Alexandra Samuel| title = Meet Alan Emtage, the Black Technologist Who Invented ARCHIE, the First Internet Search Engine| date = 21 February 2017| url = https://daily.jstor.org/alan-emtage-first-internet-search-engine/ |publisher= ]| access-date = 2020-09-20 }}</ref><ref>{{cite web | author = loop news barbados | title = Alan Emtage- a Barbadian you should know | url = http://www.loopnewsbarbados.com/content/alan-emtage-barbadian-you-should-know | publisher = loopnewsbarbados.com | access-date = 2020-09-21 | archive-date = 2020-09-23 | archive-url = https://web.archive.org/web/20200923065914/http://www.loopnewsbarbados.com/content/alan-emtage-barbadian-you-should-know | url-status = dead }}</ref><ref>{{cite web | author = Dino Grandoni, Alan Emtage | title = Alan Emtage: The Man Who Invented The World's First Search Engine (But Didn't Patent It)| date = April 2013| url = https://www.huffingtonpost.co.uk/entry/alan-emtage-search-engine_n_2994090?ri18n=true&guccounter=1&guce_referrer=aHR0cHM6Ly9jb25zZW50LnlhaG9vLmNvbS8&guce_referrer_sig=AQAAABveQefuoczW_8_bxwbOgluVTUPvIfv5s_OP1jMgUJd8MCwKc148lvXb7HAHXY48P_Be6wXMW0LKlLRfQzJNalLpuwnp7F6NpbyDC2BG10OveS2qtubkO0PhJ8-juP3M2a9K2ygbWuoUhOCvO-1NA6-YQKA8BtdZEcsfUUI_M-8S | publisher= ].co.uk|access-date = 2020-09-21 }}</ref> a student at McGill University in Montreal, Canada. The program downloaded the directory listings of all the files located on public anonymous FTP (File Transfer Protocol) sites, creating a searchable database of file names; however, Archie did not index the contents of these sites since the amount of data was so limited it could be readily searched manually. | |||
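The filename-only indexing described above can be sketched as follows. The host names, paths, and the case-insensitive substring match on the last path component are illustrative assumptions, not Archie's actual data format or query syntax.

```python
def build_filename_index(listings):
    """Flatten FTP directory listings into (host, path, filename) records.

    Only names are recorded; file contents are never fetched or indexed.
    """
    records = []
    for host, paths in listings.items():
        for path in paths:
            filename = path.rsplit("/", 1)[-1]  # last path component
            records.append((host, path, filename))
    return records


def search_filenames(records, term):
    """Case-insensitive substring match against file names only."""
    term = term.lower()
    return [(host, path) for host, path, name in records if term in name.lower()]


if __name__ == "__main__":
    # Hypothetical anonymous-FTP listings gathered by a periodic sweep.
    listings = {
        "ftp.example.edu": ["/pub/gnu/emacs-18.59.tar.Z", "/pub/docs/README"],
        "ftp.example.org": ["/mirrors/emacs/emacs.faq"],
    }
    index = build_filename_index(listings)
    print(search_filenames(index, "emacs"))
    print(search_filenames(index, "mirrors"))  # directory names are not matched
```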

| Google and most other web search engines use not only PageRank but more than 150 criteria to determine relevance.<ref name=brin>Sergey Brin and Lawrence Page. The Anatomy of a Large-Scale Hypertextual Web Search Engine. Stanford University. 1998.</ref> The algorithm "remembers" where it has been, indexes the number of cross-links, and relates these into groupings; in this way, virtual communities of web pages are found. PageRank is based on citation analysis that was developed in the 1950s by Eugene Garfield at the University of Pennsylvania, and Google's founders cite Garfield's work in their original paper. Teoma's search technology uses a communities approach in its ranking algorithm. ] has worked on similar technology. An influential early approach to web link analysis was developed by Jon Kleinberg and his team while working on the CLEVER project at IBM's Almaden Research Center. Google is currently the most popular search engine. | |||
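Kleinberg's link-analysis work is best known through the HITS (hubs and authorities) algorithm. The following is a simplified sketch, assuming a small in-memory link graph and a fixed number of iterations. The original method runs on a subgraph built around a specific query, which is omitted here.

```python
import math


def hits(links, iterations=50):
    """Simplified HITS: returns (hub, authority) score dicts for a link graph.

    `links` maps each page to the list of pages it links to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    hub = {p: 1.0 for p in pages}
    auth = {p: 1.0 for p in pages}
    for _ in range(iterations):
        # Authority update: sum of the hub scores of pages linking in.
        auth = {p: 0.0 for p in pages}
        for src, targets in links.items():
            for t in targets:
                auth[t] += hub[src]
        # Hub update: sum of the authority scores of pages linked to.
        hub = {p: sum(auth[t] for t in links.get(p, [])) for p in pages}
        # Normalize both vectors to unit length so scores stay bounded.
        a_norm = math.sqrt(sum(v * v for v in auth.values())) or 1.0
        h_norm = math.sqrt(sum(v * v for v in hub.values())) or 1.0
        auth = {p: v / a_norm for p, v in auth.items()}
        hub = {p: v / h_norm for p, v in hub.items()}
    return hub, auth


if __name__ == "__main__":
    graph = {
        "portal1": ["x", "y"],
        "portal2": ["x", "y"],
        "portal3": ["x"],
    }
    hub, auth = hits(graph)
    print(max(auth, key=auth.get), max(hub, key=hub.get))
```

Pages linked to by good hubs become good authorities, and pages that link to good authorities become good hubs. Because the two scores reinforce each other, densely interlinked groups of related pages stand out.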

| The rise of Gopher (created in 1991 by Mark McCahill at the University of Minnesota) led to two new search programs, Veronica and Jughead. Like Archie, they searched the file names and titles stored in Gopher index systems. Veronica (Very Easy Rodent-Oriented Net-wide Index to Computerized Archives) provided a keyword search of most Gopher menu titles in the entire Gopher listings. Jughead (Jonzy's Universal Gopher Hierarchy Excavation And Display) was a tool for obtaining menu information from specific Gopher servers. While the name of the search engine "Archie" was not a reference to the Archie comic book series, "Veronica" and "Jughead" are characters in the series, thus referencing their predecessor. | |||

| ===Yahoo! Search=== | |||

| The two founders of Yahoo!, David Filo and Jerry Yang, Ph.D. candidates in Electrical Engineering at Stanford University, started their guide in a campus trailer in February 1994 as a way to keep track of their personal interests on the Internet. Before long they were spending more time on their home-brewed lists of favourite links than on their doctoral dissertations. Eventually, Yang and Filo's lists became too long and unwieldy, and they broke them out into categories. When the categories became too full, they developed subcategories, and the core concept behind Yahoo! was born. In 2002, Yahoo! acquired Inktomi, and in 2003 it acquired Overture, which owned AlltheWeb and AltaVista. Despite owning its own search engine, Yahoo! initially kept using Google to provide its users with search results on its main website, Yahoo.com. In 2004, however, Yahoo! launched its own search engine based on the combined technologies of its acquisitions, providing a service that gave pre-eminence to the Web search engine over the directory. | |||

| In the summer of 1993, no search engine existed for the web, though numerous specialized catalogs were maintained by hand. Oscar Nierstrasz at the University of Geneva wrote a series of Perl scripts that periodically mirrored these pages and rewrote them into a standard format. This formed the basis for W3Catalog, the web's first primitive search engine, released on September 2, 1993.<ref name="Announcement html">{{cite web |url= http://groups.google.com/group/comp.infosystems.www/browse_thread/thread/2176526a36dc8bd3/2718fd17812937ac?hl=en&lnk=gst&q=Oscar+Nierstrasz#2718fd17812937ac|title=Searchable Catalog of WWW Resources (experimental)|author=Oscar Nierstrasz|date=2 September 1993|author-link=Oscar Nierstrasz}}</ref> | |||

| ===Microsoft=== | |||

| The most recent major search engine (as of 2007) is MSN Search (evolved into Live Search), owned by Microsoft, which previously relied on others for its search engine listings. In 2004, it debuted a beta version of its own results, powered by its own web crawler (called msnbot). In early 2005, it started showing its own results live, and ceased using results from Inktomi, now owned by Yahoo!. In 2006, Microsoft migrated to a new search platform, Windows Live Search, retiring the "MSN Search" name in the process. | |||

| In June 1993, Matthew Gray, then at MIT, produced what was probably the first web robot, the Perl-based World Wide Web Wanderer, and used it to generate an index called "Wandex". The purpose of the Wanderer was to measure the size of the World Wide Web, which it did until late 1995. The web's second search engine, Aliweb, appeared in November 1993. Aliweb did not use a web robot, but instead depended on being notified by website administrators of the existence at each site of an index file in a particular format. | |||

| ===Baidu=== | |||

| Due to the difference between ] and the ], the Chinese search market did not boom until the introduction of ] in 2000.{{Fact|date=March 2007}} | |||

| JumpStation (created in December 1993<ref>{{cite web |url=http://archive.ncsa.uiuc.edu/SDG/Software/Mosaic/Docs/old-whats-new/whats-new-1293.html |archive-url=https://web.archive.org/web/20010620073530/http://archive.ncsa.uiuc.edu/SDG/Software/Mosaic/Docs/old-whats-new/whats-new-1293.html |archive-date=2001-06-20 |title=Archive of NCSA what's new in December 1993 page |date=2001-06-20 |access-date=2012-05-14 |url-status=dead }}</ref> by Jonathon Fletcher) used a web robot to find web pages and to build its index, and used a web form as the interface to its query program. It was thus the first WWW resource-discovery tool to combine the three essential features of a web search engine (crawling, indexing, and searching) as described below. Because of the limited resources available on the platform it ran on, its indexing and hence searching were limited to the titles and headings found in the web pages the crawler encountered. | |||
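The three features named above (crawling, indexing, and searching) can be sketched together in a few functions. The in-memory `WEB` dictionary and its URLs are hypothetical stand-ins for fetching pages over HTTP. Indexing only titles and headings mirrors the limitation described for JumpStation; the breadth-first crawl order and the all-words query semantics are assumptions.

```python
import re
from collections import deque

# Hypothetical in-memory "web": URL -> page. A real crawler would fetch over HTTP.
WEB = {
    "http://a.example/": {"title": "Stirling Computing", "headings": ["Projects"],
                          "body": "jumpstation robot notes",
                          "links": ["http://b.example/"]},
    "http://b.example/": {"title": "Mosaic Tips", "headings": ["Browser Help"],
                          "body": "projects list",
                          "links": ["http://a.example/", "http://c.example/"]},
    "http://c.example/": {"title": "Gopher Links", "headings": [], "body": "",
                          "links": ["http://dead.example/"]},
}


def tokenize(text):
    """Lowercase word tokens; punctuation is discarded."""
    return re.findall(r"[a-z0-9]+", text.lower())


def crawl(seed, web):
    """Breadth-first crawl: visit each reachable URL once, following links."""
    seen, queue, visited = {seed}, deque([seed]), []
    while queue:
        url = queue.popleft()
        page = web.get(url)
        if page is None:
            continue  # dead link: nothing to fetch
        visited.append(url)
        for link in page["links"]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


def build_index(urls, web):
    """Index only titles and headings; body text is ignored."""
    index = {}
    for url in urls:
        page = web[url]
        for word in tokenize(" ".join([page["title"], *page["headings"]])):
            index.setdefault(word, set()).add(url)
    return index


def search(index, query):
    """Return URLs whose title or headings contain every query word."""
    postings = [index.get(w, set()) for w in tokenize(query)]
    return sorted(set.intersection(*postings)) if postings else []


if __name__ == "__main__":
    idx = build_index(crawl("http://a.example/", WEB), WEB)
    print(search(idx, "projects"))     # only the page with "Projects" as a heading
    print(search(idx, "jumpstation"))  # body text was never indexed
```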

| ==Top Providers== | |||

| For statistics see ] | |||

| One of the first "all text" crawler-based search engines was WebCrawler, which came out in 1994. Unlike its predecessors, it allowed users to search for any word in any web page, which has become the standard for all major search engines since. It was also the first search engine to be widely known by the public. Also in 1994, Lycos (which started at Carnegie Mellon University) was launched and became a major commercial endeavor. | |||
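Full-text search of the kind described above is commonly built on an inverted index that maps every word to the pages containing it. A minimal sketch, assuming simple lowercase word tokenization and boolean AND queries; the page IDs and text are hypothetical, and this is not a description of WebCrawler's actual implementation.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase word tokens; punctuation is discarded."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """Map every word of every document (full text, not just titles) to doc IDs."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def search_all(index, query):
    """Documents containing all query words (boolean AND)."""
    postings = [index.get(w, set()) for w in tokenize(query)]
    if not postings:
        return []
    return sorted(set.intersection(*postings))


if __name__ == "__main__":
    docs = {
        "page1": "Gopher and Archie tools for FTP archives",
        "page2": "A crawler that indexes every word",
        "page3": "Every word on every page is searchable",
    }
    idx = build_inverted_index(docs)
    print(search_all(idx, "every word"))
```

Looking words up with `index.get` rather than `index[...]` keeps queries for unknown words from inserting empty entries into the `defaultdict`.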

| ==Challenges faced by Web search engines== | |||

| * The Web is growing much faster than any present-technology search engine can index (see distributed web crawling). In 2006, some users found that major search engines had become slower to index new web pages; indexing times in ] slowed down in December 2005 and January 2006 (reported 18 January 2006). | |||

| * Many webpages are updated frequently, which forces the search engine to revisit them periodically. | |||

| The first popular search engine on the Web was Yahoo! Search.<ref>{{cite web |title=What is first mover? |url=https://searchcio.techtarget.com/definition/first-mover |website=SearchCIO |publisher=] |access-date=5 September 2019 |date=September 2005}}</ref> The first product from Yahoo!, founded by Jerry Yang and David Filo in January 1994, was a web directory called Yahoo! Directory. In 1995, a search function was added, allowing users to search Yahoo! Directory.<ref>{{cite book |last1=Oppitz |first1=Marcus |last2=Tomsu |first2=Peter |title=Inventing the Cloud Century: How Cloudiness Keeps Changing Our Life, Economy and Technology |date=2017 |publisher=Springer |isbn=9783319611617 |page=238 |url=https://books.google.com/books?id=vrEvDwAAQBAJ&pg=PA238}}</ref><ref>{{cite web |title=Yahoo! Search |url=https://www.yahoo.com/search.html |archive-url=https://web.archive.org/web/19961128070718/http://www.yahoo.com/search.html |url-status=dead |archive-date=28 November 1996 |website=Yahoo! |access-date=5 September 2019 |date=28 November 1996}}</ref> It became one of the most popular ways for people to find web pages of interest, but its search function operated on its web directory rather than on full-text copies of web pages. | |||

| * The Web search queries one can make are currently limited to searching for keywords, which may produce many false positives and false negatives (Type I and Type II errors), especially when using the default whole-page search. Better results might be achieved by using a proximity search option with a search bracket that limits matches to a paragraph or phrase, rather than matching random words scattered across large pages. Another alternative is using human operators to do the researching for the user with organic search engines. | |||

| * Dynamically generated sites may be slow or difficult to index, or may produce an excessive number of results, perhaps generating 500 times more web pages than average. For example, for a dynamic web page whose content changes based on entries drawn from a database, a search engine might be asked to index 50,000 static web pages for 50,000 different parameter values passed to that dynamic page. The number of indexed pages grows in step with the number of parameter values: if one parameter value generates 1 indexed web page, 10 values generate 10, and 1,000 values generate 1,000. Some dictionary websites are also indexed using dynamic pages: searching for page counts of URLs containing variations of "dictionary.*" may show page totals, as reported by the search engines, in excess of 50,000 pages. | |||

| Soon after, a number of search engines appeared and vied for popularity. These included ], ], ], ], ], and ]. Information seekers could also browse the directory instead of doing a keyword-based search. | |||

| * Many dynamically generated websites are not indexable by search engines; this phenomenon is known as the ]. Some search engines specialize in crawling the ] by crawling sites that have dynamic content, require forms to be filled out, or are password protected. | |||

| * Relevance: sometimes the engine cannot find what the user is looking for, and returns unwanted, irrelevant sites, ], or ]. | |||

| * Some search engines do not rank results by relevance, but by the amount of money the matching websites pay. | |||

| * In 2006, hundreds of generated websites used tricks to manipulate search engines into displaying them higher in the results for numerous keywords. This can pollute some search engine results with ] that contain little or no information about the matching phrases, pushing the more relevant web pages further down the results list, perhaps by 500 entries or more. For example, many ] create websites containing random sequences of high-traffic keywords, often with misspellings, in order to attract a higher ranking on a search engine. | |||

| * Secure page content hosted on HTTPS URLs poses a challenge for crawlers, which either cannot browse the content for technical reasons or will not index it for privacy reasons. | |||
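The proximity-search option mentioned in the list above can be sketched as a check that all query terms fall within a small window of consecutive words, instead of anywhere on the page. Measuring the window in tokens and the sample text are assumptions for illustration; actual proximity operators and their window definitions differ between engines.

```python
import re


def tokenize(text):
    """Lowercase word tokens; punctuation is discarded."""
    return re.findall(r"[a-z0-9]+", text.lower())


def within_window(text, terms, window):
    """True if every term occurs inside some run of at most `window` consecutive tokens."""
    wanted = {t.lower() for t in terms}
    if not wanted:
        return True
    if window < 1:
        return False
    # Positions of tokens that are query terms, in document order.
    matches = [(i, tok) for i, tok in enumerate(tokenize(text)) if tok in wanted]
    counts = {}  # term -> occurrences inside the current window
    left = 0
    for right in range(len(matches)):
        term_r = matches[right][1]
        counts[term_r] = counts.get(term_r, 0) + 1
        # Shrink from the left until the span fits in the window.
        while matches[right][0] - matches[left][0] + 1 > window:
            term_l = matches[left][1]
            counts[term_l] -= 1
            if counts[term_l] == 0:
                del counts[term_l]
            left += 1
        if len(counts) == len(wanted):
            return True
    return False


if __name__ == "__main__":
    page = "search engines rank pages and much later an unrelated paragraph mentions proximity"
    print(within_window(page, ["search", "proximity"], 5))   # terms too far apart
    print(within_window(page, ["search", "proximity"], 12))  # whole page fits
```

A whole-page keyword match corresponds to a window as large as the page; shrinking the window is what filters out pages where the query words are merely scattered.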

| In 1996, Robin Li developed the Rankdex site-scoring algorithm for search engine results page ranking<ref>Greenberg, Andy, , ''Forbes'' magazine, October 5, 2009</ref><ref>Yanhong Li, "Toward a Qualitative Search Engine", ''IEEE Internet Computing'', vol. 2, no. 4, pp. 24–29, July/Aug. 1998, {{doi|10.1109/4236.707687}}</ref><ref name="rankdex">, ''rankdex.com''</ref> and received a US patent for the technology.<ref>USPTO, , US Patent number: 5920859, Inventor: Yanhong Li, Filing date: Feb 5, 1997, Issue date: Jul 6, 1999</ref> It was the first search engine that used hyperlinks to measure the quality of websites it was indexing,<ref>{{cite web |title=Baidu Vs Google: The Twins Of Search Compared |url=https://fourweekmba.com/baidu-vs-google/ |website=FourWeekMBA |access-date=16 June 2019 |date=18 September 2018}}</ref> predating the very similar algorithm patent filed by Google two years later in 1998.<ref>{{cite web |last1=Altucher |first1=James |title=10 Unusual Things About Google |url=https://www.forbes.com/sites/jamesaltucher/2011/03/18/10-unusual-things-about-google-also-the-worst-vc-decision-i-ever-made/ |date=March 18, 2011 |website=] |access-date=16 June 2019}}</ref> Larry Page referenced Li's work in some of his U.S. patents for PageRank.<ref name="patent">{{cite web |title = Method for node ranking in a linked database |url = https://patents.google.com/patent/US6285999 |publisher = Google Patents |access-date = 19 October 2015 |url-status = live |archive-url = https://web.archive.org/web/20151015185034/http://www.google.com/patents/US6285999 |archive-date = 15 October 2015 }}</ref> Li later used his Rankdex technology for the Baidu search engine, which was founded by him in China and launched in 2000. | |||


| In 1996, ] was looking to give a single search engine an exclusive deal as the featured search engine on Netscape's web browser. There was so much interest that instead, Netscape struck deals with five of the major search engines: for $5 million a year, each search engine would be in rotation on the Netscape search engine page. The five engines were Yahoo!, Magellan, Lycos, Infoseek, and Excite.<ref>{{Cite web|url=http://files.shareholder.com/downloads/YHOO/701084386x0x27155/9a3b5ed8-9e84-4cba-a1e5-77a3dc606566/YHOO_News_1997_7_8_General.pdf|title=Yahoo! And Netscape Ink International Distribution Deal|access-date=2009-08-12|archive-url=https://web.archive.org/web/20131116112021/http://files.shareholder.com/downloads/YHOO/701084386x0x27155/9a3b5ed8-9e84-4cba-a1e5-77a3dc606566/YHOO_News_1997_7_8_General.pdf|archive-date=2013-11-16|url-status=dead}}</ref><ref>{{cite news |date=1 April 1996|title=Browser Deals Push Netscape Stock Up 7.8% |newspaper=Los Angeles Times |url=https://www.latimes.com/archives/la-xpm-1996-04-01-fi-53780-story.html }}</ref> | |||

| ] adopted the idea of selling search terms in 1998 from a small search engine company named ]. This move had a significant effect on the search engine business, which went from struggling to being one of the most profitable businesses on the Internet.{{Citation needed|date=January 2024}} | |||

| Search engines were also known as some of the brightest stars in the Internet investing frenzy that occurred in the late 1990s.<ref>{{cite journal |last=Gandal |first=Neil |year=2001 |title=The dynamics of competition in the internet search engine market |journal=International Journal of Industrial Organization |volume=19 |issue=7 |pages=1103–1117 |doi=10.1016/S0167-7187(01)00065-0 |url= http://www.escholarship.org/uc/item/0h17g08v |issn=0167-7187}}</ref> Several companies entered the market spectacularly, receiving record gains during their ]s. Some have taken down their public search engine and are marketing enterprise-only editions, such as Northern Light. Many search engine companies were caught up in the ], a speculation-driven market boom that peaked in March 2000. | |||

| ===2000s–present: Post dot-com bubble=== | |||

| Around 2000, ] rose to prominence.<ref>{{cite web|url=https://www.google.com/about/company/history/ |title=Our history in depth |access-date=2012-10-31 |url-status=deviated |archive-url= https://web.archive.org/web/20121101210037/https://www.google.com/about/company/history/ |archive-date= November 1, 2012 }}</ref> The company achieved better results for many searches with an algorithm called ], as explained in the paper ''Anatomy of a Search Engine'' written by ] and ], who later founded Google.<ref name="AnatomyOfSearch" /> This ] ranks web pages based on the number and PageRank of other web sites and pages that link there, on the premise that good or desirable pages are linked to more than others. Larry Page's patent for PageRank cites ]'s earlier ] patent as an influence.<ref name="patent"/><ref name="rankdex"/> Google also maintained a minimalist interface to its search engine. In contrast, many of its competitors embedded a search engine in a ]. In fact, the Google search engine became so popular that spoof engines such as ] emerged. | |||
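The link-analysis idea behind PageRank can be illustrated with a short power-iteration sketch. It assumes a toy link graph, a damping factor of 0.85 (a value commonly used in descriptions of PageRank), and that pages with no outgoing links spread their score evenly over all pages. It is not Google's production ranking, which combines many other signals.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Approximate PageRank scores by power iteration.

    links maps each page to the pages it links to. Pages without outgoing
    links (dangling pages) are assumed to spread their score over all pages.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives a baseline share ("random jump" term).
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score in equal parts to the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: "c" is linked from both "b" and "d".
scores = pagerank({"a": ["b"], "b": ["c"], "c": ["a"], "d": ["c"]})
print(max(scores, key=scores.get))
```

In this toy graph, page "c" ends with the highest score because two pages link to it, while "d", which nothing links to, keeps only the baseline share.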

| By 2000, ] was providing search services based on Inktomi's search engine. Yahoo! acquired Inktomi in 2002, and ] (which owned ] and AltaVista) in 2003. Yahoo! relied on Google's search engine until 2004, when it launched its own search engine based on the combined technologies of its acquisitions. | |||

| ] first launched MSN Search in the fall of 1998 using search results from Inktomi. In early 1999, the site began to display listings from ], blended with results from Inktomi. For a short time in 1999, MSN Search used results from AltaVista instead. In 2004, ] began a transition to its own search technology, powered by its own ] (called ]). | |||

| Microsoft's rebranded search engine, ], was launched on June 1, 2009. On July 29, 2009, Yahoo! and Microsoft finalized a deal in which ] would be powered by Microsoft Bing technology. | |||

| {{As of|2019|post=,}} active search engine crawlers include those of Google, ], Baidu, Bing, ], ], ] and ]. | |||

| == Approach == | |||

| {{anchor|Workings}} | |||

| {{main|Search engine technology}} | |||

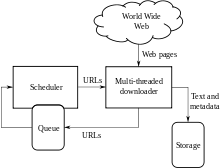

| A search engine maintains the following processes in near real time:<ref>{{cite web |url=https://www.techtarget.com/whatis/definition/search-engine |title=Definition – search engine |website=Techtarget |access-date=1 June 2023}}</ref> | |||

| # ] | # ] | ||

| # ] | # ] | ||

| # ]<ref name=Jawadekar2011>{{citation |year=2011 |author=Jawadekar, Waman S |title=Knowledge Management: Text & Cases |chapter=8. Knowledge Management: Tools and Technology |chapter-url=https://books.google.com/books?id=XmGx4J9daUMC&pg=PA278 |page=278 |place=New Delhi |publisher=Tata McGraw-Hill Education Private Ltd |isbn=978-0-07-07-0086-4 |access-date=November 23, 2012 }}</ref> | |||

| # ] | |||

| Web search engines get their information by ] from site to site. The "spider" first checks for the standard file '']'', which may contain directives addressed to it. The robots.txt file tells search spiders which pages to crawl and which pages not to crawl. Whether or not it finds a robots.txt file, the spider then sends certain information back to be ] depending on many factors, such as the titles, page content, ], ] (CSS), headings, or its ] in HTML ]. After a certain number of pages crawled, amount of data indexed, or time spent on the website, the spider stops crawling and moves on. "No web crawler may actually crawl the entire reachable web. Due to infinite websites, spider traps, spam, and other exigencies of the real web, crawlers instead apply a crawl policy to determine when the crawling of a site should be deemed sufficient. Some websites are crawled exhaustively, while others are crawled only partially".<ref>Dasgupta, Anirban; Ghosh, Arpita; Kumar, Ravi; Olston, Christopher; Pandey, Sandeep; and Tomkins, Andrew. ''The Discoverability of the Web''. http://www.arpitaghosh.com/papers/discoverability.pdf</ref> | |||
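Python's standard-library <code>urllib.robotparser</code> module can illustrate how a crawler might honor robots.txt before fetching a page. The robots.txt contents, the crawler names ExampleBot and OtherBot, and the example.com URLs below are hypothetical. A real crawler would also fetch the file over the network and apply its own crawl policy.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: all crawlers are kept out of /private/,
# and a crawler named ExampleBot is kept out of the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: ExampleBot
Disallow: /
"""


def allowed_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return the subset of urls that robots_txt permits user_agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network access
    return [u for u in urls if parser.can_fetch(user_agent, u)]


candidates = [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/about",
]
print(allowed_urls(ROBOTS_TXT, "OtherBot", candidates))
print(allowed_urls(ROBOTS_TXT, "ExampleBot", candidates))
```

OtherBot falls under the <code>*</code> group and may crawl everything except <code>/private/</code>, while ExampleBot matches its own group and is refused every URL.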

| Indexing means associating words and other definable tokens found on web pages with their domain names and ]-based fields. The associations are made in a public database, made available for web search queries. A query from a user can be a single word, multiple words or a sentence. The index helps find information relating to the query as quickly as possible.<ref name=Jawadekar2011/> Some of the techniques for indexing and ] are trade secrets, whereas web crawling is a straightforward process of visiting all sites on a systematic basis. | |||
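A minimal in-memory sketch of these word-to-page associations is an inverted index, which maps each token to the pages containing it. The tokenizer (lowercase alphanumeric runs) and the recording of word positions are simplifying assumptions; production indexes add stemming, compression and distributed storage.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, dict[str, list[int]]]:
    """Map each token to {page: [word positions]} for every page containing it."""
    index: dict[str, dict[str, list[int]]] = defaultdict(dict)
    for page, text in pages.items():
        for pos, token in enumerate(tokenize(text)):
            index[token].setdefault(page, []).append(pos)
    return index


# Hypothetical two-page collection.
idx = build_index({"a": "Search engines crawl the web", "b": "The web is large"})
print(idx["web"])
```

Answering a one-word query then becomes a dictionary lookup rather than a scan of every page; keeping positions also makes phrase and proximity matching possible later.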

| Between visits by the ''spider'', the ] version of the page (some or all of the content needed to render it) stored in the search engine's working memory is quickly sent to an inquirer. If a visit is overdue, the search engine can simply act as a ] instead. In this case, the page may differ from the search terms indexed.<ref name=Jawadekar2011/> The cached page preserves the appearance of the version whose words were previously indexed, so a cached copy can be useful when the actual page has been lost; however, this mismatch between the cached and live page is also considered a mild form of ]. | |||

| <!-- perhaps at web cache: | |||

| , and Google's handling of it increases ] by satisfying ] that the search terms will be on the returned webpage. This satisfies the ], since the user normally expects that the search terms will be on the returned pages. Increased search relevance makes these cached pages very useful as they may contain data that may no longer be available elsewhere.{{Citation needed|date=November 2012}} | |||

| --> | |||


| Typically when a user enters a ] into a search engine it is a few ].<ref>Jansen, B. J., Spink, A., and Saracevic, T. 2000. . 36(2), 207–227.</ref> The ] already has the names of the sites containing the keywords, and these are instantly obtained from the index. The real processing load is in generating the web pages that are the search results list: Every page in the entire list must be ] according to information in the indexes.<ref name=Jawadekar2011/> Then the top search result item requires the lookup, reconstruction, and markup of the '']'' showing the context of the keywords matched. These are only part of the processing each search results web page requires, and further pages (next to the top) require more of this post-processing. | |||
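The snippet step can be sketched as follows: find the first matched keyword in a page's stored text and return a few surrounding words with the match highlighted. The window size, the <code>&lt;b&gt;</code> markup and whitespace tokenization are illustrative assumptions, not any particular engine's method.

```python
import re


def _normalize(word: str) -> str:
    """Strip punctuation and lowercase a word for comparison."""
    return re.sub(r"\W+", "", word).lower()


def make_snippet(text: str, query_terms: list[str], window: int = 3) -> str:
    """Return up to `window` words on each side of the first query-term match,
    with matched words wrapped in <b> tags; return "" if no term occurs."""
    words = text.split()
    terms = {t.lower() for t in query_terms}
    for i, word in enumerate(words):
        if _normalize(word) in terms:
            start, end = max(0, i - window), min(len(words), i + window + 1)
            excerpt = [f"<b>{w}</b>" if _normalize(w) in terms else w
                       for w in words[start:end]]
            prefix = "... " if start > 0 else ""        # text was cut on the left
            suffix = " ..." if end < len(words) else ""  # text was cut on the right
            return prefix + " ".join(excerpt) + suffix
    return ""


print(make_snippet("The quick brown fox jumps over the lazy dog today", ["lazy"], window=2))
```

With a window of two words, this prints <code>... over the &lt;b&gt;lazy&lt;/b&gt; dog today</code>.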

| Beyond simple keyword lookups, search engines offer their own ]- or command-driven operators and search parameters to refine the search results. These give users the controls they need for a feedback loop in which they ''filter'' and ''weight'' results to refine a search, starting from the first pages of results. | |||

| For example, from 2007 the Google.com search engine allowed users to ''filter'' by date by clicking "Show search tools" in the leftmost column of the initial search results page, and then selecting the desired date range.<ref>{{cite web|last1=Chitu|first1=Alex|title=Easy Way to Find Recent Web Pages|url=http://googlesystem.blogspot.com/2007/08/easy-way-to-find-recent-web-pages.html|website=Google Operating System|access-date=22 February 2015|date=August 30, 2007}}</ref> It is also possible to ''weight'' by date because each page has a modification time. Most search engines support the use of the ]s AND, OR and NOT to help end users refine the ]. Boolean operators are for literal searches that allow the user to refine and extend the terms of the search. The engine looks for the words or phrases exactly as entered. Some search engines provide an advanced feature called ], which allows users to define the distance between keywords.<ref name=Jawadekar2011/> There is also ], in which the search uses statistical analysis of pages containing the words or phrases searched for. | |||
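Boolean operators and proximity search map naturally onto set operations over an inverted index. The sketch below is self-contained and assumes a toy three-page collection; the function names and the word-distance definition of proximity are illustrative, and real engines parse query syntax and handle phrases differently.

```python
import re
from collections import defaultdict


def build_index(pages: dict[str, str]) -> dict[str, dict[str, list[int]]]:
    """Inverted index: token -> {page: [word positions]}."""
    index: dict[str, dict[str, list[int]]] = defaultdict(dict)
    for page, text in pages.items():
        for pos, token in enumerate(re.findall(r"[a-z0-9]+", text.lower())):
            index[token].setdefault(page, []).append(pos)
    return index


def docs(index: dict[str, dict[str, list[int]]], term: str) -> set[str]:
    """Pages containing term (empty set if the term is not indexed)."""
    return set(index.get(term.lower(), {}))


def near(index: dict[str, dict[str, list[int]]], a: str, b: str, k: int) -> set[str]:
    """Proximity search: pages where a and b occur within k word positions."""
    pa, pb = index.get(a.lower(), {}), index.get(b.lower(), {})
    return {page for page in pa.keys() & pb.keys()
            if any(abs(i - j) <= k for i in pa[page] for j in pb[page])}


# Hypothetical collection of three pages.
PAGES = {
    "p1": "cheap flights to paris",
    "p2": "paris hotels and cheap food",
    "p3": "flights to rome",
}
index = build_index(PAGES)
all_pages = set(PAGES)

cheap_and_paris = docs(index, "cheap") & docs(index, "paris")       # cheap AND paris
flights_or_hotels = docs(index, "flights") | docs(index, "hotels")  # flights OR hotels
flights_not_rome = docs(index, "flights") - docs(index, "rome")     # flights NOT rome
not_cheap = all_pages - docs(index, "cheap")                        # NOT cheap
print(sorted(cheap_and_paris), sorted(near(index, "cheap", "flights", 1)))
```

AND, OR and NOT become set intersection, union and difference; the proximity check additionally compares stored word positions, which is why the index records them.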

| The usefulness of a search engine depends on the ] of the ''result set'' it gives back. While there may be millions of web pages that include a particular word or phrase, some pages may be more relevant, popular, or authoritative than others. Most search engines employ methods to ] the results to provide the "best" results first. How a search engine decides which pages are the best matches, and what order the results should be shown in, varies widely from one engine to another.<ref name=Jawadekar2011/> The methods also change over time as Internet usage changes and new techniques evolve. There are two main types of search engine that have evolved: one is a system of predefined and hierarchically ordered keywords that humans have programmed extensively. The other is a system that generates an "]" by analyzing texts it locates. This second form relies much more heavily on the computer itself to do the bulk of the work. | |||

| Most Web search engines are commercial ventures supported by ] revenue and thus some of them allow advertisers to ] in search results for a fee. Search engines that do not accept money for their search results make money by running ] alongside the regular search engine results. The search engines make money every time someone clicks on one of these ads.<ref>{{cite web|title=how search engine works?|url=http://globalforumonline.com/detail/how-does-search-engine-works/|publisher= GFO | access-date = 26 June 2018}}</ref> | |||

| === Local search === | |||

| ] is the process of optimizing the online presence of local businesses. It focuses on making sure that a business's information is consistent across all searches. It matters because many people decide where to go and what to buy based on their searches.<ref>{{Cite web|url=https://www.searchenginejournal.com/local-seo/what-is-local-seo-why-local-search-is-important/|title=What Is Local SEO & Why Local Search Is Important|website=Search Engine Journal|language=en|access-date=2020-04-26}}</ref> | |||

| ==Market share== | |||

| {{As of|2022|01|post=,}} ] is by far the world's most used search engine, with a market share of 90%, followed by ] at 4%, ] at 2%, and ] at 1%. Other search engines not listed have less than a 3% market share.<ref name="NMS" /> In 2024, Google's dominance was ruled an illegal monopoly in a case brought by the US Department of Justice.<ref>{{cite web | last=Kerr | first=Dara | title=U.S. v. Google: As landmark 'monopoly power' trial closes, here's what to look for | website=] | date=2024-05-02 | url=https://www.npr.org/2024/05/02/1248152695/google-doj-monopoly-trial-antitrust-closing-arguments}}</ref> | |||

| <graph>{ | |||

| "version": 2, | |||

| "width": 650, | |||

| "height": 260, | |||

| "data": [ | |||

| { | |||

| "name": "table", | |||

| "values": [ | |||

| { | |||

| "x": "Google 90.6%", | |||

| "y": 92.01 | |||

| }, | |||

| { | |||

| "x": "Bing 3.25%", | |||

| "y": 3.25 | |||

| }, | |||

| { | |||

| "x": "Yahoo! 3.12%", | |||

| "y": 3.12 | |||

| }, | |||

| { | |||

| "x": "Baidu 1.17%", | |||

| "y": 1.17 | |||

| }, | |||

| { | |||

| "x": "Yandex 1.06%", | |||

| "y": 1.06 | |||

| }, | |||

| { | |||

| "x": "DuckDuckGo 0.68%", | |||

| "y": 0.68 | |||

| } | |||

| ] | |||

| } | |||

| ], | |||

| "scales": [ | |||

| { | |||

| "name": "x", | |||

| "type": "ordinal", | |||

| "range": "width", | |||

| "zero": false, | |||

| "domain": { | |||

| "data": "table", | |||

| "field": "x" | |||

| } | |||

| }, | |||

| { | |||

| "name": "y", | |||

| "type": "linear", | |||

| "range": "height", | |||

| "nice": true, | |||

| "domain": { | |||

| "data": "table", | |||

| "field": "y" | |||

| } | |||

| } | |||

| ], | |||

| "axes": [ | |||

| { | |||

| "type": "x", | |||

| "scale": "x" | |||

| }, | |||

| { | |||

| "type": "y", | |||

| "scale": "y" | |||

| } | |||

| ], | |||

| "marks": [ | |||

| { | |||

| "type": "rect", | |||

| "from": { | |||

| "data": "table" | |||

| }, | |||

| "properties": { | |||

| "enter": { | |||

| "x": { | |||

| "scale": "x", | |||

| "field": "x" | |||

| }, | |||

| "y": { | |||

| "scale": "y", | |||

| "field": "y" | |||

| }, | |||

| "y2": { | |||

| "scale": "y", | |||

| "value": 0 | |||

| }, | |||

| "fill": { | |||

| "value": "steelblue" | |||

| }, | |||

| "width": { | |||

| "scale": "x", | |||

| "band": "true", | |||

| "offset": -1 | |||

| } | |||

| } | |||

| } | |||

| } | |||

| ], | |||

| "padding": { | |||

| "top": 30, | |||

| "bottom": 30, | |||

| "left": 50, | |||

| "right": 50 | |||

| } | |||

| }</graph> | |||

| === Russia and East Asia === | |||

| {{update section|date=December 2023}} | |||

| In Russia, ] has a market share of 62.6%, compared to Google's 28.3%. Yandex is the second most used search engine on smartphones in Asia and Europe.<ref>{{cite web|url=http://www.liveinternet.ru/stat/ru/searches.html?slice=ru;period=week|title=Live Internet - Site Statistics|publisher=Live Internet|access-date=2014-06-04}}</ref> In China, Baidu is the most popular search engine.<ref>{{cite news |url=https://www.theguardian.com/world/2014/jun/03/chinese-technology-companies-huawei-dominate-world|title=The Chinese technology companies poised to dominate the world |newspaper=The Guardian |author=Arthur, Charles |date=2014-06-03 |access-date=2014-06-04}}</ref> South Korea-based search portal ] is used for 62.8% of online searches in the country.<ref>{{cite news|url=https://blogs.wsj.com/korearealtime/2014/05/21/how-naver-hurts-companies-productivity/|title=How Naver Hurts Companies' Productivity |newspaper=The Wall Street Journal |date=2014-05-21|access-date=2014-06-04}}</ref> ] and ] are the most popular choices for Internet searches in Japan and Taiwan, respectively.<ref>{{cite web |title=Age of Internet Empires |url=https://geography.oii.ox.ac.uk/age-of-internet-empires/ |publisher=Oxford Internet Institute |access-date=15 August 2019}}</ref> China is one of the few countries where Google is not among the top three web search engines by market share. Google was previously more popular in China, but largely withdrew after a disagreement with the government over censorship and a cyberattack. Bing, however, is among the top three, with a market share of 14.95%. 
Baidu is top with 49.1% of the market share.<ref>{{Cite web |url=https://www.theatlantic.com/technology/archive/2016/01/why-google-quit-china-and-why-its-heading-back/424482/|title=Why Google Quit China—and Why It's Heading Back |last=Waddell|first=Kaveh|date=2016-01-19|website=The Atlantic|language=en-US|access-date=2020-04-26}}</ref>{{Failed verification|date=April 2024|reason=The statement "But Bing is in top three web search engine with a market share of 14.95%. Baidu is on top with 49.1% market share." has nothing to do with any of the linked article's content.}} | |||

| ===Europe=== | |||

| Most countries' markets in the European Union are dominated by Google, except for the ], where ] is a strong competitor.<ref>{{cite web | last=Kissane | first=Dylan | title=Seznam Takes on Google in the Czech Republic | website=DOZ | date=2015-08-05 | url=https://www.doz.com/search-engine/seznam-search-engine}}</ref> | |||

| The search engine ] is based in ], ], from which it attracts most of its 50 million monthly registered users. | |||

| == Search engine bias == | |||

| Although search engines are programmed to rank websites based on some combination of their popularity and relevancy, empirical studies indicate various political, economic, and social biases in the information they provide<ref>Segev, El (2010). , Oxford: Chandos Publishing.</ref><ref name=vaughan-thelwall>{{cite journal|last=Vaughan|first=Liwen|author2=Mike Thelwall |title=Search engine coverage bias: evidence and possible causes|journal=Information Processing & Management|year=2004|volume=40|issue=4|pages=693–707|doi=10.1016/S0306-4573(03)00063-3|citeseerx=10.1.1.65.5130|s2cid=18977861 }}</ref> and the underlying assumptions about the technology.<ref>Jansen, B. J. and Rieh, S. (2010) . Journal of the American Society for Information Sciences and Technology. 61(8), 1517–1534.</ref> These biases can be a direct result of economic and commercial processes (e.g., companies that advertise with a search engine can also become more popular in its ] results), and political processes (e.g., the removal of search results to comply with local laws).<ref>Berkman Center for Internet & Society (2002), , Harvard Law School.</ref> For example, Google will not surface certain ] websites in France and Germany, where ] is illegal. | |||

| Biases can also be a result of social processes, as search engine algorithms are frequently designed to exclude non-normative viewpoints in favor of more "popular" results.<ref>{{cite journal|last=Introna|first=Lucas|author2=Helen Nissenbaum |title=Shaping the Web: Why the Politics of Search Engines Matters|journal=The Information Society|year=2000|volume=16|issue=3|pages=169–185|doi=10.1080/01972240050133634|citeseerx=10.1.1.24.8051|s2cid=2111039|author2-link=Helen Nissenbaum}}</ref> Indexing algorithms of major search engines skew towards coverage of U.S.-based sites, rather than websites from non-U.S. countries.<ref name=vaughan-thelwall /> | |||

| ] is one example of an attempt to manipulate search results for political, social or commercial reasons. | |||

| Several scholars have studied the cultural changes triggered by search engines,<ref>{{Cite book|title = Google and the Culture of Search|url = https://archive.org/details/googlecultureofs0000hill|url-access = registration|publisher = Routledge|date = 2012-10-12|isbn = 9781136933066|first1 = Ken|last1 = Hillis|first2 = Michael|last2 = Petit|first3 = Kylie|last3 = Jarrett}}</ref> and the representation of certain controversial topics in their results, such as ],<ref>{{Cite book|volume = 14|publisher = Springer Berlin Heidelberg|date = 2008-01-01|isbn = 978-3-540-75828-0|pages = 151–175|series = Information Science and Knowledge Management|doi = 10.1007/978-3-540-75829-7_10|first = P.|last = Reilly| title=Web Search | chapter='Googling' Terrorists: Are Northern Irish Terrorists Visible on Internet Search Engines? |s2cid = 84831583|editor-first = Prof Dr Amanda|editor-last = Spink|editor-first2 = Michael|editor-last2 = Zimmer|bibcode = 2008wsis.book..151R}}</ref> ],<ref>], "", The New York Times, Dec. 29, 2017. Retrieved November 14, 2018.</ref> and ].<ref>{{cite journal|url = http://firstmonday.org/ojs/index.php/fm/article/view/5597|title = Google chemtrails: A methodology to analyze topic representation in search engines|journal = First Monday|volume = 20|issue = 7|last = Ballatore|first = A|doi = 10.5210/fm.v20i7.5597|year = 2015 | doi-access=free }}</ref> | |||

| == Customized results and filter bubbles == | |||

| Concern has been raised that search engines such as Google and Bing provide customized results based on the user's activity history, leading to what were termed echo chambers or ]s by ] in 2011.<ref>{{Cite book |last=Pariser |first=Eli |url=https://www.worldcat.org/oclc/682892628 |title=The filter bubble : what the Internet is hiding from you |date=2011 |publisher=Penguin Press |isbn=978-1-59420-300-8 |location=New York |oclc=682892628}}</ref> The argument is that search engines and social media platforms use ]s to selectively guess what information a user would like to see, based on information about the user (such as location, past click behaviour and search history). As a result, websites tend to show only information that agrees with the user's past viewpoint. According to ], users get less exposure to conflicting viewpoints and are isolated intellectually in their own informational bubble. Since this problem was identified, competing search engines that seek to avoid it by not tracking or "bubbling" users, such as ], have emerged. However, many scholars have questioned Pariser's view, finding that there is little evidence for the filter bubble.<ref>{{Cite journal|title = In Worship of an Echo|journal = IEEE Internet Computing|date = 2014-07-01|issn = 1089-7801|pages = 79–83|volume = 18|issue = 4|doi = 10.1109/MIC.2014.71|first = K.|last = O'Hara|s2cid = 37860225|doi-access = free}}</ref><ref name=":0">{{Cite journal |last=Bruns |first=Axel |date=2019-11-29 |title=Filter bubble |url=https://policyreview.info/node/1426 |journal=Internet Policy Review |language=en |volume=8 |issue=4 |doi=10.14763/2019.4.1426 |s2cid=211483210 |issn=2197-6775|doi-access=free |hdl=10419/214088 |hdl-access=free }}</ref><ref name=":1">{{Cite journal |last1=Haim |first1=Mario |last2=Graefe |first2=Andreas |last3=Brosius |first3=Hans-Bernd |date=2018 |title=Burst of the Filter Bubble? 
|journal=Digital Journalism |volume=6 |issue=3 |pages=330–343 |doi=10.1080/21670811.2017.1338145 |s2cid=168906316 |issn=2167-0811|doi-access=free }}</ref> On the contrary, a number of studies trying to verify the existence of filter bubbles have found only minor levels of personalisation in search,<ref name=":1" /> that most people encounter a range of views when browsing online, and that Google News tends to promote mainstream established news outlets.<ref>{{Cite journal |last1=Nechushtai |first1=Efrat |last2=Lewis |first2=Seth C. |date=2019 |title=What kind of news gatekeepers do we want machines to be? Filter bubbles, fragmentation, and the normative dimensions of algorithmic recommendations |url=https://linkinghub.elsevier.com/retrieve/pii/S0747563218303650 |journal=Computers in Human Behavior |language=en |volume=90 |pages=298–307 |doi=10.1016/j.chb.2018.07.043|s2cid=53774351 }}</ref><ref name=":0" /> | |||

| == Religious search engines == | |||

| The global growth of the Internet and electronic media in the ] and ] world during the last decade has encouraged Islamic adherents in ] and ] to attempt their own search engines: filtered search portals that would enable users to perform ]. Going beyond the usual ''safe search'' filters, these Islamic web portals categorize websites as either "]" or "]" based on interpretation of ]. ] came online in September 2011. ] came online in July 2013. These use ] filters on the collections from ] and ] (and others).<ref>{{cite web |url=http://news.msn.com/science-technology/new-islam-approved-search-engine-for-muslims |title=New Islam-approved search engine for Muslims |publisher=News.msn.com |access-date=2013-07-11 |archive-url=https://web.archive.org/web/20130712023215/http://news.msn.com/science-technology/new-islam-approved-search-engine-for-muslims |archive-date=2013-07-12 |url-status=dead }}</ref> | |||

| A lack of investment and the slow pace of technological development in the Muslim world have hindered the progress of Islamic search engines aimed mainly at Islamic adherents; projects such as ] (a Muslim lifestyle site) received millions of dollars from investors like Rite Internet Ventures but also faltered. Other religion-oriented search engines are Jewogle, the Jewish version of Google,<ref>{{Cite web | url=http://www.jewogle.com/faq/ | title=Jewogle - FAQ | access-date=2019-02-06 | archive-date=2019-02-07 | archive-url=https://web.archive.org/web/20190207015631/http://www.jewogle.com/faq/ | url-status=dead }}</ref> and the Christian search engine SeekFind.org. SeekFind filters out sites that attack or degrade the Christian faith.<ref>{{cite web|url=http://allchristiannews.com/halalgoogling-muslims-get-their-own-sin-free-google-should-christians-have-christian-google/|title=Halalgoogling: Muslims Get Their Own "sin free" Google; Should Christians Have Christian Google? - Christian Blog|work=Christian Blog|date=2013-07-25|access-date=2014-09-13|archive-date=2014-09-13|archive-url=https://web.archive.org/web/20140913102516/http://allchristiannews.com/halalgoogling-muslims-get-their-own-sin-free-google-should-christians-have-christian-google/|url-status=dead}}</ref> | |||

| == Search engine submission == | |||

| Web search engine submission is a process in which a webmaster submits a website directly to a search engine. While search engine submission is sometimes presented as a way to promote a website, it generally is not necessary because the major search engines use web crawlers that will eventually find most web sites on the Internet without assistance. Webmasters can either submit one web page at a time or submit the entire site using a ], but it is normally only necessary to submit the ] of a web site, as search engines are able to crawl a well-designed website. There are two remaining reasons to submit a web site or web page to a search engine: to add an entirely new web site without waiting for a search engine to discover it, and to have a web site's record updated after a substantial redesign. | |||
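A sitemap submitted this way is typically an XML file following the Sitemaps protocol (schema version 0.9, published at sitemaps.org). The sketch below builds a minimal one with Python's standard <code>xml.etree.ElementTree</code>. The URLs and dates are hypothetical, and optional elements such as <code>changefreq</code> and <code>priority</code> are omitted.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Build a sitemap.xml document from (loc, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # page address
        ET.SubElement(url, "lastmod").text = lastmod  # last modification date (W3C date format)
    # Write the declaration by hand so it always states UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://example.com/", "2024-01-01"),
    ("https://example.com/about", "2024-02-15"),
]))
```

The resulting file is usually placed at the site root and can also be referenced from robots.txt, so crawlers can find it without a manual submission.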

| Some search engine submission software not only submits websites to multiple search engines, but also adds links to websites from their own pages. This could appear helpful in increasing a website's ], because external links are one of the most important factors determining a website's ranking. However, John Mueller of ] has stated that this "can lead to a tremendous number of unnatural links for your site" with a negative impact on site ranking.<ref name="CanBeHarmful">{{cite news |last=Schwartz |first=Barry |author-link=Barry Schwartz (technologist) |url=https://www.seroundtable.com/search-engine-submission-google-15906.html |title=Google: Search Engine Submission Services Can Be Harmful |work=] |date=2012-10-29 |access-date=2016-04-04 }}</ref> | |||

| == Comparison to social bookmarking == | |||

| {{trim|{{#section-h:Social bookmarking|Comparison with search engines}}}} | |||

| == Technology == | |||

| {{cleanup split|Search engine technology#Web search engines|21=section|date=November 2022}} | |||

| ===Archie=== | |||

| The first Internet search engine was ], created in 1990<ref name="intelligent-technologies">{{cite book|author1=Priti Srinivas Sajja|author2=Rajendra Akerkar|title=Intelligent technologies for web applications|date=2012|publisher=CRC Press|location=Boca Raton|isbn=978-1-4398-7162-1|page=87|url=https://books.google.com/books?id=HqXxoWK7tucC&q=the+University+of+Nevada+System+Computing+Services+group+developed+Veronica.&pg=PA87|accessdate=3 June 2014}}</ref> by ], a student at ] in Montreal. The author originally wanted to call the program "archives", but had to shorten it to comply with the Unix world standard of assigning programs and files short, cryptic names such as grep, cat, troff, sed, awk, perl, and so on. | |||

| The primary method of storing and retrieving files was via the ] (FTP). FTP was (and still is) a protocol that specifies a common way for computers to exchange files over the Internet. It works like this: an administrator who wants to make files available from a computer sets up a program on it called an FTP server. When someone on the Internet wants to retrieve a file from this computer, they connect to it via another program called an FTP client. Any FTP client program can connect with any FTP server program as long as the client and server programs both fully follow the specifications set forth in the FTP protocol. | |||

| Initially, anyone who wanted to share a file had to set up an FTP server in order to make the file available to others. Later, "anonymous" FTP sites became repositories for files, allowing all users to post and retrieve them. | |||

| Even with archive sites, many important files were still scattered on small FTP servers. These files could be located only by the Internet equivalent of word of mouth: Somebody would post an e-mail to a message list or a discussion forum announcing the availability of a file. | |||

| Archie changed all that. It combined a script-based data gatherer, which fetched site listings of anonymous FTP files, with a regular expression matcher for retrieving file names matching a user query. In other words, Archie's gatherer scoured FTP sites across the Internet and indexed all of the files it found. Its regular expression matcher provided users with access to its database.<ref name="wileyhistory">{{cite web|title=A History of Search Engines|url=http://www.wiley.com/legacy/compbooks/sonnenreich/history.html|publisher=Wiley|accessdate=1 June 2014}}</ref> | |||
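Archie's two parts, a gatherer that collected file listings and a regular-expression matcher that searched them, can be mimicked in a few lines. The host names and file paths below are hypothetical stand-ins for gathered FTP listings, and the original Archie was not implemented this way.

```python
import re

# Hypothetical output of the gatherer: FTP host -> file paths found there.
LISTINGS = {
    "ftp.example.edu": ["/pub/gnu/emacs-18.59.tar.Z", "/pub/doc/rfc959.txt"],
    "ftp.example.org": ["/mirrors/gnu/gcc-1.40.tar.Z", "/pub/games/nethack.tar"],
}


def archie_search(pattern: str, listings: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return (host, path) pairs whose file name matches the regular expression."""
    regex = re.compile(pattern)
    hits = []
    for host, paths in listings.items():
        for path in paths:
            name = path.rsplit("/", 1)[-1]  # match on the file name, not the directory
            if regex.search(name):
                hits.append((host, path))
    return hits


for host, path in archie_search(r"\.tar\.Z$", LISTINGS):
    print(host, path)
```

The result tells the user which host holds a matching file, after which they would still fetch it with an ordinary FTP client.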

| ===Veronica=== | |||

| In 1993, the University of Nevada System Computing Services group developed ].<ref name="intelligent-technologies"/> It was created as a type of searching device similar to Archie but for Gopher files. Another Gopher search service, called Jughead, appeared a little later, probably for the sole purpose of rounding out the comic-strip triumvirate. Jughead is an acronym for Jonzy's Universal Gopher Hierarchy Excavation and Display, although, like Veronica, it is probably safe to assume that the creator backed into the acronym. Jughead's functionality was nearly identical to Veronica's, although it appears to be a little rougher around the edges.<ref name="wileyhistory"/> | |||

| ===The Lone Wanderer=== | |||

| The ], developed by Matthew Gray in 1993,<ref>{{cite book|author1=Priti Srinivas Sajja|author2=Rajendra Akerkar|title=Intelligent technologies for web applications|date=2012|publisher=CRC Press|location=Boca Raton|isbn=978-1-4398-7162-1|page=86|url=https://books.google.com/books?id=HqXxoWK7tucC&q=the+University+of+Nevada+System+Computing+Services+group+developed+Veronica.&pg=PA87|accessdate=3 June 2014}}</ref> was the first robot on the Web and was designed to track the Web's growth. Initially, the Wanderer counted only Web servers, but shortly after its introduction, it started to capture URLs as it went along. The database of captured URLs became the Wandex, the first web database. | |||

| Matthew Gray's Wanderer created quite a controversy at the time, partially because early versions of the software ran rampant through the Net and caused a noticeable netwide performance degradation. This degradation occurred because the Wanderer would access the same page hundreds of times a day. The Wanderer soon amended its ways, but the controversy over whether robots were good or bad for the Internet remained. | |||

| In response to the Wanderer, Martijn Koster created Archie-Like Indexing of the Web, or ALIWEB, in October 1993. As the name implies, ALIWEB was the HTTP equivalent of Archie, which made it unusual in several ways. | |||

| ALIWEB did not have a web-searching robot. Instead, webmasters of participating sites posted their own index information for each page they wanted listed. The advantage of this method was that site owners could describe their own sites, and no robot consumed Net bandwidth. Its disadvantages, however, became more of a problem over time. The primary disadvantage was that a special indexing file had to be submitted. Most webmasters did not understand how to create such a file, so they did not submit their pages. This led to a relatively small database, which made users less likely to search ALIWEB than one of the large bot-based sites. This Catch-22 was somewhat offset by incorporating other databases into the ALIWEB search, but ALIWEB never gained the mass appeal of search engines such as Yahoo! or Lycos.<ref name="wileyhistory"/> | |||

| ===Excite=== | |||

| ], initially called Architext, was started by six Stanford undergraduates in February 1993. Their idea was to use statistical analysis of word relationships in order to provide more efficient searches through the large amount of information on the Internet. | |||

| Their project was fully funded by mid-1993. Once funding was secured, they released a version of their search software for webmasters to use on their own web sites. At the time, the software was called Architext; it was later renamed Excite for Web Servers.<ref name="wileyhistory"/> | |||

| Excite, launched in 1995, was the first serious commercial search engine.<ref>{{cite web|title=The Major Search Engines|url=http://www.pccua.edu/kholland/major_search_engines.htm|accessdate=1 June 2014|date=21 January 2014|archive-date=5 June 2014|archive-url=https://web.archive.org/web/20140605052335/http://www.pccua.edu/kholland/major_search_engines.htm|url-status=dead}}</ref> It was developed at Stanford and was bought by @Home for $6.5 billion. In 2001, Excite and @Home went bankrupt, and ] bought Excite for $10 million. | |||

| Some of the earliest analyses of web searching were conducted on search logs from Excite.<ref>Jansen, B. J., Spink, A., Bateman, J., and Saracevic, T. 1998. . SIGIR Forum, 32(1), 5–17.</ref><ref>Jansen, B. J., Spink, A., and Saracevic, T. 2000. . Information Processing & Management. 36(2), 207–227.</ref> | |||

| ===Yahoo!=== | |||

| In April 1994, two Stanford University Ph.D. candidates, ] and ], created a collection of web pages that became quite popular. They called the collection ]. Their official explanation for the name was that they considered themselves to be a pair of yahoos. | |||

| As the number of links grew and their pages began to receive thousands of hits a day, the team created ways to better organize the data. To aid data retrieval, Yahoo! (www.yahoo.com) became a searchable directory whose search feature was a simple database search engine. Because Yahoo! entries were entered and categorized manually, Yahoo! was generally considered a searchable directory rather than a search engine. Yahoo! has since automated some aspects of the gathering and classification process, blurring the distinction between engine and directory. | |||

| The Wanderer captured only URLs, which made it difficult to find things that were not explicitly described by their URL. Because URLs are often cryptic, this was of little help to the average user. Searching Yahoo! or the Galaxy was much more effective because they contained additional descriptive information about the indexed sites. | |||

| ===Lycos=== | |||

| At Carnegie Mellon University during July 1994, Michael Mauldin, on leave from CMU, developed the ] search engine. | |||

| ===Types of web search engines=== | |||

| Web search engines are sites that provide a facility for searching content stored on other sites. Search engines differ in how they work, but they all perform three basic tasks:<ref>{{cite book|author1=Priti Srinivas Sajja|author2=Rajendra Akerkar|title=Intelligent technologies for web applications|date=2012|publisher=CRC Press|location=Boca Raton|isbn=978-1-4398-7162-1|page=85|url=https://books.google.com/books?id=HqXxoWK7tucC&q=the+University+of+Nevada+System+Computing+Services+group+developed+Veronica.&pg=PA87|accessdate=3 June 2014}}</ref> | |||

| # Finding and selecting full or partial content based on the keywords provided. | |||

| # Maintaining an index of the content and references to the locations where it was found. | |||

| # Allowing users to look for words or combinations of words found in that index. | |||
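The second and third tasks can be illustrated with a toy inverted index, a data structure that maps each word to the pages containing it. In this minimal Python sketch, the page URLs and text are hypothetical. Gathering pages (the first task) is replaced by a hard-coded dictionary, tokenization is a simple lowercase word split, and no ranking is done. Real engines are far more elaborate.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages):
    """Task 2: map each word to the set of page URLs that contain it (an inverted index)."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index

def lookup(index, query):
    """Task 3: return the URLs containing every query word, sorted for stable output."""
    words = tokenize(query)
    if not words:
        return []
    results = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(results)

# Hypothetical pages standing in for crawled or submitted content (task 1).
pages = {
    "http://example.com/a": "Archie indexed FTP file names",
    "http://example.com/b": "Veronica searched Gopher menus",
    "http://example.com/c": "Archie and Veronica were early search tools",
}
index = build_index(pages)
print(lookup(index, "archie veronica"))
```

Only the third page mentions both words, so the query `"archie veronica"` returns `['http://example.com/c']`. The index is built once, ahead of time, so answering a query only requires intersecting a few precomputed sets rather than rescanning every page.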

| The process begins when a user enters a query statement into the system through the interface provided. | |||

| {| class="wikitable" | |||

| |- | |||

| ! Type | |||

| ! Example | |||

| ! Description | |||

| |- | |||

| | Conventional | |||

| | library catalog | |||

| | Search by keyword, title, author, etc. | |||

| |- | |||

| | Text-based | |||

| | Google, Bing, Yahoo! | |||

| | Search by keywords. Limited search using queries in natural language. | |||

| |- | |||

| | ] | |||

| | Google, Bing, Yahoo! | |||

| | Search by keywords. Limited search using queries in natural language. | |||

| |- | |||

| | ] | |||

| | QBIC, WebSeek, SaFe | |||

| | ] (shapes, colors, etc.) | |||

| |- | |||

| | Q/A | |||

| | ], NSIR | |||

| | Search in (restricted) natural language | |||

| |- | |||

| | Clustering Systems | |||

| | Vivisimo, Clusty | |||

| | | |||

| |- | |||

| | Research Systems | |||

| | Lemur, Nutch | |||

| | | |||

| |} | |||

| There are three basic types of search engines: those powered by robots (called ]s, ants, or spiders), those powered by human submissions, and hybrids of the two. | |||

| Web search engines work by storing information about a large number of ]s, which they retrieve from the WWW itself. These pages are retrieved by a ] (sometimes also known as a spider), an automated Web browser that follows every link it sees. Pages can be excluded from crawling by the use of ]. The contents of each page are then analyzed to determine how it should be ] (for example, words are extracted from the titles, headings, or special fields called ]). Data about web pages are stored in an index database for use in later queries. Some search engines, such as ], store all or part of the source page (referred to as a ]) as well as information about the web pages, whereas others, such as ], store every word of every page they find. A cached page always holds the text that was actually indexed, so it can be very useful when the current page has been updated and the search terms are no longer in it. This mismatch between a result and its updated page might be considered a mild form of ], and Google's handling of it increases ] by satisfying the ] that the search terms will be on the returned webpage; this is consistent with the ], since users normally expect the search terms to appear on the returned pages. Beyond increasing search relevance in this way, cached pages are also useful because they may contain data that is no longer available elsewhere. | |||
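A crawler that follows links while honoring robots.txt exclusions can be sketched with Python's standard `urllib.robotparser` module. To keep the sketch self-contained and offline, the "web" is a hypothetical in-memory dictionary mapping each URL to the links on that page, and the robots.txt rules are supplied as text. A real crawler would fetch both over HTTP (one robots.txt per host) and would also parse and index page contents. The user-agent name `ToyBot` is likewise made up. Tracking already-queued URLs means each page is fetched at most once.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical link graph standing in for the Web: URL -> links found on that page.
WEB = {
    "http://example.com/": ["http://example.com/about", "http://example.com/private/data"],
    "http://example.com/about": ["http://example.com/", "http://example.com/contact"],
    "http://example.com/contact": ["http://example.com/about"],
    "http://example.com/private/data": ["http://example.com/secret"],
}

# Hypothetical robots.txt for example.com: all crawlers are excluded from /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def crawl(seed, user_agent="ToyBot"):
    """Breadth-first crawl from seed, skipping URLs that robots.txt disallows."""
    robots = RobotFileParser()
    robots.parse(ROBOTS_TXT.splitlines())
    visited = []           # pages actually fetched, in crawl order
    seen = {seed}          # URLs already queued, so no page is fetched twice
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        if not robots.can_fetch(user_agent, url):
            continue       # excluded by the site owner; its links are never followed
        visited.append(url)
        for link in WEB.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited

print(crawl("http://example.com/"))
```

Starting from the home page, the crawler visits `/`, `/about`, and `/contact`. It skips `/private/data` because robots.txt disallows it, so `/secret`, which is reachable only through that page, is never discovered.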

| Crawler-based search engines use automated software agents, called crawlers, that visit a Web site, read the information on the site and its meta tags, and follow the links on the site to index the linked sites as well. The crawler returns all that information to a central repository, where the data is indexed. The crawler periodically returns to the sites to check for any information that has changed; how often this happens is determined by the search engine's administrators. | |||
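One simple way to implement administrator-chosen revisit frequencies is a priority queue keyed on each site's next due time. This Python sketch is an illustration under stated assumptions, not how any particular engine schedules crawls. The site names and intervals are hypothetical, and time is simulated in whole hours rather than read from a clock.

```python
import heapq

def revisit_schedule(intervals, horizon):
    """Simulate which sites a crawler revisits, and when, up to `horizon` hours.

    intervals maps a site to its revisit interval in hours (hypothetical,
    administrator-chosen values). Returns (hour, site) pairs in visit order.
    """
    # Heap entries are (next_due_hour, site); every site is first due at hour 0.
    heap = [(0, site) for site in intervals]
    heapq.heapify(heap)
    visits = []
    while heap and heap[0][0] <= horizon:
        due, site = heapq.heappop(heap)   # the site that is due soonest
        visits.append((due, site))
        # After visiting, schedule the next check one interval later.
        heapq.heappush(heap, (due + intervals[site], site))
    return visits

schedule = revisit_schedule({"news.example": 6, "archive.example": 24}, horizon=24)
print(schedule)
```

Over a 24-hour window, the frequently changing `news.example` is checked at hours 0, 6, 12, 18, and 24, while `archive.example` is checked only at hours 0 and 24. Each interval is the single knob an administrator would tune.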

| When a user enters a ] into a search engine (typically by using ]s), the engine examines its ] and provides a listing of best-matching web pages according to its criteria, usually with a short summary containing the document's title and sometimes parts of the text. Most search engines support the use of the ] AND, OR, and NOT to refine the ]. Some search engines provide an advanced feature called ], which allows users to define the distance between keywords. | |||
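The Boolean operators AND, OR, and NOT correspond directly to set intersection, union, and difference over the sets of documents that contain each word. Proximity search additionally needs each word's positions within a document. This Python sketch uses a positional index over hypothetical documents. Its functions take already-parsed arguments rather than a query string, so they do not reflect any particular engine's query syntax.

```python
import re
from collections import defaultdict

def build_positional_index(docs):
    """Map word -> {doc_id: [positions of that word in the document]}."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, word in enumerate(re.findall(r"[a-z0-9]+", text.lower())):
            index[word][doc_id].append(pos)
    return index

def docs_with(index, word):
    """Set of ids of documents that contain the word."""
    return set(index.get(word, {}))

def near(index, a, b, max_distance):
    """Ids of documents where words a and b occur within max_distance words of each other."""
    hits = set()
    for doc_id in docs_with(index, a) & docs_with(index, b):
        if any(abs(i - j) <= max_distance
               for i in index[a][doc_id] for j in index[b][doc_id]):
            hits.add(doc_id)
    return hits

# Hypothetical documents used only for illustration.
docs = {
    1: "search engines rank web pages",
    2: "web directories are edited by humans",
    3: "a web search returns pages",
}
idx = build_positional_index(docs)
print(docs_with(idx, "search") & docs_with(idx, "web"))     # search AND web
print(docs_with(idx, "search") | docs_with(idx, "humans"))  # search OR humans
print(docs_with(idx, "web") - docs_with(idx, "search"))     # web NOT search
print(near(idx, "search", "web", 2))                        # search within 2 words of web
```

Documents 1 and 3 both contain "search" and "web", so they satisfy the AND query. Only in document 3 are the two words adjacent, so only document 3 passes the proximity test with a maximum distance of 2. In document 1 the words are three positions apart.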

| Human-powered search engines rely on humans to submit information that is subsequently indexed and catalogued. Only information that is submitted is put into the index. | |||

| The usefulness of a search engine depends on the ] of the '''result set''' it gives back. While there may be millions of webpages that include a particular word or phrase, some pages may be more relevant, popular, or authoritative than others. Most search engines employ methods to ] the results to provide the "best" results first. How a search engine decides which pages are the best matches, and what order the results should be shown in, varies widely from one engine to another. The methods also change over time as Internet usage changes and new techniques evolve. | |||
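As one concrete example of ranking, this Python sketch scores documents with TF-IDF. TF-IDF is a classic information-retrieval weighting in which a query word counts for more when it is frequent in a document but rare across the collection. It was chosen here only for illustration and is not claimed to be the ranking method of any engine named in this article. The documents are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def tfidf_rank(docs, query):
    """Rank document ids by the summed TF-IDF weight of the query words.

    tf  = occurrences of the word in the document / document length
    idf = log(number of documents / number of documents containing the word)
    """
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    doc_freq = Counter()
    for words in tokenized.values():
        doc_freq.update(set(words))   # count each word once per document
    scores = {}
    for doc_id, words in tokenized.items():
        counts = Counter(words)
        score = 0.0
        for term in tokenize(query):
            if counts[term]:          # skip words absent from this document
                tf = counts[term] / len(words)
                idf = math.log(n_docs / doc_freq[term])
                score += tf * idf
        scores[doc_id] = score
    # Highest score first; ties are broken by document id for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))

docs = {
    "a": "search engine search results",
    "b": "web directory edited by humans",
    "c": "search the web",
}
print(tfidf_rank(docs, "search engine"))
```

For the query "search engine", document `a` ranks first: it uses "search" twice and is the only document with the rarer word "engine". Document `c` comes next, and document `b`, which matches neither word, comes last.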

| Most Web search engines are commercial ventures supported by ] revenue and, as a result, some employ the controversial practice of allowing advertisers to pay to have their listings ranked higher in search results. Search engines that do not accept payment for their search results instead earn money by running search-related ads alongside the regular results, and are paid each time someone clicks on one of these ads. | |||

| In both cases, a query to a search engine searches the index that the search engine has created, not the Web itself. These indices are giant databases of information that has been collected and stored so that it can be searched. This explains why a search on a commercial search engine, such as Yahoo! or Google, sometimes returns dead links: because results come from the index, a page that became invalid after the index was last updated is still treated as an active link, and it remains so until the index is updated. | |||

| The vast majority of search engines are run by private companies using proprietary algorithms and closed databases, though ] are open source.{{Fact|date=February 2007}} | |||

| Why does the same search produce different results on different search engines? Part of the answer is that their indices are not identical: each depends on what its spiders found or what humans submitted. More importantly, not every search engine uses the same algorithm to search through its index. The algorithm is what a search engine uses to determine the ] of the information in the index to what the user is searching for. | |||

| ==Storage costs and crawling time== | |||

| {{Original research|date=September 2007}} | |||

| <!-- this section is rather naive... --> | |||

| Storage costs are not the limiting resource in search engine implementation. Simply storing 10 billion pages of 10 KB each (compressed) requires 100 TB, and the indexes need roughly another 100 TB, for a total hardware cost of under $200k: 100 cheap PCs, each with four 500 GB disk drives.{{Fact|date=February 2007}} | |||
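The storage figures can be checked with simple arithmetic. This worked calculation assumes decimal units (1 KB = 10<sup>3</sup> bytes, 1 GB = 10<sup>9</sup> bytes, 1 TB = 10<sup>12</sup> bytes) and uses only the page count, page size, and disk configuration given in the estimate.

```latex
\[
\begin{aligned}
\text{page storage} &= 10^{10}\ \text{pages} \times 10\,\text{KB} = 10^{11}\,\text{KB} = 10^{14}\,\text{bytes} = 100\,\text{TB} \\
\text{pages} + \text{indexes} &\approx 100\,\text{TB} + 100\,\text{TB} = 200\,\text{TB} \\
\text{disk capacity} &= 100\ \text{PCs} \times 4\ \text{drives} \times 500\,\text{GB} = 2 \times 10^{5}\,\text{GB} = 200\,\text{TB}
\end{aligned}
\]
```

The proposed disks therefore roughly match the estimated storage requirement for pages plus indexes. With binary units the totals shift only slightly.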