| Revision as of 11:38, 9 July 2007 editKSmrq (talk | contribs)5,323 edits →Lebesgue Integral: remove non sequitur← Previous edit | Revision as of 13:43, 9 July 2007 edit undoLoom91 (talk | contribs)2,505 edits Add a paragraph to the lead, various small tweaks, reorganise sectionsNext edit → | ||

| Line 1: | Line 1: | ||

| {{dablink|This article is about the concept of integrals in ]. For other meanings, see ] and ].}} | {{dablink|This article is about the concept of integrals in ]. For other meanings, see ] and ].}} | ||

| .]] | .]] | ||

| '''Integral''' is a core concept of advanced ], specifically, in the fields of ] and ]. Given a ] ''f''(''x'') of a real ] ''x'' and an ] <nowiki></nowiki> of the ], the integral | '''Integral''' is a core concept of advanced ], specifically, in the fields of ] and ]. Given a ] ''f''(''x'') of a real ] ''x'' and an ] <nowiki></nowiki> of the ], the integral (in its most basic form) | ||

| : <math>\int_a^b f(x)dx </math> | : <math>\int_a^b f(x)dx </math> | ||

| is equal to the ] of a region in the ''xy''-plane bounded by the ] of ''f'', the ''x''-axis, and the vertical lines ''x''=''a'' and ''x''=''b''. | |||

| The idea of integration was formulated in the late seventeenth century by ] and ]. Together with the concept of a ], integral |

The concept of adding together discrete quantities has existed from a very early point of human civilisation. Later this was extended to the concept of infinite sums, but nature is essentially continuous and a general method of dealing with this continuity was lacking. The idea of integration was formulated in the late seventeenth century by Isaac Newton and Gottfried Wilhelm Leibniz. Together with the concept of a derivative, the integral became one of the basic tools of calculus. These concepts could deal in a natural and powerful way with continuous change. They revolutionised science and today are used in almost every branch of the physical sciences, mathematics, and computer science. Integrals usually provide, in some form, a measure of the total of a quantity that varies continuously across a domain. | ||

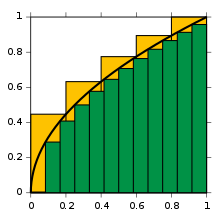

| The first rigorous mathematical definition of the integral was developed by Bernhard Riemann. It is based on a limiting procedure that refines the geometric technique of approximating the area of a curvilinear region by breaking the region into successively thinner vertical slabs (see method of exhaustion). While this definition is adequate for most ordinary functions, many special types of functions cannot be covered by it. Beginning in the nineteenth century, more sophisticated notions of integral began to appear, in which the type of function as well as the domain over which the integration is performed were greatly generalised. Apart from generalisations of the Riemann integral to higher dimensions (see vector calculus), the most important fundamental development was a far more general and powerful definition, the Lebesgue integral, developed by Henri Lebesgue, which found use in probability theory. The development of differential geometry was another fundamental leap, with wide applications in modern physics. | |||

| Integrals of ]s play a fundamental role in modern ]. These generalizations of integral first arose from the needs of ], and they play an important role in the formulation of many physical laws, notably, in ]. An abstract mathematical theory known as ] was developed by ]. | |||

| {{TOCleft}} | {{TOCleft}} | ||

| {{Calculus}} | {{Calculus}} | ||

| The term "integral" may also refer to the notion of ], a function ''F'' whose derivative is the given function ''f''. In this case it is called an indefinite integral, while the integrals discussed in this article are termed definite integrals. The ] |

The term "integral" may also refer to the notion of ], a function ''F'' whose derivative is the given function ''f''. In this case it is called an indefinite integral, while the integrals discussed in this article are termed definite integrals. The ] in its various forms relates definite integrals with derivatives. Some authors, for example ], maintain a distinction between antiderivatives and indefinite integrals. | ||

| <!--- Edited out because of technical nature (for the lead) as well as poor placing with the surrounding boxes | |||

| That is, if ''F''′(''x'') = ''f''(''x''), where ''F''′ denotes the ] of ''F'' with respect to ''x'', then ''F'' is the indefinite integral of ''f'', | |||

| ⚫ | :<math> |

||

| and the definite integral from ''a'' to ''b'' is | |||

| :<math> \int_a^b f(x) \, dx = F(b) - F(a) . </math> | |||

| ---> | |||

| ⚫ | == History == | ||

| ⚫ | {{main|History of calculus}} | ||

| ⚫ | === Pre-calculus integration === | ||

| ⚫ | Integration can be traced as far back as ancient Egypt, ''circa'' 1800 BC, with the ] demonstrating knowledge of a formula for the volume of a ]al ]. The first documented systematic technique capable of determining integrals is the ] of ] (''circa'' 370 BC), which sought to find areas and volumes by breaking them up into an infinite number of shapes for which the area or volume was known. This method was further developed and employed by ] and used to calculate areas for parabolas and an approximation to the area of a circle. Similar methods were independently developed in China around the 3rd Century AD by ], who used it to find the area of the circle. This method was later used by ] to find the volume of a sphere. | ||

| ⚫ | Significant advances on techniques such as the method of exhaustion did not begin to appear until the 16th Century AD. At this time the work of ] with his ''method of indivisibles'', and work by ], began to lay the foundations of modern calculus. Further steps were made in the early 17th Century by ] and ], who provided the first hints of a connection between integration and ]. | ||

| ⚫ | === Newton and Leibniz === | ||

| ⚫ | The major advance in integration came in the 17th Century with the independent discovery of the ] by ] and ]. The theorem demonstrates a connection between integration and differentiation. This connection, combined with the comparative ease of differentiation, can be exploited to calculate integrals. In particular, the fundamental theorem of calculus allows one to solve a much broader class of problems. Equal in importance is the comprehensive mathematical framework that both Newton and Leibniz developed. Given the name infinitesimal calculus, it allowed for precise analysis of functions within continuous domains. This framework eventually became modern ], whose notation for integrals is drawn directly from the work of Leibniz. | ||

| <!--- Please, do not remove: helpful for verification | |||

| The last sentence originally said 'work of Newton and Leibniz', but for integrals, only Leibniz's notation is used. | |||

| ---> | |||

| ⚫ | === Formalising integrals === | ||

| ⚫ | While Newton and Leibniz provided systematic approach to integration, their work lacked a degree of rigour. ] memorably attacked ]s as "the ghosts of departed quantity". Calculus acquired a firmer footing with the development of ] and was given a suitable foundation by ] in the first half of the 19th century. Integration was first rigorously formalised, using limits, by ]. Although all continuous functions on a closed and bounded interval are Riemann integrable, subsequently more general functions were considered, to which Riemann's definition does not apply, and ] formulated a different definition of integral, founded in ]. Other definitions of integral, extending Riemann's and Lebesgue's approaches, were proposed. | ||

| ⚫ | === Notation === | ||

| ⚫ | ] used a small vertical bar above a variable to indicate integration, or placed the variable inside a box. The vertical bar was easily confused with <math>\dot{x}</math> or <math>x'\,\!</math>, which Newton used to indicate differentiation, and the box notation was difficult for printers to reproduce, so these notations were not widely adopted. | ||

| ⚫ | The modern notation for the indefinite integral was introduced by ] in 1675 {{Harv|Burton|1988|loc=p. 359}}{{Harv|Leibniz|1899|loc=p. 154}}. He derived the integral symbol, "∫", from an ], standing for ''summa'' (Latin for "sum" or "total"). The modern notation for the definite integral, with limits above and below the integral sign, was first used by ] in ''Mémoires'' of the French Academy around 1819–20, reprinted in his book of 1822 {{Harv|Cajori|1929|loc=pp. 249–250}}{{Harv|Fourier|1822|loc=§231}}. | ||

| ⚫ | In ] which is written from right to left, an inverted integral symbol ] is used {{Harv|W3C|2006}}. | ||

| ⚫ | == Terminology and notation == | ||

| ⚫ | If a function has an integral, it is said to be '''integrable'''. The function for which the integral is calculated is called the '''integrand'''. The region over which a function is being integrated is called the '''domain of integration'''. In general the integrand may be a function of more than one variable, and the domain of integration may be an area, volume, a higher dimensional region, or even an abstract space that does not have a geometric structure in any usual sense. | ||

| ⚫ | The simplest case, the integral of a real-valued function ''f'' of one real variable ''x'' on the interval , is denoted by | ||

| :<math>\int_a^b f(x)\,dx . </math> | |||

| ⚫ | The ∫ sign, an elongated "S", represents integration; ''a'' and ''b'' are the ''lower limit'' and ''upper limit'' of integration, defining the domain of integration; ''f'' is the integrand, to be evaluated as ''x'' varies over the interval ; and ''dx'' can be seen as merely a notation indicating that ''x'' is the integration variable, or as a reflection of the weights in the |

||

| == Introduction == | == Introduction == | ||

| Line 70: | Line 34: | ||

| Although there are differences between these conceptions of integral, there is considerable overlap. Thus the area of the surface of the oval swimming pool can be handled as a geometric ellipse, as a sum of infinitesimals, as a Riemann integral, as a Lebesgue integral, or as a manifold with a differential form. The calculated result will be the same for all. | Although there are differences between these conceptions of integral, there is considerable overlap. Thus the area of the surface of the oval swimming pool can be handled as a geometric ellipse, as a sum of infinitesimals, as a Riemann integral, as a Lebesgue integral, or as a manifold with a differential form. The calculated result will be the same for all. | ||

| ⚫ | == Terminology and notation == | ||

| ⚫ | If a function has an integral, it is said to be '''integrable'''. The function for which the integral is calculated is called the '''integrand'''. The region over which a function is being integrated is called the '''domain of integration'''. In general the integrand may be a function of more than one variable, and the domain of integration may be an area, volume, a higher dimensional region, or even an abstract space that does not have a geometric structure in any usual sense. | ||

| ⚫ | The simplest case, the integral of a real-valued function ''f'' of one real variable ''x'' on the interval [''a'', ''b''], is denoted by | ||

| ⚫ | :<math>\int_a^b f(x)\,dx . </math> | ||

| ⚫ | The ∫ sign, an elongated "S", represents integration; ''a'' and ''b'' are the ''lower limit'' and ''upper limit'' of integration, defining the domain of integration; ''f'' is the integrand, to be evaluated as ''x'' varies over the interval [''a'', ''b'']; and ''dx'' can have different interpretations depending on the theory being used. It can be seen as merely a notation indicating that ''x'' is the integration variable, or as a reflection of the weights in the Riemann sum, a measure (in Lebesgue integration and its extensions), an infinitesimal (in non-standard analysis) or as an independent mathematical quantity: a differential form. More complicated cases may vary the notation slightly. | ||

| == Formal definitions == | == Formal definitions == | ||

| Line 129: | Line 100: | ||

| === Other Integrals === | === Other Integrals === | ||

| Although the Riemann and Lebesgue integrals are the most |

Although the Riemann and Lebesgue integrals are the most commonly used definitions of the integral, a number of others have been developed, including: | ||

| * The ], an extension of the Riemann integral. | * The ], an important extension of the Riemann integral useful in physics. | ||

| * The ], further developed by ], which generalizes the ] and ]s. | * The ], further developed by ], which generalizes the ] and ]s. | ||

| * The ], which subsumes the ] and ] without the dependence on ]s. | * The ], which subsumes the ] and ] without the dependence on ]s. | ||

| Line 136: | Line 107: | ||

| <!--* The ], equivalent to the Riemann integral.--> | <!--* The ], equivalent to the Riemann integral.--> | ||

| <!--* The ], which is the Lebesgue integral with ].--> | <!--* The ], which is the Lebesgue integral with ].--> | ||

| ⚫ | == History == | ||

| ⚫ | {{main|History of calculus}} | ||

| ⚫ | === Pre-calculus integration === | ||

| ⚫ | Integration can be traced as far back as ancient Egypt, ''circa'' 1800 BC, with the ] demonstrating knowledge of a formula for the volume of a ]al ]. The first documented systematic technique capable of determining integrals is the ] of ] (''circa'' 370 BC), which sought to find areas and volumes by breaking them up into an infinite number of shapes for which the area or volume was known. This method was further developed and employed by ] and used to calculate areas for parabolas and an approximation to the area of a circle. Similar methods were independently developed in China around the 3rd Century AD by ], who used it to find the area of the circle. This method was later used by ] to find the volume of a sphere. | ||

| ⚫ | Significant advances on techniques such as the method of exhaustion did not begin to appear until the 16th Century AD. At this time the work of ] with his ''method of indivisibles'', and work by ], began to lay the foundations of modern calculus. Further steps were made in the early 17th Century by ] and ], who provided the first hints of a connection between integration and ]. | ||

| ⚫ | === Newton and Leibniz === | ||

| ⚫ | The major advance in integration came in the 17th Century with the independent discovery of the ] by ] and ]. The theorem demonstrates a connection between integration and differentiation. This connection, combined with the comparative ease of differentiation, can be exploited to calculate integrals. In particular, the fundamental theorem of calculus allows one to solve a much broader class of problems. Equal in importance is the comprehensive mathematical framework that both Newton and Leibniz developed. Given the name infinitesimal calculus, it allowed for precise analysis of functions within continuous domains. This framework eventually became modern ], whose notation for integrals is drawn directly from the work of Leibniz. Their revolutionary work paved the way for later-day physics. In fact a common legend holds that Newton developed integration so that he could prove some very important results in his theory of gravitation (specifically, that the gravitational field of a spherically symmetric mass distribution is identical to that of a point mass placed at its center). | ||

| ⚫ | === Formalising integrals === | ||

| ⚫ | While Newton and Leibniz provided systematic approach to integration, their work lacked a degree of rigour. ] memorably attacked ]s as "the ghosts of departed quantity". Calculus acquired a firmer footing with the development of ] and was given a suitable foundation by ] in the first half of the 19th century. Integration was first rigorously formalised, using limits, by ]. Although all piecewise continuous functions on a closed and bounded interval are Riemann integrable, subsequently more general functions were considered, to which Riemann's definition does not apply, and ] formulated a different definition of integral, founded in ]. Other definitions of integral, extending Riemann's and Lebesgue's approaches, were proposed. | ||

| ⚫ | === Notation === | ||

| ⚫ | ] used a small vertical bar above a variable to indicate integration, or placed the variable inside a box. The vertical bar was easily confused with <math>\dot{x}</math> or <math>x'\,\!</math>, which Newton used to indicate differentiation, and the box notation was difficult for printers to reproduce, so these notations were not widely adopted. | ||

| ⚫ | The modern notation for the indefinite integral was introduced by ] in 1675 {{Harv|Burton|1988|loc=p. 359}}{{Harv|Leibniz|1899|loc=p. 154}}. He derived the integral symbol, "∫", from an ], standing for ''summa'' (Latin for "sum" or "total"). Just like the notation for derivatives, Leibniz's notation proved to be more easily extendable to more modern theories (see ]). The modern notation for the definite integral, with limits above and below the integral sign, was first used by ] in ''Mémoires'' of the French Academy around 1819–20, reprinted in his book of 1822 {{Harv|Cajori|1929|loc=pp. 249–250}}{{Harv|Fourier|1822|loc=§231}}. | ||

| ⚫ | In ] which is written from right to left, an inverted integral symbol ] is used {{Harv|W3C|2006}}. | ||

| ==Properties of the integral== | ==Properties of the integral== | ||

| In this section, ''f'' and ''g'' are ]valued integrable ]s defined on the real interval , where ''a'' ≤ ''b''. | In this section, ''f'' and ''g'' are ]valued integrable ]s defined on the real interval , where ''a'' ≤ ''b''. This section discusses properties general to all definitions of the integral. | ||

| ===Functional=== | |||

| An integral is essentially a linear ], a mapping from the space of integrable functions onto a scalar field (usually the real or the complex numbers). It can also be treated as an ] in a vector space composed of the integrable functions (see ] for an example of a complex inner-product vector space with an orthogonal basis). | |||

| ===Linearity=== | ===Linearity=== | ||

| Line 293: | Line 287: | ||

| where ω is a general ''k''-form, and ∂Ω denotes the ] of the region Ω. Thus in the case that ω is a 0-form and Ω is a closed interval of the real line, this reduces to the ]. In the case that ω is a 1-form and Ω is a 2-dimensional region in the plane, the theorem reduces to ]. Similarly, using 2-forms, and 3-forms and ], we can arrive at ] and the ]. In this way we can see that differential forms provide a powerful unifying view of integration. | where ω is a general ''k''-form, and ∂Ω denotes the ] of the region Ω. Thus in the case that ω is a 0-form and Ω is a closed interval of the real line, this reduces to the ]. In the case that ω is a 1-form and Ω is a 2-dimensional region in the plane, the theorem reduces to ]. Similarly, using 2-forms, and 3-forms and ], we can arrive at ] and the ]. In this way we can see that differential forms provide a powerful unifying view of integration. | ||

| == |

== Techniques and applications == | ||

| === Computing integrals === | === Computing integrals === | ||

| The most basic technique for computing integrals of one real variable is based on the ]. It proceeds like this: | The most basic technique for computing integrals of one real variable is based on the ]. It proceeds like this: | ||

| Line 329: | Line 323: | ||

| === Numerical quadrature === | === Numerical quadrature === | ||

| <!-- This section is disproportionately large, it should be summarised --> | |||

| {{main|numerical integration}} | {{main|numerical integration}} | ||

Revision as of 13:43, 9 July 2007

This article is about the concept of integrals in calculus. For other meanings, see integration and integral (disambiguation).

Integral is a core concept of advanced mathematics, specifically, in the fields of calculus and mathematical analysis. Given a function f(x) of a real variable x and an interval [a, b] of the real line, the integral (in its most basic form)

:<math>\int_a^b f(x)\,dx</math>

is equal to the area of a region in the xy-plane bounded by the graph of f, the x-axis, and the vertical lines x=a and x=b.

The concept of adding together discrete quantities has existed from a very early point of human civilisation. Later this was extended to the concept of infinite sums, but nature is essentially continuous and a general method of dealing with this continuity was lacking. The idea of integration was formulated in the late seventeenth century by Isaac Newton and Gottfried Wilhelm Leibniz. Together with the concept of a derivative, the integral became one of the basic tools of calculus. These concepts could deal in a natural and powerful way with continuous change. They revolutionised science and today are used in almost every branch of the physical sciences, mathematics, and computer science. Integrals usually provide, in some form, a measure of the total of a quantity that varies continuously across a domain.

The first rigorous mathematical definition of the integral was developed by Bernhard Riemann. It is based on a limiting procedure that refines the geometric technique of approximating the area of a curvilinear region by breaking the region into successively thinner vertical slabs (see method of exhaustion). While this definition is adequate for most ordinary functions, many special types of functions cannot be covered by it. Beginning in the nineteenth century, more sophisticated notions of integral began to appear, in which the type of function as well as the domain over which the integration is performed were greatly generalised. Apart from generalisations of the Riemann integral to higher dimensions (see vector calculus), the most important fundamental development was a far more general and powerful definition, the Lebesgue integral, developed by Henri Lebesgue, which found use in probability theory. The development of differential geometry was another fundamental leap, with wide applications in modern physics.


The term "integral" may also refer to the notion of antiderivative, a function F whose derivative is the given function f. In this case it is called an indefinite integral, while the integrals discussed in this article are termed definite integrals. The fundamental theorem of calculus in its various forms relates definite integrals with derivatives. Some authors, for example Tom Apostol, maintain a distinction between antiderivatives and indefinite integrals.

Introduction

Integrals thrust themselves upon us in many practical situations. Consider a swimming pool. If it is rectangular, then from its length, width, and depth we can easily determine the volume of water it can contain (to fill it), the area of its surface (to cover it), and the length of its edge (to rope it). But if it is oval with a rounded bottom, all of these quantities call for integrals. Practical approximations may suffice at first, but eventually we demand exact and rigorous answers to such problems.

Measuring depends on counting, with the unit of measurement as a key link. For example, recall the famous theorem in geometry which says that if a, b, and c are the side lengths of a right triangle, with c the longest, then a² + b² = c². If we take the side of a square as one unit, its diagonal is √2 units long, a measurement which is greater than one and less than two. If we take the side as five units, the diagonal is at least seven units long, but still not perfectly measured. A side of 12 units will have a diagonal of about 17 units, but slightly less. In fact, no choice of unit that exactly counts off the side length can ever give an exact count for the diagonal length. Thus the real number system is born, permitting an exact answer with no trace of approximating counts. It is as if the units are infinitely fine.

So it is with integrals. For example, consider the curve y = f(x) between x = 0 and x = 1, with f(x) = √x. In the simpler case where y = 1, the region under the "curve" would be a unit square, so its area would be exactly 1. As it is, the area must be somewhat less. A smaller "unit of measure" should do better; so cross the interval in five steps, from 0 to 1/5, from 1/5 to 2/5, and so on to 1. Fit a box for each step using the right end height of each curve piece, thus √(1/5), √(2/5), and so on to √1 = 1. The total area of these boxes is about 0.7497; but we can easily see that this is still too large. Using 12 steps in the same way, but with the left end height of each piece, yields about 0.6203, which is too small. As before, using more steps produces a closer approximation, but will never be exact. Thus the integral is born, permitting an exact answer of 2/3, with no trace of approximating sums. It is as if the steps are infinitely fine (infinitesimal).
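The box sums for f(x) = √x on the interval from 0 to 1 can be reproduced with a few lines of Python. This is only an illustrative sketch; the helper name `step_sum` and its parameters are assumptions introduced here, not part of the article.

```python
import math

def step_sum(f, a, b, n, use_right_end):
    """Total area of n equal-width boxes under f on [a, b].

    Each box takes its height from the right end of its step when
    use_right_end is True, otherwise from the left end.
    """
    width = (b - a) / n
    offset = 1 if use_right_end else 0
    return sum(f(a + (k + offset) * width) * width for k in range(n))

# Five steps with right-end heights: an overestimate for an increasing function.
right_5 = step_sum(math.sqrt, 0.0, 1.0, 5, True)
# Twelve steps with left-end heights: an underestimate.
left_12 = step_sum(math.sqrt, 0.0, 1.0, 12, False)
# Many steps: the sum approaches the exact value 2/3.
fine = step_sum(math.sqrt, 0.0, 1.0, 100_000, True)

print(round(right_5, 4), round(left_12, 4), round(fine, 4))
```

The first two printed values should round to the 0.7497 and 0.6203 quoted in the text, and the exact area 2/3 lies between them.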

The most powerful new insight of Newton and Leibniz was that, under suitable conditions, the value of an integral over a region can be determined by looking at the region's boundary alone. For example, the surface area of the pool can be determined by an integral along its edge. This is the essence of the fundamental theorem of calculus. Applied to the square root curve, it says to look at the related function F(x) = (2/3)x^(3/2), and simply take F(1)−F(0), where 0 and 1 are the boundaries of the interval [0, 1]. (This is an example of a general rule, that for f(x) = x^q, with q ≠ −1, the related function is F(x) = x^(q+1)/(q+1).)
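As a worked check of the power rule for the square root curve, take q = 1/2. The derivation below is an added illustration, written out under the usual assumption that F is differentiable on the open interval:

```latex
\[
f(x) = x^{1/2}, \qquad
F(x) = \frac{x^{1/2+1}}{1/2+1} = \tfrac{2}{3}\,x^{3/2}, \qquad
F'(x) = \tfrac{2}{3}\cdot\tfrac{3}{2}\,x^{1/2} = f(x),
\]
\[
\int_0^1 \sqrt{x}\,dx = F(1) - F(0) = \tfrac{2}{3} - 0 = \tfrac{2}{3}.
\]
```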

In Leibniz's own notation, so well chosen it is still common today, the meaning of

:<math>\int f(x)\,dx</math>

was conceived as a weighted sum (denoted by the elongated "S"), with function values (such as the heights, y = f(x)) multiplied by infinitesimal step widths (denoted by dx). After the failure of early efforts to rigorously define infinitesimals, Riemann formally defined integrals as a limit of ordinary weighted sums, so that the dx suggested the limit of a difference (namely, the interval width). Shortcomings of Riemann's dependence on intervals and continuity motivated newer definitions, especially the Lebesgue integral, which is founded on an ability to extend the idea of "measure" in much more flexible ways. Thus the notation

:<math>\int_A f(x)\,d\mu</math>

refers to a weighted sum in which the function values are partitioned, with μ measuring the weight to be assigned to each value. (Here A denotes the region of integration.) Differential geometry, with its "calculus on manifolds", gives the familiar notation yet another interpretation. Now f(x) and dx meld together to become something new, a differential form, ω = f(x)dx; a differential operator d appears; and the fundamental theorem evolves into Stokes' theorem,

:<math>\int_{\Omega} d\omega = \int_{\partial\Omega} \omega .</math>

More recently, infinitesimals have reappeared with rigor, through modern innovations such as non-standard analysis. Not only do these methods vindicate the intuitions of the pioneers, they also lead to new mathematics.

Although there are differences between these conceptions of integral, there is considerable overlap. Thus the area of the surface of the oval swimming pool can be handled as a geometric ellipse, as a sum of infinitesimals, as a Riemann integral, as a Lebesgue integral, or as a manifold with a differential form. The calculated result will be the same for all.

Terminology and notation

If a function has an integral, it is said to be integrable. The function for which the integral is calculated is called the integrand. The region over which a function is being integrated is called the domain of integration. In general the integrand may be a function of more than one variable, and the domain of integration may be an area, volume, a higher dimensional region, or even an abstract space that does not have a geometric structure in any usual sense.

The simplest case, the integral of a real-valued function f of one real variable x on the interval [a, b], is denoted by

:<math>\int_a^b f(x)\,dx .</math>

The ∫ sign, an elongated "S", represents integration; a and b are the lower limit and upper limit of integration, defining the domain of integration; f is the integrand, to be evaluated as x varies over the interval [a, b]; and dx can have different interpretations depending on the theory being used. It can be seen as merely a notation indicating that x is the integration variable, as a reflection of the weights in the Riemann sum, as a measure (in Lebesgue integration and its extensions), as an infinitesimal (in non-standard analysis), or as an independent mathematical quantity: a differential form. More complicated cases may vary the notation slightly.

Formal definitions

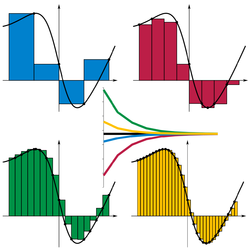

There are many ways of formally defining an integral, not all of which are equivalent. The differences exist mostly to deal with differing special cases which may not be integrable under other definitions, but also occasionally for pedagogical reasons. The most commonly used definitions of integral are Riemann integrals and Lebesgue integrals.

Riemann Integral

Main article: Riemann integral

The Riemann integral is defined in terms of Riemann sums of functions with respect to tagged partitions of an interval. Let [a, b] be a closed interval of the real line; then a tagged partition of [a, b] is a finite sequence

:<math> a = x_0 \le t_1 \le x_1 \le t_2 \le x_2 \le \cdots \le x_{n-1} \le t_n \le x_n = b . </math>

This partitions the interval [a, b] into n sub-intervals <math>[x_{i-1}, x_i]</math>, each of which is "tagged" with a distinguished point <math>t_i \in [x_{i-1}, x_i]</math>. Let <math>\Delta_i = x_i - x_{i-1}</math> be the width of sub-interval i; then the mesh of such a tagged partition is the width of the largest sub-interval formed by the partition, <math>\max_{i=1\ldots n} \Delta_i</math>. A Riemann sum of a function f with respect to such a tagged partition is defined as

:<math>\sum_{i=1}^{n} f(t_i) \Delta_i ;</math>

thus each term of the sum is the area of a rectangle with height equal to the function value at the distinguished point of the given sub-interval, and width the same as the sub-interval width. The Riemann integral of a function f over the interval [a, b] is equal to S if:

- For all ε > 0 there exists δ > 0 such that, for any tagged partition with mesh less than δ, we have

:<math>\left| S - \sum_{i=1}^{n} f(t_i)\Delta_i \right| < \varepsilon .</math>

When the chosen tags give the maximum (respectively, minimum) value of each interval, the Riemann sum becomes an upper (respectively, lower) Darboux sum, suggesting the close connection between the Riemann integral and the Darboux integral.
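A Riemann sum over a tagged partition, that is, the sum of f(tᵢ) times the sub-interval width, can be sketched in Python as follows. The function names `riemann_sum` and `mesh`, the test function x², and the choice of 1000 equal sub-intervals are all assumptions made for illustration. Because x² is increasing on [0, 1], tagging each sub-interval at its right or left end gives the upper or lower Darboux sum.

```python
def riemann_sum(f, xs, ts):
    """Sum of f(t_i) * (x_i - x_{i-1}) over a tagged partition.

    xs holds the partition points x_0 <= x_1 <= ... <= x_n and
    ts holds the n tags, with x_{i-1} <= t_i <= x_i.
    """
    if len(ts) != len(xs) - 1:
        raise ValueError("need exactly one tag per sub-interval")
    total = 0.0
    for i, t in enumerate(ts, start=1):
        lo, hi = xs[i - 1], xs[i]
        if not lo <= t <= hi:
            raise ValueError("tag lies outside its sub-interval")
        total += f(t) * (hi - lo)
    return total

def mesh(xs):
    """Width of the largest sub-interval of the partition."""
    return max(hi - lo for lo, hi in zip(xs, xs[1:]))

def square(x):
    return x * x

n = 1000
xs = [i / n for i in range(n + 1)]                   # equal sub-intervals of [0, 1]
mid_tags = [(lo + hi) / 2 for lo, hi in zip(xs, xs[1:])]

approx = riemann_sum(square, xs, mid_tags)           # tags at midpoints
upper = riemann_sum(square, xs, xs[1:])              # tags at right ends
lower = riemann_sum(square, xs, xs[:-1])             # tags at left ends

print(mesh(xs), lower, approx, upper)
```

As the mesh shrinks, all three sums close in on the same value S = 1/3, which is what the ε–δ condition above requires of every choice of tags.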

Lebesgue Integral

Main article: Lebesgue integral

The Riemann integral is not defined for a wide range of functions and situations of importance in applications (and of interest in theory). For example, the Riemann integral can easily integrate density to find the mass of a steel beam, but cannot accommodate a steel ball resting on it. This motivates other definitions, under which a broader assortment of functions is integrable (Rudin 1987). The Lebesgue integral, in particular, achieves great flexibility by directing attention to the weights in the weighted sum.

The definition of the Lebesgue integral thus begins with a measure, μ. In the simplest case, the Lebesgue measure μ(A) of an interval A = [a, b] is its width, b−a, so that the Lebesgue integral agrees with the (proper) Riemann integral when both exist. In more complicated cases, the sets being measured can be highly fragmented, with no continuity and no resemblance to intervals.

To exploit this flexibility, Lebesgue integrals reverse the approach to the weighted sum. As Folland (1984, p. 56) puts it, "To compute the Riemann integral of f, one partitions the domain into subintervals", while in the Lebesgue integral, "one is in effect partitioning the range of f".

One common approach first defines the integral of the indicator function of a measurable set A by:

- .

This extends by linearity to a measurable simple function s, which attains only a finite number, n, of distinct non-negative values:

(where the image of Ai under the simple function s is the constant value ai). Thus if E is a measurable set one defines

Then for any non-negative measurable function f one defines

that is, the integral of f is set to be the supremum of all the integrals of simple functions that are less than or equal to f. A general measurable function f, is split into its positive and negative values by defining

Finally, f is Lebesgue integrable if

$$\int_E |f| \, d\mu < \infty,$$

and then the integral is defined by

$$\int_E f \, d\mu = \int_E f^+ \, d\mu - \int_E f^- \, d\mu.$$

When the measure space on which the functions are defined is also a locally compact topological space (as is the case with the real numbers R), measures compatible with the topology in a suitable sense (Radon measures, of which the Lebesgue measure is an example) and integral with respect to them can be defined differently, starting from the integrals of continuous functions with compact support. More precisely, the compactly supported functions form a vector space that carries a natural topology, and a (Radon) measure can be defined as any continuous linear functional on this space; the value of a measure at a compactly supported function is then also by definition the integral of the function. One then proceeds to expand the measure (the integral) to more general functions by continuity, and defines the measure of a set as the integral of its indicator function. This is the approach taken by Bourbaki (2004) and a certain number of other authors. For details see Radon measures.
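The simple-function step of the construction, and Folland's remark about partitioning the range, can be illustrated numerically. The following Python sketch is illustrative only: the helper names `integrate_simple` and `lower_lebesgue_sum`, the example function f(x) = x² on [0, 1], and the evenly spaced levels are assumptions chosen for the example, not taken from the cited sources. It uses the fact that for this f the set {x : f(x) ≥ y} is the interval [√y, 1], whose Lebesgue measure is 1 − √y.

```python
import math

def integrate_simple(terms):
    """Integral of a simple function s = sum_i a_i * 1_{A_i}.

    `terms` is a list of (a_i, mu_A_i) pairs: the value a_i taken on
    the set A_i, and the measure mu(A_i) of that set.
    """
    return sum(a * mu for a, mu in terms)

# s = 2 on [0, 1) and s = 5 on [1, 3); the measure of an interval is its width.
simple_integral = integrate_simple([(2.0, 1.0 - 0.0), (5.0, 3.0 - 1.0)])

def lower_lebesgue_sum(levels):
    """Integral of the simple function s = floor(levels * f) / levels
    for the assumed example f(x) = x**2 on [0, 1].

    s lies below f and equals (1/levels) * sum_k 1_{f >= k/levels},
    so its integral is a sum over slices of the *range* of f.
    """
    step = 1.0 / levels
    total = 0.0
    for k in range(1, levels + 1):
        y = k * step
        measure = 1.0 - math.sqrt(y)  # mu({x in [0, 1] : x**2 >= y})
        total += step * measure
    return total

approx = lower_lebesgue_sum(1024)
print(simple_integral, approx)
```

Increasing `levels` pushes the lower sum up towards 1/3, the integral of x² over [0, 1], which mirrors the supremum over simple functions in the definition.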

Other Integrals

Although the Riemann and Lebesgue integrals are the most commonly used definitions of the integral, a number of others have been developed, including:

- The Riemann-Stieltjes integral, an important extension of the Riemann integral useful in physics.

- The Lebesgue-Stieltjes integral, further developed by Johann Radon, which generalizes the Riemann-Stieltjes and Lebesgue integrals.

- The Daniell integral, which subsumes the Lebesgue integral and Lebesgue-Stieltjes integral without the dependence on measures.

- The Henstock-Kurzweil integral, variously defined by Arnaud Denjoy, Oskar Perron, and (most elegantly, as the gauge integral) Jaroslav Kurzweil, and developed by Ralph Henstock.

History

Main article: History of calculus

Pre-calculus integration

Integration can be traced as far back as ancient Egypt, circa 1800 BC, with the Moscow Mathematical Papyrus demonstrating knowledge of a formula for the volume of a pyramidal frustum. The first documented systematic technique capable of determining integrals is the method of exhaustion of Eudoxus (circa 370 BC), which sought to find areas and volumes by breaking them up into an infinite number of shapes for which the area or volume was known. This method was further developed and employed by Archimedes and used to calculate areas for parabolas and an approximation to the area of a circle. Similar methods were independently developed in China around the 3rd century AD by Liu Hui, who used them to find the area of the circle. This method was later used by Zu Chongzhi to find the volume of a sphere.

Significant advances on techniques such as the method of exhaustion did not begin to appear until the 17th century. At this time the work of Cavalieri with his method of indivisibles, and work by Fermat, began to lay the foundations of modern calculus. Further steps were made in the 17th century by Barrow and Torricelli, who provided the first hints of a connection between integration and differentiation.

Newton and Leibniz

The major advance in integration came in the 17th century with the independent discovery of the fundamental theorem of calculus by Newton and Leibniz. The theorem demonstrates a connection between integration and differentiation. This connection, combined with the comparative ease of differentiation, can be exploited to calculate integrals. In particular, the fundamental theorem of calculus allows one to solve a much broader class of problems. Equal in importance is the comprehensive mathematical framework that both Newton and Leibniz developed. Given the name infinitesimal calculus, it allowed for precise analysis of functions within continuous domains. This framework eventually became modern calculus, whose notation for integrals is drawn directly from the work of Leibniz. Their revolutionary work paved the way for later physics. In fact, a common legend holds that Newton developed integration so that he could prove some very important results in his theory of gravitation (specifically, that the gravitational field of a spherically symmetric mass distribution is identical to that of a point mass placed at its center).

Formalising integrals

While Newton and Leibniz provided a systematic approach to integration, their work lacked a degree of rigour. Bishop Berkeley memorably attacked infinitesimals as "the ghosts of departed quantity". Calculus acquired a firmer footing with the development of limits and was given a suitable foundation by Cauchy in the first half of the 19th century. Integration was first rigorously formalised, using limits, by Riemann. Although all piecewise continuous functions on a closed and bounded interval are Riemann integrable, subsequently more general functions were considered, to which Riemann's definition does not apply, and Lebesgue formulated a different definition of integral, founded in measure theory. Other definitions of integral, extending Riemann's and Lebesgue's approaches, were proposed.

Notation

Isaac Newton used a small vertical bar above a variable to indicate integration, or placed the variable inside a box. The vertical bar was easily confused with $\dot{x}$ or $x'$, which Newton used to indicate differentiation, and the box notation was difficult for printers to reproduce, so these notations were not widely adopted.

The modern notation for the indefinite integral was introduced by Gottfried Leibniz in 1675 (Burton 1988, p. 359)(Leibniz 1899, p. 154). He derived the integral symbol, "∫", from an elongated letter S, standing for summa (Latin for "sum" or "total"). Just like the notation for derivatives, Leibniz's notation proved to be more easily extendable to more modern theories (see differential forms). The modern notation for the definite integral, with limits above and below the integral sign, was first used by Joseph Fourier in Mémoires of the French Academy around 1819–20, reprinted in his book of 1822 (Cajori 1929, pp. 249–250)(Fourier 1822, §231). In Arabic, which is written from right to left, an inverted integral symbol is used (W3C 2006).

Properties of the integral

In this section, f and g are real-valued integrable functions defined on the real interval [a, b], where a ≤ b. This section discusses properties general to all definitions of the integral.

Functional

An integral is essentially a linear functional, a mapping from the space of integrable functions onto a scalar field (usually the real or the complex numbers). It can also be treated as an inner product in a vector space composed of the integrable functions (see Fourier series for an example of a complex inner-product vector space with an orthogonal basis).

Linearity

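If λ and κ are any real numbers, then the function λf + κg defined by (λf + κg)(x) = λf(x) + κg(x) for each x in [a, b] is integrable on [a, b], with

$$\int_a^b (\lambda f + \kappa g)(x) \, dx = \lambda \int_a^b f(x) \, dx + \kappa \int_a^b g(x) \, dx.$$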

This shows that the operation of integration is linear, and that the set of integrable functions on a closed interval is a real vector space, since each of the scalar product and sum of integrable functions is itself an integrable function. If λ, κ, f, and g are complex-valued, linearity still holds, implying a complex vector space.

Basic inequalities

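If m ≤ f(x) ≤ M for each x in [a, b] then

$$m(b - a) \le \int_a^b f(x) \, dx \le M(b - a).$$

If f(x) ≤ g(x) for each x in [a, b] then

$$\int_a^b f(x) \, dx \le \int_a^b g(x) \, dx.$$

The functions fg, f², g², and | f |, defined by, respectively,

$$(fg)(x) = f(x)\,g(x), \quad f^2(x) = (f(x))^2, \quad g^2(x) = (g(x))^2, \quad |f|(x) = |f(x)|,$$

for each x in [a, b] are integrable, with

$$\left( \int_a^b (fg)(x) \, dx \right)^2 \le \left( \int_a^b f^2(x) \, dx \right) \left( \int_a^b g^2(x) \, dx \right)$$

and

$$\left| \int_a^b f(x) \, dx \right| \le \int_a^b |f(x)| \, dx.$$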

Conventions

If a > b then define

$$\int_a^b f(x) \, dx = -\int_b^a f(x) \, dx,$$

which implies that if a = b then

$$\int_a^a f(x) \, dx = 0.$$

The first convention is necessary in consideration of subintervals of [a, b] (explained below); the second says that an integral taken over a degenerate interval, or a point, should be zero.

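One reason for the first convention is that the integrability of f on an interval [a, b] implies that f is integrable on any subinterval [c, d], but in particular, if c is any element of [a, b], then

$$\int_a^b f(x) \, dx = \int_a^c f(x) \, dx + \int_c^b f(x) \, dx.$$

With the first convention the resulting relation

$$\int_a^c f(x) \, dx = \int_a^b f(x) \, dx - \int_c^b f(x) \, dx = \int_a^b f(x) \, dx + \int_b^c f(x) \, dx$$

is then well-defined for any cyclic permutation of a, b, and c.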

Extensions

Improper integrals

Main article: Improper integral

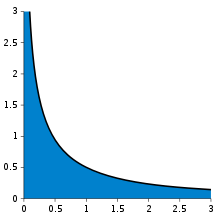

The improper integral $\int_0^\infty \frac{dx}{(x+1)\sqrt{x}} = \pi$ has unbounded intervals for both domain and range.

A "proper" Riemann integral assumes the integrand is defined and finite on a closed and bounded interval, bracketed by the limits of integration. An improper integral occurs when one or more of these conditions is not satisfied. In some cases such integrals may be defined by considering the limit of a sequence of proper Riemann integrals on progressively larger intervals.

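If the interval is unbounded, for instance at its upper end, then the improper integral is the limit as that endpoint goes to infinity.

$$\int_a^\infty f(x) \, dx = \lim_{b \to \infty} \int_a^b f(x) \, dx$$

If the integrand is only defined or finite on a half-open interval, for instance (a,b], then again a limit may provide a finite result.

$$\int_a^b f(x) \, dx = \lim_{\epsilon \to 0^+} \int_{a+\epsilon}^b f(x) \, dx$$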

That is, the improper integral is the limit of proper integrals as one endpoint of the interval of integration approaches either a specified real number, or ∞, or −∞. In more complicated cases, limits are required at both endpoints, or at interior points.

Consider, for example, the function 1/((x+1)√x) integrated from 0 to ∞ (shown right). At the lower bound, as x goes to 0 the function goes to ∞; and the upper bound is itself ∞, though the function goes to 0. Thus this is a doubly improper integral. Integrated, say, from 1 to 3, an ordinary Riemann sum suffices to produce a result of π/6. To integrate from 1 to ∞, a Riemann sum is not possible. However, any finite upper bound, say t (with t > 1), gives a well-defined result, π/2 − 2 arctan(1/√t). This has a finite limit as t goes to infinity, namely π/2. Similarly, the integral from 1/3 to 1 allows a Riemann sum as well, coincidentally again producing π/6. Replacing 1/3 by an arbitrary positive value s (with s < 1) is equally safe, giving −π/2 + 2 arctan(1/√s). This, too, has a finite limit as s goes to zero, namely π/2. Combining the limits of the two fragments, the result of this improper integral is

$$\int_0^\infty \frac{dx}{(x+1)\sqrt{x}} = \lim_{s \to 0} \int_s^1 \frac{dx}{(x+1)\sqrt{x}} + \lim_{t \to \infty} \int_1^t \frac{dx}{(x+1)\sqrt{x}} = \frac{\pi}{2} + \frac{\pi}{2} = \pi.$$

This process is not guaranteed success; a limit may fail to exist, or may be unbounded. For example, over the bounded interval 0 to 1 the integral of 1/x does not converge; and over the unbounded interval 1 to ∞ the integral of 1/√x does not converge.
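The two limits in the worked example above can be checked numerically. The sketch below is a minimal illustration in Python; it relies on the antiderivative 2 arctan(√x) of 1/((x+1)√x), which is not stated in the text but can be verified by differentiation, and the function names are hypothetical.

```python
import math

def antiderivative(x):
    """An antiderivative of 1/((x + 1) * sqrt(x)) for x >= 0."""
    return 2.0 * math.atan(math.sqrt(x))

def upper_piece(t):
    """Proper integral from 1 to t (t > 1)."""
    return antiderivative(t) - antiderivative(1.0)

def lower_piece(s):
    """Proper integral from s to 1 (0 < s < 1)."""
    return antiderivative(1.0) - antiderivative(s)

# Push the endpoints towards infinity and towards zero respectively.
for k in (2, 4, 8, 16):
    t, s = 10.0 ** k, 10.0 ** (-k)
    print(k, upper_piece(t), lower_piece(s), upper_piece(t) + lower_piece(s))

total = upper_piece(1e16) + lower_piece(1e-16)
```

Each printed pair approaches π/2, and their sum approaches π, as the endpoints move outward.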

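It may also happen that an integrand is unbounded at an interior point, in which case the integral must be split at that point, and the limit integrals on both sides must exist and must be bounded. Thus

$$\int_{-1}^{1} \frac{dx}{\sqrt[3]{x^2}} = \lim_{s \to 0} \int_{-1}^{-s} \frac{dx}{\sqrt[3]{x^2}} + \lim_{t \to 0} \int_{t}^{1} \frac{dx}{\sqrt[3]{x^2}} = \lim_{s \to 0} 3(1 - \sqrt[3]{s}) + \lim_{t \to 0} 3(1 - \sqrt[3]{t}) = 3 + 3 = 6.$$

But the similar integral

$$\int_{-1}^{1} \frac{dx}{x}$$

cannot be assigned a value in this way, as the integrals above and below zero do not independently converge. (However, see Cauchy principal value.)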

Multiple integration

Main article: Multiple integral

Integrals can be taken over regions other than intervals. In general, an integral over a set E of a function f is written:

$$\int_E f(x) \, dx.$$

Here x need not be a real number, but can be another suitable quantity, for instance a vector in $\mathbf{R}^3$. Under suitable conditions, Fubini's theorem shows that such integrals can be rewritten as an iterated integral. In other words, the integral can be calculated by integrating one coordinate at a time.

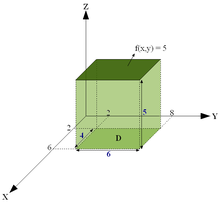

Just as the definite integral of a positive function of one variable represents the area of the region between the graph of the function and the x-axis, the double integral of a positive function of two variables represents the volume of the region between the surface defined by the function and the plane which contains its domain. (Note that the same volume can be obtained via the triple integral — the integral of a function in three variables — of the constant function f(x, y, z) = 1 over the above-mentioned region between the surface and the plane.) If the number of variables is higher, one will calculate "hypervolumes" (volumes of solid of more than three dimensions) that cannot be graphed.

For example, the volume of the parallelepiped of sides 4×6×5 may be obtained in two ways:

- By the double integral

$$\iint_D 5 \, dx \, dy$$

of the function f(x, y) = 5 calculated in the region D in the xy-plane which is the base of the parallelepiped.

- By the triple integral

$$\iiint_{\mathrm{parallelepiped}} 1 \, dx \, dy \, dz$$

of the constant function 1 calculated on the parallelepiped itself.
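Both routes to the volume can be sketched with iterated midpoint sums, integrating one coordinate at a time. This Python fragment is only an illustration: placing the base at [0, 4] × [0, 6] with height 5 is an assumption about how the 4×6×5 parallelepiped sits in space, and the helper names are hypothetical.

```python
def midpoints(a, b, n):
    """Midpoints of n equal subintervals of [a, b], and their common width."""
    h = (b - a) / n
    return [a + (i + 0.5) * h for i in range(n)], h

def double_integral(f, xr, yr, n=20):
    """Midpoint-rule approximation of the double integral of f over xr x yr."""
    xs, hx = midpoints(*xr, n)
    ys, hy = midpoints(*yr, n)
    return sum(f(x, y) for x in xs for y in ys) * hx * hy

def triple_integral(f, xr, yr, zr, n=20):
    """Midpoint-rule approximation of the triple integral of f over a box."""
    xs, hx = midpoints(*xr, n)
    ys, hy = midpoints(*yr, n)
    zs, hz = midpoints(*zr, n)
    return sum(f(x, y, z) for x in xs for y in ys for z in zs) * hx * hy * hz

# Height function 5 over the base, and constant 1 over the solid itself.
volume_double = double_integral(lambda x, y: 5.0, (0.0, 4.0), (0.0, 6.0))
volume_triple = triple_integral(lambda x, y, z: 1.0,
                                (0.0, 4.0), (0.0, 6.0), (0.0, 5.0))
print(volume_double, volume_triple)
```

Because both integrands are constant, the midpoint sums agree with the exact volume 4 · 6 · 5 = 120 up to rounding.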

Because a function of more than one variable has no antiderivative in the one-variable sense, there are no indefinite multiple integrals; all multiple integrals are definite integrals.

Line integrals

Main article: Line integral

The concept of an integral can be extended to more general domains of integration, such as curved lines and surfaces. Such integrals are known as line integrals and surface integrals respectively. These have important applications in physics, as when dealing with vector fields.

A line integral (sometimes called a path integral) is an integral where the function to be integrated is evaluated along a curve. Various different line integrals are in use. In the case of a closed curve it is also called a contour integral.

The function to be integrated may be a scalar field or a vector field. The value of the line integral is the sum of values of the field at all points on the curve, weighted by some scalar function on the curve (commonly arc length or, for a vector field, the scalar product of the vector field with a differential vector in the curve). This weighting distinguishes the line integral from simpler integrals defined on intervals. Many simple formulas in physics have natural continuous analogs in terms of line integrals; for example, the fact that work is equal to force multiplied by distance may be expressed (in terms of vector quantities) as:

$$W = \mathbf{F} \cdot \mathbf{d};$$

which is paralleled by the line integral:

$$W = \int_C \mathbf{F} \cdot d\mathbf{s};$$

which sums up vector components along a continuous path, and thus finds the work done on an object moving through a field, such as an electric or gravitational field.
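The passage from "force times distance" to a line integral can be sketched numerically by replacing the curve with many short chords and summing force · displacement over them. The Python below is a rough illustration under stated assumptions: the planar field F(x, y) = (y, x), the two paths from (0, 0) to (1, 1), and the function name `work_along_path` are all hypothetical choices made for the example.

```python
def work_along_path(force, path, n=1000):
    """Approximate the line integral of force . ds along path(t), 0 <= t <= 1.

    The path is replaced by n straight chords; on each chord the force
    is sampled at the chord midpoint and dotted with the displacement.
    """
    total = 0.0
    x0, y0 = path(0.0)
    for i in range(1, n + 1):
        x1, y1 = path(i / n)
        fx, fy = force(0.5 * (x0 + x1), 0.5 * (y0 + y1))
        total += fx * (x1 - x0) + fy * (y1 - y0)
        x0, y0 = x1, y1
    return total

def force(x, y):
    """Assumed example field F(x, y) = (y, x), the gradient of x * y."""
    return (y, x)

def parabola(t):
    return (t, t * t)

def straight_line(t):
    return (t, t)

work_parabola = work_along_path(force, parabola)
work_line = work_along_path(force, straight_line)
print(work_parabola, work_line)
```

Since this particular field is the gradient of xy, the work depends only on the endpoints, so both paths give a value of 1; a non-conservative field would in general give path-dependent results.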

Surface integrals

Main article: Surface integral

A surface integral is a definite integral taken over a surface (which may be a curved set in space); it can be thought of as the double integral analog of the line integral. The function to be integrated may be a scalar field or a vector field. The value of the surface integral is the sum of the field at all points on the surface. This can be achieved by splitting the surface into surface elements, which provide the partitioning for Riemann sums.

For an example of applications of surface integrals, consider a vector field v on a surface S; that is, for each point x in S, v(x) is a vector. Imagine that we have a fluid flowing through S, such that v(x) determines the velocity of the fluid at x. The flux is defined as the quantity of fluid flowing through S in unit amount of time. To find the flux, we need to take the dot product of v with the unit surface normal to S at each point, which will give us a scalar field, which we integrate over the surface:

$$\int_S \mathbf{v} \cdot d\mathbf{S}.$$

The fluid flux in this example may be from a physical fluid such as water or air, or from electrical or magnetic flux. Thus surface integrals have applications in physics, particularly with the classical theory of electromagnetism.

Integrals of differential forms

A differential form is a mathematical concept in the fields of multivariate calculus, differential topology and tensors. The modern notation for the differential form, as well as the idea of the differential forms as being the wedge products of exterior derivatives forming an exterior algebra, was introduced by Élie Cartan.

We initially work in an open set in $\mathbf{R}^n$. A 0-form is defined to be a smooth function f. When we integrate a function f over an m-dimensional subspace S of $\mathbf{R}^n$, we write it as

$$\int_S f \, dx^1 \cdots dx^m.$$

(The superscripts are indices, not exponents.) We can consider $dx^1$ through $dx^n$ to be formal objects themselves, rather than tags appended to make integrals look like Riemann sums. Alternatively, we can view them as covectors, and thus a measure of "density" (hence integrable in a general sense). We call the $dx^1, \ldots, dx^n$ basic 1-forms.

We define the wedge product, "∧", a bilinear "multiplication" operator on these elements, with the alternating property that

$$dx^a \wedge dx^a = 0$$

for all indices a. Note that alternation along with linearity implies $dx^b \wedge dx^a = -dx^a \wedge dx^b$. This also ensures that the result of the wedge product has an orientation.

We define the set of all these products to be basic 2-forms, and similarly we define the set of products of the form $dx^a \wedge dx^b \wedge dx^c$ to be basic 3-forms. A general k-form is then a weighted sum of basic k-forms, where the weights are the smooth functions f. Together these form a vector space with basic k-forms as the basis vectors, and 0-forms (smooth functions) as the field of scalars. The wedge product then extends to k-forms in the natural way. Over $\mathbf{R}^n$ at most n covectors can be linearly independent, thus a k-form with k > n will always be zero, by the alternating property.

In addition to the wedge product, there is also the exterior derivative operator d. This operator maps k-forms to (k+1)-forms. For a k-form $\omega = f \, dx^a$ over $\mathbf{R}^n$, we define the action of d by:

$$d\omega = \sum_{i=1}^{n} \frac{\partial f}{\partial x^i} \, dx^i \wedge dx^a,$$

with extension to general k-forms occurring linearly.

This more general approach allows for a more natural coordinate-free approach to integration on manifolds. It also allows for a natural generalisation of the fundamental theorem of calculus, called Stokes' theorem, which we may state as

$$\int_\Omega d\omega = \int_{\partial\Omega} \omega$$

where ω is a general k-form, and ∂Ω denotes the boundary of the region Ω. Thus in the case that ω is a 0-form and Ω is a closed interval of the real line, this reduces to the fundamental theorem of calculus. In the case that ω is a 1-form and Ω is a 2-dimensional region in the plane, the theorem reduces to Green's theorem. Similarly, using 2-forms, and 3-forms and Hodge duality, we can arrive at the classical Stokes' theorem and the divergence theorem. In this way we can see that differential forms provide a powerful unifying view of integration.

Techniques and applications

Computing integrals

The most basic technique for computing integrals of one real variable is based on the fundamental theorem of calculus. It proceeds like this:

- Choose a function f(x) and an interval [a, b].

- Find an antiderivative of f, that is, a function F such that F' = f.

- By the fundamental theorem of calculus, provided the integrand and integral have no singularities on the path of integration,

$$\int_a^b f(x) \, dx = F(b) - F(a).$$

- Therefore the value of the integral is F(b) − F(a).

Note that the integral is not actually the antiderivative, but the fundamental theorem allows us to use antiderivatives to evaluate definite integrals.
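The steps above can be traced on a small example. The following Python sketch assumes the integrand f(x) = x² on the interval [0, 3], with antiderivative F(x) = x³/3; these choices and the helper `midpoint_sum` are illustrative only. The antiderivative route gives the value directly, and a midpoint Riemann sum serves as an independent check.

```python
def f(x):
    """Assumed example integrand."""
    return x * x

def F(x):
    """An antiderivative of f, since the derivative of x**3 / 3 is x**2."""
    return x ** 3 / 3.0

def midpoint_sum(func, a, b, n=1000):
    """Riemann sum of func over [a, b] with n equal pieces, tagged at midpoints."""
    h = (b - a) / n
    return h * sum(func(a + (i + 0.5) * h) for i in range(n))

a, b = 0.0, 3.0
exact = F(b) - F(a)              # value given by the fundamental theorem
approx = midpoint_sum(f, a, b)   # direct approximation of the definite integral
print(exact, approx)
```

The two numbers agree closely, which illustrates that the antiderivative is a tool for evaluating the definite integral rather than the integral itself.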

The difficult step is often finding an antiderivative of f. It is rarely possible to glance at a function and write down its antiderivative. More often, it is necessary to use one of the many techniques that have been developed to evaluate integrals. Most of these techniques rewrite one integral as a different one which is hopefully more tractable. Techniques include:

- Integration by substitution

- Integration by parts

- Integration by trigonometric substitution

- Integration by partial fractions

Even if these techniques fail, it may still be possible to evaluate a given integral. The next most common technique is residue calculus, whilst for nonelementary integrals Taylor series can sometimes be used to find the antiderivative. There are also many less common ways of calculating definite integrals; for instance, Parseval's identity can be used to transform an integral over a rectangular region into an infinite sum. Occasionally, an integral can be evaluated by a trick; for an example of this, see Gaussian integral.

Computations of volumes of solids of revolution can usually be done with disk integration or shell integration.

Specific results which have been worked out by various techniques are collected in the list of integrals.

Symbolic algorithms

Main article: Symbolic integration

Many problems in mathematics, physics, and engineering involve integration where an explicit formula for the integral is desired. Extensive tables of integrals have been compiled and published over the years for this purpose. With the spread of computers, many professionals, educators, and students have turned to computer algebra systems that are specifically designed to perform difficult or tedious tasks, including integration. Symbolic integration presents a special challenge in the development of such systems.

A major mathematical difficulty in symbolic integration is that in many cases, a closed formula for the antiderivative of a rather innocent-looking function simply does not exist. For instance, it is known that the antiderivatives of the functions $e^{x^2}$, $x^x$ and sin x / x cannot be expressed in closed form involving only rational and exponential functions, logarithm, trigonometric and inverse trigonometric functions, and the operations of multiplication and composition; in other words, none of the three given functions is integrable in elementary functions. Differential Galois theory provides general criteria that allow one to determine whether the antiderivative of an elementary function is elementary. Unfortunately, it turns out that functions with closed expressions of antiderivatives are the exception rather than the rule. Consequently, computerized algebra systems have no hope of being able to find an antiderivative for a randomly constructed elementary function. On the positive side, if the 'building blocks' for antiderivatives are fixed in advance, it may still be possible to decide whether the antiderivative of a given function can be expressed using these blocks and operations of multiplication and composition, and to find the symbolic answer whenever it exists. The Risch-Norman algorithm, implemented in the Mathematica and Maple computer algebra systems, does just that for functions and antiderivatives built from rational functions, radicals, logarithm, and exponential functions.

Some special integrands occur often enough to warrant special study. In particular, it may be useful to have, in the set of antiderivatives, the special functions of physics (like the Legendre functions, the hypergeometric function, the Gamma function and so on). Extending the Risch-Norman algorithm so that it includes these functions is possible but challenging.

Most humans are not able to integrate such general formulae, so in a sense computers are more skilled at integrating highly complicated formulae. However, very complex formulae are unlikely to have closed-form antiderivatives, so how much of an advantage this presents is open to debate.

Numerical quadrature

Main article: Numerical integration

The integrals encountered in a basic calculus course are deliberately chosen for simplicity; those found in real applications are not always so accommodating. Some integrals cannot be found exactly, some require special functions which themselves are a challenge to compute, and others are so complex that finding the exact answer is too slow. This motivates the study and application of numerical methods for approximating integrals, which today use floating point arithmetic on digital electronic computers. Many of the ideas arose much earlier, for hand calculations; but the speed of general-purpose computers like the ENIAC created a need for improvements.

The goals of numerical integration are accuracy, reliability, efficiency, and generality. Sophisticated methods can vastly outperform a naive method by all four measures (Dahlquist & Björck forthcoming)(Kahaner, Moler & Nash 1989)(Stoer & Bulirsch 2002). Consider, for example, the integral

$$\int_{-2}^{2} \tfrac{1}{5} \left( \tfrac{1}{100} \bigl( 322 + 3x(98 + x(37 + x)) \bigr) - 24 \, \frac{x}{1 + x^2} \right) dx$$

which has the exact answer 94/25 = 3.76. (In ordinary practice the answer is not known in advance, so an important task, not explored here, is to decide when an approximation is good enough.) A "calculus book" approach divides the integration range into, say, 16 equal pieces, and computes function values.

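Spaced function values

| x | −2.00 | −1.50 | −1.00 | −0.50 | 0.00 | 0.50 | 1.00 | 1.50 | 2.00 |
|---|---|---|---|---|---|---|---|---|---|
| f(x) | 2.22800 | 2.45663 | 2.67200 | 2.32475 | 0.64400 | −0.92575 | −0.94000 | −0.16963 | 0.83600 |

| x | −1.75 | −1.25 | −0.75 | −0.25 | 0.25 | 0.75 | 1.25 | 1.75 |
|---|---|---|---|---|---|---|---|---|
| f(x) | 2.33041 | 2.58562 | 2.62934 | 1.64019 | −0.32444 | −1.09159 | −0.60387 | 0.31734 |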

Using the left end of each piece, the rectangle method sums 16 function values and multiplies by the step width, h, here 0.25, to get an approximate value of 3.94325 for the integral. The accuracy is not impressive, but calculus formally uses pieces of infinitesimal width, so initially this may seem little cause for concern. Indeed, repeatedly doubling the number of steps eventually produces an approximation of 3.76001. However, $2^{18}$ pieces are required, a great computational expense for so little accuracy; and a reach for greater accuracy can force steps so small that arithmetic precision becomes an obstacle.

A better approach replaces the horizontal tops of the rectangles with slanted tops touching the function at the ends of each piece. This trapezoidal rule is almost as easy to calculate; it sums all 17 function values, but weights the first and last by one half, and again multiplies by the step width. This immediately improves the approximation to 3.76925, which is noticeably more accurate. Furthermore, only $2^{10}$ pieces are needed to achieve 3.76000, substantially less computation than the rectangle method for comparable accuracy.
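The rectangle and trapezoidal figures quoted above can be reproduced with a few lines of Python. This is a sketch rather than a reference implementation: the integrand is written out on the assumption that it is the function matching the tabulated values (for example f(0) = 0.644 and f(2) = 0.836), and the function names are hypothetical.

```python
def f(x):
    """Assumed integrand, chosen to match the tabulated function values."""
    return (0.01 * (322 + 3 * x * (98 + x * (37 + x))) - 24 * x / (1 + x * x)) / 5

def left_rectangle(func, a, b, n):
    """Rectangle method using the left end of each of n equal pieces."""
    h = (b - a) / n
    return h * sum(func(a + i * h) for i in range(n))

def trapezoid(func, a, b, n):
    """Trapezoidal rule: end values weighted by one half, interior by one."""
    h = (b - a) / n
    interior = sum(func(a + i * h) for i in range(1, n))
    return h * (0.5 * func(a) + interior + 0.5 * func(b))

rect16 = left_rectangle(f, -2.0, 2.0, 16)
trap16 = trapezoid(f, -2.0, 2.0, 16)
print(round(rect16, 5), round(trap16, 5))
```

With 16 pieces the two rules differ only in how the end values are weighted, yet the trapezoidal result is already much closer to 3.76.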

The Romberg method builds on the trapezoid method to great effect. First, the step lengths are halved incrementally, giving trapezoid approximations denoted by $T(h_0)$, $T(h_1)$, and so on, where $h_{k+1}$ is half of $h_k$. For each new step size, only half the new function values need to be computed; the others carry over from the previous size. But the really powerful idea is to interpolate a polynomial through the approximations, and extrapolate to T(0). With this method a numerically exact answer here requires only four pieces (five function values)! The Lagrange polynomial interpolating $\{h_k, T(h_k)\}_{k=0 \ldots 2}$ = {(4.00, 6.128), (2.00, 4.352), (1.00, 3.908)} is $3.76 + 0.148h^2$, producing the extrapolated value 3.76 at h = 0.
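The extrapolation step can be sketched as follows. The Python below is a simplified illustration, not a full Romberg implementation: it recomputes every trapezoid sum from scratch instead of reusing function values, evaluates the Lagrange polynomial through the three points directly at h = 0, and assumes the integrand is the function matching the tabulated values. All names are hypothetical.

```python
def f(x):
    """Assumed integrand, chosen to match the tabulated function values."""
    return (0.01 * (322 + 3 * x * (98 + x * (37 + x))) - 24 * x / (1 + x * x)) / 5

def trapezoid(func, a, b, n):
    """Trapezoidal rule with n equal pieces."""
    h = (b - a) / n
    interior = sum(func(a + i * h) for i in range(1, n))
    return h * (0.5 * func(a) + interior + 0.5 * func(b))

def lagrange_at_zero(xs, ys):
    """Value at 0 of the Lagrange polynomial through the points (xs[i], ys[i])."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        total += weight * yi
    return total

steps = [4.0, 2.0, 1.0]                                     # h_0, h_1, h_2
T = [trapezoid(f, -2.0, 2.0, round(4.0 / h)) for h in steps]
extrapolated = lagrange_at_zero(steps, T)
print(T, extrapolated)
```

The three trapezoid values are individually poor, but the polynomial through them evaluated at zero step width recovers 3.76 to rounding error.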

Gaussian quadrature puts all these to shame. In this example, it computes the function values at just two x positions, ±2/√3, then doubles each value and sums to get the numerically exact answer. The explanation for this dramatic success lies in error analysis, and a little luck. An n-point Gaussian method is exact for polynomials of degree up to 2n−1. The function in this example is a degree 3 polynomial, plus a term that cancels because the chosen endpoints are symmetric around zero. (Cancellation also benefits the Romberg method.)
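The two-point computation described above is short enough to write out in full. This Python sketch assumes the integrand is the function matching the tabulated values and hard-codes the two-point Gauss–Legendre rule rescaled to [−2, 2] (nodes ±2/√3, weights 2); the variable names are hypothetical.

```python
import math

def f(x):
    """Assumed integrand, chosen to match the tabulated function values."""
    return (0.01 * (322 + 3 * x * (98 + x * (37 + x))) - 24 * x / (1 + x * x)) / 5

# Two-point Gauss-Legendre rule on [-1, 1] has nodes +-1/sqrt(3) and weights 1;
# rescaling to [-2, 2] multiplies both the nodes and the weights by 2.
node = 2.0 / math.sqrt(3.0)
weight = 2.0

gauss2 = weight * f(-node) + weight * f(node)
print(gauss2)
```

Only two function evaluations are used, compared with five for the extrapolated trapezoid values and seventeen for the 16-piece trapezoidal rule.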

Shifting the range left a little, so the integral is from −2.25 to 1.75, removes the symmetry. Nevertheless, the trapezoid method is rather slow, the polynomial interpolation method of Romberg is acceptable, and the Gaussian method requires the least work — if the number of points is known in advance. As well, rational interpolation can use the same trapezoid evaluations as the Romberg method to greater effect.

Quadrature method cost comparison

| Method | Trapezoid | Romberg | Rational | Gauss |
|---|---|---|---|---|
| Points | 1048577 | 257 | 129 | 36 |
| Rel. Err. | −5.3×10 | −6.3×10 | 8.8×10 | 3.1×10 |

In practice, each method must use extra evaluations to ensure an error bound on an unknown function; this tends to offset some of the advantage of the pure Gaussian method, and motivates the popular Gauss–Kronrod hybrid. Symmetry can still be exploited by splitting this integral into two ranges, from −2.25 to −1.75 (no symmetry), and from −1.75 to 1.75 (symmetry). More broadly, adaptive quadrature partitions a range into pieces based on function properties, so that data points are concentrated where they are needed most.

This brief introduction omits higher-dimensional integrals (for example, area and volume calculations), where alternatives such as Monte Carlo integration have great importance.

A calculus text is no substitute for numerical analysis, but the reverse is also true. Even the best adaptive numerical code sometimes requires a user to help with the more demanding integrals. For example, improper integrals may require a change of variable or methods that can avoid infinite function values; and known properties like symmetry and periodicity may provide critical leverage.

See also

- Table of integrals - integrals of the most common functions.

- Lists of integrals

- Multiple integral

- Antiderivative

- Numerical integration

- Integral equation

- Riemann integral

- Riemann sum

- Differentiation under the integral sign

- Product integral

References

- Apostol, Tom M. (1967), Calculus, Vol. 1: One-Variable Calculus with an Introduction to Linear Algebra (2nd ed.), Wiley, ISBN 978-0-471-00005-1

- Bourbaki, Nicolas (2004), Integration I, Springer Verlag, ISBN 3-540-41129-1. In particular chapters III and IV.

- Burton, David M. (2005), The History of Mathematics: An Introduction (6 ed.), McGraw-Hill, p. 359, ISBN 978-0-07-305189-5

- Cajori, Florian (1929), A History Of Mathematical Notations Volume II, Open Court Publishing, pp. 247–252, ISBN 978-0-486-67766-8

- Dahlquist, Germund; Björck, Åke (forthcoming), "Chapter 5: Numerical Integration", Numerical Methods in Scientific Computing, Philadelphia: SIAM

- Folland, Gerald B. (1984), Real Analysis: Modern Techniques and Their Applications (1st ed.), Wiley-Interscience, ISBN 978-0-471-80958-6

- Fourier, Jean Baptiste Joseph (1822), Théorie analytique de la chaleur, Chez Firmin Didot, père et fils, §231. Available in translation as Fourier, Joseph (1878), The analytical theory of heat, Freeman, Alexander (trans.), Cambridge University Press, pp. 200–201

- Kahaner, David; Moler, Cleve; Nash, Stephen (1989), "Chapter 5: Numerical Quadrature", Numerical Methods and Software, Prentice-Hall, ISBN 978-0-13-627258-8

- Leibniz, Gottfried Wilhelm (1899), Gerhardt, Karl Immanuel (ed.), Der Briefwechsel von Gottfried Wilhelm Leibniz mit Mathematikern. Erster Band, Berlin: Mayer & Müller

- Miller, Jeff, Earliest Uses of Symbols of Calculus, retrieved 2007-06-02

- Rudin, Walter (1987), "Chapter 1: Abstract Integration", Real and Complex Analysis (International ed.), McGraw-Hill, ISBN 978-0-07-100276-9

- Saks, Stanisław (1964), Theory of the integral (English translation by L. C. Young. With two additional notes by Stefan Banach. Second revised ed.), New York: Dover

- Stoer, Josef; Bulirsch, Roland (2002), "Chapter 3: Topics in Integration", Introduction to Numerical Analysis (3rd ed.), Springer, ISBN 978-0-387-95452-3.

- W3C (2006), Arabic mathematical notation


External links

- The Integrator by Wolfram Research

- Function Calculator from WIMS

- P.S. Wang, Evaluation of Definite Integrals by Symbolic Manipulation (1972) - a cookbook of definite integral techniques

Online books

- Keisler, H. Jerome, Elementary Calculus: An Approach Using Infinitesimals, University of Wisconsin

- Stroyan, K.D., A Brief Introduction to Infinitesimal Calculus, University of Iowa

- Mauch, Sean, Sean's Applied Math Book, CIT, an online textbook that includes a complete introduction to calculus

- Crowell, Benjamin, Calculus, Fullerton College, an online textbook

- Garrett, Paul, Notes on First-Year Calculus

- Hussain, Faraz, Understanding Calculus, an online textbook

- Sloughter, Dan, Difference Equations to Differential Equations, an introduction to calculus

- Wikibook of Calculus

- Numerical Methods of Integration at Holistic Numerical Methods Institute
