| Revision as of 11:03, 23 November 2018 edit196.8.123.44 (talk)No edit summary← Previous edit | Latest revision as of 15:41, 17 December 2024 edit undoTheNerdzilla (talk | contribs)Extended confirmed users1,528 edits Reverted 1 edit by 64.107.190.90 (talk): Unexplained removalTags: Twinkle Undo | ||

| (451 intermediate revisions by more than 100 users not shown) | |||

| Line 1: | Line 1: | ||

| {{pp-pc|small=yes}} | |||

| {{distinguish|text=the unrelated adage ]}} | |||

| {{Use American English|date=July 2021}} | |||

| {{Short description|Observation that in many real-life datasets, the leading digit is likely to be small}} | |||

| {{For|the unrelated adage|Benford's law of controversy}} | |||

| ] | ] | ||

| ] | ] | ||


{{Use dmy dates|date=September 2020}} | ||

| '''Benford's law''', also |

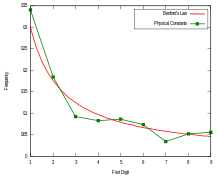

'''Benford's law''', also known as the '''Newcomb–Benford law''', the '''law of anomalous numbers''', or the '''first-digit law''', is an observation that in many real-life sets of numerical data, the leading digit is likely to be small.<ref name=BergerHill2011>Arno Berger and Theodore P. Hill, , 2011.</ref> In sets that obey the law, the number 1 appears as the leading significant digit about 30% of the time, while 9 appears as the leading significant digit less than 5% of the time. If the digits were distributed uniformly, each would occur about 11.1% of the time.<ref>{{cite web |url=http://mathworld.wolfram.com/BenfordsLaw.html |author = Weisstein, Eric W. |title=Benford's Law |website = MathWorld, A Wolfram web resource |access-date = 7 June 2015}}</ref> Benford's law also makes predictions about the distribution of second digits, third digits, digit combinations, and so on. | ||

| The graph to the right shows Benford's law for ], one of infinitely many cases of a generalized law regarding numbers expressed in arbitrary (integer) bases, which rules out the possibility that the phenomenon might be an artifact of the base-10 number system. Further generalizations published in 1995<ref>{{cite journal |url=https://projecteuclid.org/euclid.ss/1177009869 |author=Hill, Theodore |title=A Statistical Derivation of the Significant-Digit Law |journal=Statistical Science |year=1995 |volume=10 |issue=4 |doi=10.1214/ss/1177009869|doi-access=free }}</ref> included analogous statements for both the ''n''th leading digit and the joint distribution of the leading ''n'' digits, the latter of which leads to a corollary wherein the significant digits are shown to be a ] quantity. | |||

| The graph to the right shows Benford's law for ]. There is a generalization of the law to numbers expressed in other bases (for example, ]), and also a generalization from leading 1 digit to leading ''n'' digits. | |||


It has been shown that this result applies to a wide variety of data sets, including electricity bills, street addresses, stock prices, house prices, population numbers, death rates, lengths of rivers, and ] and ]s.<ref>Paul H. Kvam, Brani Vidakovic, ''Nonparametric Statistics with Applications to Science and Engineering'', p. 158.</ref> Like other general principles about natural data—for example, the fact that many data sets are well approximated by a ]—there are illustrative examples and explanations that cover many of the cases where Benford's law applies, though there are many other cases where Benford's law applies that resist simple explanations.<ref name=BergerHill2020>{{cite journal |last1=Berger |first1=Arno |last2=Hill |first2=Theodore P. |title=The mathematics of Benford's law: a primer |journal=Stat. Methods Appl. |date=June 30, 2020 |volume=30 |issue=3 |pages=779–795 |doi=10.1007/s10260-020-00532-8 |arxiv=1909.07527 |s2cid=202583554 |url=https://doi.org/10.1007/s10260-020-00532-8 }}</ref><ref>{{Cite journal |last1=Cai |first1=Zhaodong |last2=Faust |first2=Matthew |last3=Hildebrand |first3=A. J. |last4=Li |first4=Junxian |last5=Zhang |first5=Yuan |date=2020-03-15 |title=The Surprising Accuracy of Benford's Law in Mathematics |url=https://doi.org/10.1080/00029890.2020.1690387 |journal=The American Mathematical Monthly |volume=127 |issue=3 |pages=217–237 |doi=10.1080/00029890.2020.1690387 |arxiv=1907.08894 |s2cid=198147766 |issn=0002-9890}}</ref> Benford's law tends to be most accurate when values are distributed across multiple ], especially if the process generating the numbers is described by a ] (which is common in nature). | ||

| The law is named after physicist ], who stated it in 1938 in an article titled "The Law of Anomalous Numbers",<ref name=Benford>{{Cite journal | author = Frank Benford | author-link = Frank Benford | title = The law of anomalous numbers | journal = ] | volume = 78 | issue = 4 |date=March 1938 | pages = 551–572 | jstor=984802 | bibcode = 1938PAPhS..78..551B }}</ref> although it had been previously stated by ] in 1881.<ref name=Newcomb /><ref name=Formann2010 /> | |||

| The law is similar in concept, though not identical in distribution, to ]. | |||

| ==Definition== | ==Definition== | ||

| ] bar. Picking a random ''x'' position ] on this number line, roughly 30% of the time the first digit of the number will be 1.]] | ] bar. Picking a random ''x'' position ] on this number line, roughly 30% of the time the first digit of the number will be 1.]] | ||

| A set of numbers is said to satisfy Benford's law if the leading digit {{mvar|d}} ({{math|{{var|d}} ∈ {{mset|1, ..., 9}}}}) occurs with probability | A set of numbers is said to satisfy Benford's law if the leading digit {{mvar|d}} ({{math|{{var|d}} ∈ {{mset|1, ..., 9}}}}) occurs with probability<ref name="Miller2015" /> | ||


: <math>P(d) = \log_{10}(d + 1) - \log_{10}(d) = \log_{10}\left(\frac{d + 1}{d}\right) = \log_{10}\left(1 + \frac{1}{d}\right).</math> | ||

| The leading digits in such a set thus have the following distribution: | The leading digits in such a set thus have the following distribution: | ||

{| class="wikitable"
! {{nobold|{{mvar|d}}}} !! {{tmath|P(d)}} !! Relative size of {{tmath|P(d)}}
|-
| 1 ||{{right}} {{bartable|30.1|%|10}}
|-
| 2 ||{{right}} {{bartable|17.6|%|10}}
|-
| 3 ||{{right}} {{bartable|12.5|%|10}}
|-
| 4 ||{{right}} {{bartable| 9.7|%|10}}
|-
| 5 ||{{right}} {{bartable| 7.9|%|10}}
|-
| 6 ||{{right}} {{bartable| 6.7|%|10}}
|-
| 7 ||{{right}} {{bartable| 5.8|%|10}}
|-
| 8 ||{{right}} {{bartable| 5.1|%|10}}
|-
| 9 ||{{right}} {{bartable| 4.6|%|10}}
|}
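The percentages in the table can be reproduced directly from the formula for {{tmath|P(d)}}. The following Python sketch is illustrative only; the function name <code>benford_probability</code> is an assumption introduced here and does not come from any of the cited sources.

```python
import math


def benford_probability(d: int, base: int = 10) -> float:
    """Return log_base(1 + 1/d), the Benford probability of leading digit d."""
    if not 1 <= d < base:
        raise ValueError("leading digit must satisfy 1 <= d < base")
    return math.log(1 + 1 / d, base)


if __name__ == "__main__":
    # Print the first-digit distribution for base 10, one digit per line.
    for d in range(1, 10):
        print(f"{d}: {100 * benford_probability(d):.1f} %")
```

Because the logarithms telescope, the nine probabilities sum to log<sub>10</sub>(10) = 1, up to floating-point rounding.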

The quantity {{tmath|P(d)}} is proportional to the space between {{mvar|d}} and {{math|{{var|d}} + 1}} on a logarithmic scale. Therefore, this is the distribution expected if the ''logarithms'' of the numbers (but not the numbers themselves) are uniformly and randomly distributed. | ||

For example, a number {{mvar|x}}, constrained to lie between 1 and 10, starts with the digit 1 if {{math|1 ≤ {{var|x}} < 2}}, and starts with the digit 9 if {{math|9 ≤ {{var|x}} < 10}}. Therefore, {{mvar|x}} starts with the digit 1 if {{math|log 1 ≤ log {{var|x}} < log 2}}, or starts with 9 if {{math|log 9 ≤ log {{var|x}} < log 10}}. The interval {{math|[log 1, log 2]}} is much wider than the interval {{math|[log 9, log 10]}} (0.30 and 0.05 respectively); therefore if log {{mvar|x}} is uniformly and randomly distributed, it is much more likely to fall into the wider interval than the narrower interval, i.e. more likely to start with 1 than with 9; the probabilities are proportional to the interval widths, giving the equation above (as well as the generalization to other bases besides decimal). | ||

| Benford's law is sometimes stated in a stronger form, asserting that the fractional part of the logarithm of data is typically close to uniformly distributed between 0 and 1; from this, the main claim about the distribution of first digits can be derived. | Benford's law is sometimes stated in a stronger form, asserting that the fractional part of the logarithm of data is typically close to uniformly distributed between 0 and 1; from this, the main claim about the distribution of first digits can be derived.<ref name=BergerHill2020/> | ||


===In other bases=== | ||

| , hover over a graph to show the value for each point.]] | , hover over a graph to show the value for each point.)]] | ||

| An extension of Benford's law predicts the distribution of first digits in other bases besides decimal; in fact, any base {{math|''b'' ≥ 2}}. The general form is: | An extension of Benford's law predicts the distribution of first digits in other bases besides decimal; in fact, any base {{math|''b'' ≥ 2}}. The general form is<ref>{{Cite web |last=Pimbley |first=J. M. |date=2014 |title=Benford's Law as a Logarithmic Transformation |url=http://www.maxwell-consulting.com/Benford_Logarithmic_Transformation.pdf |archive-url=https://ghostarchive.org/archive/20221009/http://www.maxwell-consulting.com/Benford_Logarithmic_Transformation.pdf |archive-date=2022-10-09 |url-status=live |access-date=2020-11-15 |website=Maxwell Consulting, LLC}}</ref> | ||


: <math>P(d) = \log_b(d + 1) - \log_b(d) = \log_b\left(1 + \frac{1}{d}\right).</math> | ||

For {{math|1=''b'' = 2, 1}} (the binary and unary) number systems, Benford's law is true but trivial: all binary and unary numbers (except for 0 or the empty set) start with the digit 1. (On the other hand, the generalization of Benford's law to second and later digits is not trivial, even for binary numbers.<ref>{{Cite book |last=Khosravani |first=A. |title=Transformation Invariance of Benford Variables and their Numerical Modeling |publisher=Recent Researches in Automatic Control and Electronics |year=2012 |isbn=978-1-61804-080-0 |pages=57–61}}</ref>) | ||
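The base-{{mvar|b}} form can be checked numerically in the same way as the decimal case. The sketch below is a minimal illustration; the function name <code>first_digit_distribution</code> is hypothetical, and the unary case is left out because a logarithm to base 1 is undefined.

```python
import math


def first_digit_distribution(base: int) -> dict:
    """Map each possible leading digit d (1 <= d < base) to log_base(1 + 1/d)."""
    if base < 2:
        raise ValueError("base must be at least 2")
    return {d: math.log(1 + 1 / d) / math.log(base) for d in range(1, base)}


if __name__ == "__main__":
    # Binary: the only possible leading digit is 1, so its probability is 1.
    print(first_digit_distribution(2))
    # Hexadecimal: fifteen possible leading digits, still skewed toward 1.
    for digit, p in first_digit_distribution(16).items():
        print(f"{digit:x}: {p:.3f}")
```

In base 2 the distribution collapses to a single entry with probability 1, which is the trivial case described above.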


==Examples== | ||

| ] of the world as of July 2010. Black dots indicate the distribution predicted by Benford's law.|thumb|right]] | ] of the world as of July 2010. Black dots indicate the distribution predicted by Benford's law.|thumb|right]] | ||


Examining a list of the heights of the ] shows that 1 is by far the most common leading digit, ''irrespective of the unit of measurement'' (see "scale invariance" below): | ||

{| class="wikitable" style="text-align:right"
!rowspan="2"| Leading<br/> digit
!colspan=2| m
!colspan=2| ft
!rowspan="2"| Per<br/> Benford's law
|-
! Count
! Share
! Count
! Share
|-
| 1
| 23
| 39.7 %
| 15
| 25.9 %
| 30.1 %
|-
| 2
| 12
| 20.7 %
| 8
| 13.8 %
| 17.6 %
|-
| 3
| 6
| 10.3 %
| 5
| 8.6 %
| 12.5 %
|-
| 4
| 5
| 8.6 %
| 7
| 12.1 %
| 9.7 %
|-
| 5
| 2
| 3.4 %
| 9
| 15.5 %
| 7.9 %
|-
| 6
| 5
| 8.6 %
| 4
| 6.9 %
| 6.7 %
|-
| 7
| 1
| 1.7 %
| 3
| 5.2 %
| 5.8 %
|-
| 8
| 4
| 6.9 %
| 6
| 10.3 %
| 5.1 %
|-
| 9
| 0
| 0 %
| 1
| 1.7 %
| 4.6 %
|}

| Another example is the leading digit of {{math|2<sup>''n''</sup>}}. The sequence of the first 96 leading digits (1, 2, 4, 8, 1, 3, 6, 1, 2, 5, 1, 2, 4, 8, 1, 3, 6, 1, ... {{OEIS|A008952}}) exhibits closer adherence to Benford’s law than is expected for random sequences of the same length, because it is derived from a geometric sequence.<ref name=":0" /> | |||

{| class="wikitable" style="text-align:right"
!rowspan="2"| Leading<br/> digit
!colspan=2| Occurrence
!rowspan="2"| Per<br/> Benford's law
|-
! Count
! Share
|-
| 1
| 29
| 30.2 %
| 30.1 %
|-
| 2
| 17
| 17.7 %
| 17.6 %
|-
| 3
| 12
| 12.5 %
| 12.5 %
|-
| 4
| 10
| 10.4 %
| 9.7 %
|-
| 5
| 7
| 7.3 %
| 7.9 %
|-
| 6
| 6
| 6.3 %
| 6.7 %
|-
| 7
| 5
| 5.2 %
| 5.8 %
|-
| 8
| 5
| 5.2 %
| 5.1 %
|-
| 9
| 5
| 5.2 %
| 4.6 %
|}
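The tally for the powers of two can be recomputed with exact integer arithmetic. The sketch below assumes the 96 terms are {{math|2<sup>0</sup>}} through {{math|2<sup>95</sup>}}, which matches the start of the sequence quoted above; the function name is hypothetical.

```python
from collections import Counter


def leading_digits_of_powers_of_two(count: int) -> list:
    """Leading decimal digit of 2**n for n = 0, 1, ..., count - 1."""
    # Python integers are arbitrary precision, so str() gives exact digits.
    return [int(str(2 ** n)[0]) for n in range(count)]


if __name__ == "__main__":
    digits = leading_digits_of_powers_of_two(96)
    tally = Counter(digits)
    for d in range(1, 10):
        print(f"{d}: {tally[d]:2d}  ({100 * tally[d] / len(digits):.1f} %)")
```

The printed counts can be compared row by row with the table.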

| Another example is the leading digit of {{math|2<sup>''n''</sup>}}: | |||

| :1, 2, 4, 8, 1, 3, 6, 1, 2, 5, 1, 2, 4, 8, 1, 3, 6, 1... {{OEIS|A008952}} | |||

| ==History== | ==History== | ||

The discovery of Benford's law goes back to 1881, when the Canadian-American astronomer Simon Newcomb noticed that in logarithm tables the earlier pages (that started with 1) were much more worn than the other pages.<ref name=Newcomb>{{Cite journal | author = Simon Newcomb | s2cid = 124556624 | author-link = Simon Newcomb | title = Note on the frequency of use of the different digits in natural numbers | journal = American Journal of Mathematics | volume = 4 | issue = 1/4 | year = 1881 | pages = 39–40 | doi = 10.2307/2369148 | jstor = 2369148 | bibcode = 1881AmJM....4...39N }}</ref> Newcomb's published result is the first known instance of this observation and includes a distribution on the second digit as well. Newcomb proposed a law that the probability of a single number ''N'' being the first digit of a number was equal to log(''N'' + 1) − log(''N''). | ||

| The phenomenon was again noted in 1938 by the physicist ],<ref name=Benford/> who tested it on data from 20 different domains and was credited for it. His data set included the surface areas of 335 rivers, the sizes of 3259 US populations, 104 ]s, 1800 ]s, 5000 entries from a mathematical handbook, 308 numbers contained in an issue of '']'', the street addresses of the first 342 persons listed in ''American Men of Science'' and 418 death rates. The total number of observations used in the paper was 20,229. This discovery was later named after Benford (making it an example of ]). | The phenomenon was again noted in 1938 by the physicist ],<ref name=Benford/> who tested it on data from 20 different domains and was credited for it. His data set included the surface areas of 335 rivers, the sizes of 3259 US populations, 104 ]s, 1800 ]s, 5000 entries from a mathematical handbook, 308 numbers contained in an issue of '']'', the street addresses of the first 342 persons listed in ''American Men of Science'' and 418 death rates. The total number of observations used in the paper was 20,229. This discovery was later named after Benford (making it an example of ]). | ||

| In 1995, ] proved the result about mixed distributions mentioned ].<ref name=Hill1995/> | In 1995, ] proved the result about mixed distributions mentioned ].<ref name=Hill1995/><ref name="Hill1995b">{{cite journal |last1=Hill |first1=Theodore P. |title=Base-invariance implies Benford's law |journal=Proceedings of the American Mathematical Society |volume=123 |issue=3 |year=1995 |pages=887–895 |issn=0002-9939 |doi=10.1090/S0002-9939-1995-1233974-8 |doi-access=free}}</ref> | ||

| ==Explanations== | ==Explanations== | ||

| Benford's law tends to apply most accurately to data that span several orders of magnitude. As a rule of thumb, the more orders of magnitude that the data evenly cover, the more accurately Benford's law applies. For instance, one can expect that Benford's law would apply to a list of numbers representing the populations of United Kingdom settlements. But if a "settlement" is defined as a village with population between 300 and 999, then Benford's law will not apply.<ref name="dspguide">{{cite book |chapter-url=http://www.dspguide.com/ch34.htm |title=The Scientist and Engineer's Guide to Digital Signal Processing |section=Chapter 34: Explaining Benford's Law. The Power of Signal Processing |author=Steven W. Smith |access-date=15 December 2012}}</ref><ref name="fewster">{{Cite journal|first=R. M. |last=Fewster |author-link=Rachel Fewster|s2cid=39595550 |title=A simple explanation of Benford's Law |journal=The American Statistician |year=2009 |volume=63 |issue=1 |pages=26–32 |doi=10.1198/tast.2009.0005 |url=https://www.stat.auckland.ac.nz/~fewster/RFewster_Benford.pdf |archive-url=https://ghostarchive.org/archive/20221009/https://www.stat.auckland.ac.nz/~fewster/RFewster_Benford.pdf |archive-date=2022-10-09 |url-status=live |citeseerx=10.1.1.572.6719}}</ref> | |||

| Consider the probability distributions shown below, referenced to a logarithmic scale. In each case, the total area in red is the relative probability that the first digit is 1, and the total area in blue is the relative probability that the first digit is 8. For the first distribution, the sizes of the red and blue areas are approximately proportional to the widths of each red and blue bar. Therefore, the numbers drawn from this distribution will approximately follow Benford's law. On the other hand, for the second distribution, the ratio of the areas of red and blue is very different from the ratio of the widths of each red and blue bar. Rather, the relative areas of red and blue are determined more by the heights of the bars than the widths. Accordingly, the first digits in this distribution do not satisfy Benford's law at all.<ref name=fewster /> | |||

| ===Overview=== | |||

| Benford's law tends to apply most accurately to data that are distributed uniformly across several orders of magnitude. As a rule of thumb, the more orders of magnitude that the data evenly covers, the more accurately Benford's law applies. For instance, one can expect that Benford's law would apply to a list of numbers representing the populations of UK settlements, or representing the values of small insurance claims. But if a "village" is defined as a settlement with population between 300 and 999, or a "small insurance claim" is defined as a claim between $50 and $99, then Benford's law will not apply.<ref name="dspguide">{{cite web|url=http://www.dspguide.com/ch34.htm |title=The Scientist and Engineer's Guide to Digital Signal Processing, chapter 34, Explaining Benford's Law |author=Steven W. Smith |accessdate=15 December 2012}} (especially ).</ref><ref name="fewster">{{Cite journal|first=R. M. |last=Fewster |title=A simple explanation of Benford's Law |journal=The American Statistician |year=2009 |volume=63 |issue=1 |pages=26–32 |doi=10.1198/tast.2009.0005 |postscript=<!--None--> |url=https://www.stat.auckland.ac.nz/~fewster/RFewster_Benford.pdf }}</ref> | |||

| Consider the probability distributions shown below, referenced to a ].<ref name="logscale">This section discusses and plots probability distributions of the logarithms of a variable. This is not the same as taking a regular probability distribution of a variable, and simply plotting it on a log scale. Instead, one multiplies the distribution by a certain function. The log scale distorts the horizontal distances, so the height has to be changed also, in order for the area under each section of the curve to remain true to the original distribution. See, for example, . Specifically: <math>P(\log x) d(\log x) = (1/x) P(\log x) dx</math>.</ref> | |||

| In each case, the total area in red is the relative probability that the first digit is 1, and the total area in blue is the relative probability that the first digit is 8. | |||

| {| | {| | ||

| ] | |] | ||

| | | | | ||

| ] | ] | ||

| |} | |} | ||

| Thus, real-world distributions that span several orders of magnitude rather uniformly (e.g., stock-market prices and populations of villages, towns, and cities) are likely to satisfy Benford's law very accurately. On the other hand, a distribution mostly or entirely within one order of magnitude (e.g., IQ scores or heights of human adults) is unlikely to satisfy Benford's law very accurately, if at all.<ref name=dspguide /><ref name=fewster /> However, the difference between applicable and inapplicable regimes is not a sharp cut-off: as the distribution gets narrower, the deviations from Benford's law increase gradually. | |||

| For the left distribution, the size of the ''areas'' of red and blue are approximately proportional to the ''widths'' of each red and blue bar. Therefore, the numbers drawn from this distribution will approximately follow Benford's law. On the other hand, for the right distribution, the ratio of the areas of red and blue is very different from the ratio of the widths of each red and blue bar. Rather, the relative areas of red and blue are determined more by the ''height'' of the bars than the widths. Accordingly, the first digits in this distribution do not satisfy Benford's law at all.<ref name=fewster /> | |||

| (This discussion is not a full explanation of Benford's law, because it has not explained why data sets are so often encountered that, when plotted as a probability distribution of the logarithm of the variable, are relatively uniform over several orders of magnitude.<ref name=BergerHillExplain>Arno Berger and Theodore P. Hill, , 2011. The authors describe this argument but say it "still leaves open the question of why it is reasonable to assume that the logarithm of the spread, as opposed to the spread itself—or, say, the log log spread—should be large" and that "assuming large spread on a logarithmic scale is ''equivalent'' to assuming an approximate conformance with " (italics added), something which they say lacks a "simple explanation".</ref>) | |||

| Thus, real-world distributions that span several ] rather uniformly (e.g. populations of villages / towns / cities, stock-market prices), are likely to satisfy Benford's law to a very high accuracy. On the other hand, a distribution that is mostly or entirely within one order of magnitude (e.g. heights of human adults, or IQ scores) is unlikely to satisfy Benford's law very accurately, or at all.<ref name=dspguide /><ref name=fewster /> However, it is not a sharp line: As the distribution gets narrower, the discrepancies from Benford's law ''typically'' increase gradually. | |||

| ===Krieger–Kafri entropy explanation=== | |||

| In terms of conventional ] (referenced to a linear scale rather than log scale, i.e. P(x)dx rather than P(log x) d(log x)), the equivalent criterion is that Benford's law will be very accurately satisfied when P(x) is approximately proportional to 1/x over several orders-of-magnitude variation in x.<ref name="logscale" /> | |||

| In 1970 ] proved what is now called the Krieger generator theorem.<ref name="Krieger1970">{{cite journal |last1=Krieger |first1=Wolfgang |title=On entropy and generators of measure-preserving transformations |journal=Transactions of the American Mathematical Society |volume=149 |issue=2 |year=1970 |pages=453 |issn=0002-9947 |doi=10.1090/S0002-9947-1970-0259068-3 |doi-access=free}}</ref><ref name="Downarowicz2011">{{cite book |author=Downarowicz, Tomasz |title=Entropy in Dynamical Systems |url=https://books.google.com/books?id=avUGMc787v8C&pg=PA106 |date=12 May 2011 |publisher=Cambridge University Press |isbn=978-1-139-50087-6 |page=106}}</ref> The Krieger generator theorem might be viewed as a justification for the assumption in the Kafri ball-and-box model that, in a given base <math>B</math> with a fixed number of digits 0, 1, ..., ''n'', ..., <math>B - 1</math>, digit ''n'' is equivalent to a Kafri box containing ''n'' non-interacting balls. Other scientists and statisticians have suggested entropy-related explanations{{which|date=October 2023}} for Benford's law.<ref>{{cite book |author=Smorodinsky, Meir |year=1971 |chapter=Chapter IX. Entropy and generators. Krieger's theorem |title=Ergodic Theory, Entropy |series=Lecture Notes in Mathematics |volume=214 |pages=54–57 |publisher=Springer |location=Berlin, Heidelberg |doi=10.1007/BFb0066096|isbn=978-3-540-05556-3 }}</ref><ref name="Jolion2001">{{cite journal |last1=Jolion |first1=Jean-Michel |title=Images and Benford's Law |journal=Journal of Mathematical Imaging and Vision |volume=14 |issue=1 |year=2001 |pages=73–81 |issn=0924-9907 |doi=10.1023/A:1008363415314 |bibcode=2001JMIV...14...73J |s2cid=34151059}}</ref><ref name="Miller2015">{{cite book |editor=Miller, Steven J. |editor-link=Steven J. 
Miller |title=Benford's Law: Theory and Applications |url=https://books.google.com/books?id=J_NnBgAAQBAJ&pg=309 |page=309 |date=9 June 2015 |publisher=Princeton University Press |isbn=978-1-4008-6659-5}}</ref><ref name="Lemons2019">{{cite journal |last1=Lemons |first1=Don S. |title=Thermodynamics of Benford's first digit law |journal=American Journal of Physics |volume=87 |issue=10 |year=2019 |pages=787–790 |issn=0002-9505 |doi=10.1119/1.5116005 |arxiv=1604.05715 |bibcode=2019AmJPh..87..787L |s2cid=119207367}}</ref> | |||

| This discussion is not a ''full'' explanation of Benford's law, because we have not explained ''why'' we so often come across data-sets that, when plotted as a probability distribution of the logarithm of the variable, are relatively uniform over several orders of magnitude.<ref name=BergerHillExplain>Arno Berger and Theodore P Hill, . The authors describe this argument, but say it "still leaves open the question of why it is reasonable to assume that the logarithm of the spread, as opposed to the spread itself—or, say, the log log spread—should be large." Moreover, they say: "assuming large spread on a logarithmic scale is ''equivalent'' to assuming an approximate conformance with " (italics added), something which they say lacks a "simple explanation".</ref> | |||

| ===Multiplicative fluctuations=== | ===Multiplicative fluctuations=== | ||


Many real-world examples of Benford's law arise from multiplicative fluctuations.<ref name=Pietronero>{{cite journal |title=Explaining the uneven distribution of numbers in nature: the laws of Benford and Zipf |author=L. Pietronero |author2=E. Tosatti |author3=V. Tosatti |author4=A. Vespignani |journal=Physica A |year=2001 |volume=293 |issue=1–2 |pages=297–304 |doi=10.1016/S0378-4371(00)00633-6 |bibcode = 2001PhyA..293..297P |arxiv=cond-mat/9808305}}</ref> For example, if a stock price starts at $100, and then each day it gets multiplied by a randomly chosen factor between 0.99 and 1.01, then over an extended period the probability distribution of its price satisfies Benford's law with higher and higher accuracy. | ||


The reason is that the ''logarithm'' of the stock price is undergoing a ], so over time its probability distribution will get more and more broad and smooth (see ]).<ref name=Pietronero/> (More technically, the ] says that multiplying more and more random variables will create a ] with larger and larger variance, so eventually it covers many orders of magnitude almost uniformly.) To be sure of approximate agreement with Benford's law, the distribution has to be approximately invariant when scaled up by any factor up to 10; a ]ly distributed data set with wide dispersion would have this approximate property. | ||


Unlike multiplicative fluctuations, ''additive'' fluctuations do not lead to Benford's law: They lead instead to ]s (again by the ]), which do not satisfy Benford's law. By contrast, that hypothetical stock price described above can be written as the ''product'' of many random variables (i.e. the price change factor for each day), so is ''likely'' to follow Benford's law quite well. | ||
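The stock-price argument can be illustrated with a small simulation. This is a sketch under stated assumptions, not a reproduction of any cited study: the daily factor is drawn uniformly from 0.9 to 1.1 rather than the 0.99 to 1.01 used in the text, purely so that the prices spread over several orders of magnitude in a short run, and the function names, walker count, step count, and seed are all arbitrary choices made here.

```python
import math
import random


def leading_digit(x: float) -> int:
    """First significant decimal digit of a positive number."""
    if x <= 0:
        raise ValueError("x must be positive")
    while x >= 10:
        x /= 10
    while x < 1:
        x *= 10
    return int(x)


def simulate_leading_digit_shares(walkers=2000, steps=1500,
                                  low=0.9, high=1.1, seed=0):
    """Share of final prices starting with each digit 1..9.

    Every walker starts at 100 and is multiplied by an independent
    uniform random factor in [low, high] at each step (assumed range).
    """
    rng = random.Random(seed)
    counts = [0] * 10
    for _ in range(walkers):
        price = 100.0
        for _ in range(steps):
            price *= rng.uniform(low, high)
        counts[leading_digit(price)] += 1
    return [c / walkers for c in counts[1:]]


if __name__ == "__main__":
    shares = simulate_leading_digit_shares()
    for d, share in enumerate(shares, start=1):
        print(f"{d}: simulated {share:.3f}, Benford {math.log10(1 + 1 / d):.3f}")
```

With a narrower factor range such as 0.99 to 1.01, the same agreement would be expected only after far more steps, since the logarithm of the price spreads much more slowly.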

| ===Multiple probability distributions=== | ===Multiple probability distributions=== | ||

Anton Formann provided an alternative explanation by directing attention to the interrelation between the distribution of the significant digits and the distribution of the ]. He showed in a simulation study that long-right-tailed distributions of a random variable are compatible with the Newcomb–Benford law, and that for distributions of the ratio of two random variables the fit generally improves.<ref>{{cite journal |last1=Formann |first1=A. K. |year=2010 |title=The Newcomb–Benford law in its relation to some common distributions |journal=PLOS ONE |volume=5 |issue=5 |page=e10541 |doi=10.1371/journal.pone.0010541 |pmid=20479878 |pmc=2866333 |bibcode=2010PLoSO...510541F |doi-access=free}}</ref> For numbers drawn from certain distributions (IQ scores, human heights) Benford's law fails to hold because these variates obey a normal distribution, which is known not to satisfy Benford's law,<ref name=Formann2010>{{Cite journal | ||

| | last1 = Formann | first1 = A. K. | | last1 = Formann | first1 = A. K. | ||


| title = The Newcomb–Benford Law in Its Relation to Some Common Distributions | ||

| | doi = 10.1371/journal.pone.0010541 | | doi = 10.1371/journal.pone.0010541 | ||


| journal = PLOS ONE | ||

| | volume = 5 | | volume = 5 | ||

| | issue = 5 | | issue = 5 | ||

| Line 186: | Line 236: | ||

| | editor1-last = Morris | | editor1-last = Morris | ||

| | editor1-first = Richard James | | editor1-first = Richard James | ||

| | bibcode = 2010PLoSO...510541F | |||

| |bibcode = 2010PLoSO...510541F }}</ref> since normal distributions can't span several orders of magnitude and the ] of their logarithms will not be (even approximately) uniformly distributed. | |||

| | doi-access = free | |||

| However, if one "mixes" numbers from those distributions, for example by taking numbers from newspaper articles, Benford's law reappears. This can also be proven mathematically: if one repeatedly "randomly" chooses a ] (from an uncorrelated set) and then randomly chooses a number according to that distribution, the resulting list of numbers will obey Benford's Law.<ref name=Hill1995>{{Cite journal | |||

| }}</ref> since normal distributions can't span several orders of magnitude and the ] of their logarithms will not be (even approximately) uniformly distributed. However, if one "mixes" numbers from those distributions, for example, by taking numbers from newspaper articles, Benford's law reappears. This can also be proven mathematically: if one repeatedly "randomly" chooses a ] (from an uncorrelated set) and then randomly chooses a number according to that distribution, the resulting list of numbers will obey Benford's law.<ref name=Hill1995>{{Cite journal | |||

| | author = Theodore P. Hill | | author = Theodore P. Hill | ||

| | author-link = Theodore P. Hill | | author-link = Theodore P. Hill | ||

| Line 193: | Line 244: | ||

| | journal = Statistical Science | | journal = Statistical Science | ||

| | volume = 10 | | volume = 10 | ||

| | issue = 4 | |||

| | pages = 354–363 | | pages = 354–363 | ||

| | year = 1995 | | year = 1995 | ||

| Line 198: | Line 250: | ||

| | doi = 10.1214/ss/1177009869 | | doi = 10.1214/ss/1177009869 | ||

| | mr = 1421567 | | mr = 1421567 | ||

| | doi-access = free | |||

| |format=PDF}}</ref><ref name=Hill1998>{{Cite journal | |||

| }}</ref><ref name=Hill1998>{{Cite journal | |||

| | author = Theodore P. Hill | | author = Theodore P. Hill | ||

| | author-link = Theodore P. Hill | | author-link = Theodore P. Hill | ||

| Line 204: | Line 257: | ||

| | journal = ] | | journal = ] | ||

| | volume = 86 | | volume = 86 | ||

| |date=July–August 1998 | | date = July–August 1998 | ||

| | page = 358 | | page = 358 | ||

| | url = http://people.math.gatech.edu/~hill/publications/PAPER%20PDFS/TheFirstDigitPhenomenonAmericanScientist1996.pdf | | url = http://people.math.gatech.edu/~hill/publications/PAPER%20PDFS/TheFirstDigitPhenomenonAmericanScientist1996.pdf | ||

| |format=PDF | |||

| | bibcode = 1998AmSci..86..358H | | bibcode = 1998AmSci..86..358H | ||

| | doi = 10.1511/1998.4.358 | | doi = 10.1511/1998.4.358 | ||

| | issue = 4| s2cid = 13553246 | |||

| | issue = 4}}</ref> A similar probabilistic explanation for the appearance of Benford's Law in everyday-life numbers has been advanced by showing that it arises naturally when one considers mixtures of uniform distributions.<ref>Élise Janvresse and Thierry de la Rue (2004), "From Uniform Distributions to Benford's Law", ''Journal of Applied Probability'', 41 1203–1210 {{doi|10.1239/jap/1101840566}} {{MR|2122815}} </ref> | |||

| }}</ref> A similar probabilistic explanation for the appearance of Benford's law in everyday-life numbers has been advanced by showing that it arises naturally when one considers mixtures of uniform distributions.<ref>{{cite journal | last1 = Janvresse | first1 = Élise | last2 = Thierry | year = 2004 | title = From Uniform Distributions to Benford's Law | url = http://lmrs.univ-rouen.fr/Persopage/Delarue/Publis/PDF/uniform_distribution_to_Benford_law.pdf | journal = Journal of Applied Probability | volume = 41 | issue = 4 | pages = 1203–1210 | doi = 10.1239/jap/1101840566 | mr = 2122815 | access-date = 13 August 2015 | archive-url = https://web.archive.org/web/20160304125725/http://lmrs.univ-rouen.fr/Persopage/Delarue/Publis/PDF/uniform_distribution_to_Benford_law.pdf | archive-date = 4 March 2016 | url-status = dead }}</ref> | |||

| === |

===Invariance=== | ||

| In a list of lengths, the distribution of first digits of numbers in the list may be generally similar regardless of whether all the lengths are expressed in metres, yards, feet, inches, etc. The same applies to monetary units. | |||

| This is not |

This is not always the case. For example, the height of adult humans almost always starts with a 1 or 2 when measured in metres and almost always starts with 4, 5, 6, or 7 when measured in feet. But in a list of lengths spread evenly over many orders of magnitude—for example, a list of 1000 lengths mentioned in scientific papers that includes the measurements of molecules, bacteria, plants, and galaxies—it is reasonable to expect the distribution of first digits to be the same no matter whether the lengths are written in metres or in feet. | ||

| When the distribution of the first digits of a data set is scale-invariant (independent of the units that the data are expressed in), it is always given by Benford's law.<ref name=Pinkham>{{cite journal | last1 = Pinkham | first1 = Roger S. | year = 1961 | title = On the Distribution of First Significant Digits | url = http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.aoms/1177704862 | journal = Ann. Math. Statist. | volume = 32 | issue = 4 | pages = 1223–1230 | doi = 10.1214/aoms/1177704862 | doi-access = free }}</ref><ref name="wolfram">{{Cite web |url=https://mathworld.wolfram.com/BenfordsLaw.html |title=Benford's Law |first=Eric W. |last=Weisstein |website=mathworld.wolfram.com}}</ref> | |||

| But consider a list of lengths that is spread evenly over many orders of magnitude. For example, a list of 1000 lengths mentioned in scientific papers will include the measurements of molecules, bacteria, plants, and galaxies. If one writes all those lengths in meters, or writes them all in feet, it is reasonable to expect that the distribution of first digits should be the same on the two lists. | |||

| For example, the first (non-zero) digit on the aforementioned list of lengths should have the same distribution whether the unit of measurement is feet or yards. But there are three feet in a yard, so the probability that the first digit of a length in yards is 1 must be the same as the probability that the first digit of a length in feet is 3, 4, or 5; similarly, the probability that the first digit of a length in yards is 2 must be the same as the probability that the first digit of a length in feet is 6, 7, or 8. Applying this to all possible measurement scales gives the logarithmic distribution of Benford's law. | |||
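The feet-and-yards argument can be checked numerically against the logarithmic distribution. The sketch below assumes only the first-digit formula P(''d'') = log<sub>10</sub>(1 + 1/''d''); the function name `benford` is an illustrative choice.

```python
import math


def benford(d: int) -> float:
    """Benford probability that the first significant digit equals d."""
    return math.log10(1 + 1 / d)


# A length whose first digit in yards is 1 lies in [1, 2) yards (times a
# power of ten), i.e. in [3, 6) feet, so its first digit in feet is 3, 4 or 5.
p_yards_1 = benford(1)
p_feet_3_to_5 = benford(3) + benford(4) + benford(5)

# Likewise [2, 3) yards corresponds to [6, 9) feet: first digit 6, 7 or 8.
p_yards_2 = benford(2)
p_feet_6_to_8 = benford(6) + benford(7) + benford(8)

print(p_yards_1, p_feet_3_to_5)
print(p_yards_2, p_feet_6_to_8)
```

Both pairs agree because the sums telescope: log<sub>10</sub>(4/3) + log<sub>10</sub>(5/4) + log<sub>10</sub>(6/5) = log<sub>10</sub> 2, and log<sub>10</sub>(7/6) + log<sub>10</sub>(8/7) + log<sub>10</sub>(9/8) = log<sub>10</sub>(3/2).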

| In these situations, where the distribution of first digits of a data set is ] (or independent of the units that the data are expressed in), the distribution of first digits is always given by Benford's Law.<ref name=Pinkham>Roger S. Pinkham, , Ann. Math. Statist. Volume 32, Number 4 (1961), 1223-1230.</ref><ref name=wolfram></ref> | |||

| Benford's law for first digits is ] invariant for number systems. There are conditions and proofs of sum invariance, inverse invariance, and addition and subtraction invariance.<ref>{{Cite web |last=Jamain |first=Adrien |date=September 2001 |title=Benford's Law |url=https://wwwf.imperial.ac.uk/~nadams/classificationgroup/Benfords-Law.pdf |archive-url=https://ghostarchive.org/archive/20221009/https://wwwf.imperial.ac.uk/~nadams/classificationgroup/Benfords-Law.pdf |archive-date=2022-10-09 |url-status=live |access-date=2020-11-15 |website=Imperial College of London}}</ref><ref>{{Cite journal |last=Berger |first=Arno |date=June 2011 |title=A basic theory of Benford's Law |url=https://projecteuclid.org/download/pdfview_1/euclid.ps/1311860830 |journal=Probability Surveys |volume=8 (2011) |pages=1–126}}</ref> | |||

| For example, the first (non-zero) digit on this list of lengths should have the same distribution whether the unit of measurement is feet or yards. But there are three feet in a yard, so the probability that the first digit of a length in yards is 1 must be the same as the probability that the first digit of a length in feet is 3, 4, or 5; similarly the probability that the first digit of a length in yards is 2 must be the same as the probability that the first digit of a length in feet is 6, 7, or 8. Applying this to all possible measurement scales gives the logarithmic distribution of Benford's law. | |||

| ==Applications== | ==Applications== | ||

| ===Accounting fraud detection=== | ===Accounting fraud detection=== | ||

| In 1972, ] suggested that the law could be used to detect possible ] in lists of socio-economic data submitted in support of public planning decisions. Based on the plausible assumption that people who |

In 1972, ] suggested that the law could be used to detect possible ] in lists of socio-economic data submitted in support of public planning decisions. Based on the plausible assumption that people who fabricate figures tend to distribute their digits fairly uniformly, a simple comparison of first-digit frequency distribution from the data with the expected distribution according to Benford's law ought to show up any anomalous results.<ref>{{Cite journal|first=Hal |last=Varian |author-link=Hal Varian |title=Benford's Law (Letters to the Editor) |journal=]|year=1972 |issue=3 |volume=26 |page=65 |doi=10.1080/00031305.1972.10478934}}</ref> | ||

| === |

===Use in criminal trials=== | ||

| In the United States, evidence based on Benford's law has been admitted in criminal cases at the federal, state, and local levels.<ref>{{cite episode| url = |

In the United States, evidence based on Benford's law has been admitted in criminal cases at the federal, state, and local levels.<ref>{{cite episode| url = https://www.wnycstudios.org/story/91699-from-benford-to-erdos| title =From Benford to Erdös | series = Radio Lab | series-link = Radio Lab | airdate = 2009-09-30 | number = 2009-10-09}}</ref> | ||

| ===Election data=== | ===Election data=== | ||

| ], a political scientist and statistician at the University of Michigan, was the first to apply the second-digit Benford's law-test (2BL-test) in ].<ref>Walter R. Mebane, Jr., "" (July 18, 2006).</ref> Such analysis is considered a simple, though not foolproof, method of identifying irregularities in election results.<ref>"", '']'' (February 22, 2007).</ref> Scientific consensus to support the applicability of Benford's law to elections has not been reached in the literature. A 2011 study by the political scientists Joseph Deckert, Mikhail Myagkov, and ] argued that Benford's law is problematic and misleading as a statistical indicator of election fraud.<ref>{{cite journal |last1=Deckert |first1=Joseph |last2=Myagkov |first2=Mikhail |last3=Ordeshook |first3=Peter C. |title=Benford's Law and the Detection of Election Fraud |journal=Political Analysis |date=2011 |volume=19 |issue=3 |pages=245–268 |doi=10.1093/pan/mpr014 |url=https://www.cambridge.org/core/journals/political-analysis/article/benfords-law-and-the-detection-of-election-fraud/3B1D64E822371C461AF3C61CE91AAF6D |language=en |issn=1047-1987|doi-access=free }}</ref> Their method was criticized by Mebane in a response, though he agreed that there are many caveats to the application of Benford's law to election data.<ref>{{cite journal |last1=Mebane |first1=Walter R. |title=Comment on "Benford's Law and the Detection of Election Fraud" |journal=Political Analysis |date=2011 |volume=19 |issue=3 |pages=269–272 |doi=10.1093/pan/mpr024 |url=https://www.cambridge.org/core/journals/political-analysis/article/comment-on-benfords-law-and-the-detection-of-election-fraud/BC29680D8B5469A54C7C9D865029FE7C |language=en |doi-access=free }}</ref> | |||

| Benford's Law has been invoked as evidence of fraud in the ],<ref>Stephen Battersby ''New Scientist'' 24 June 2009</ref> and also used to analyze other election results. However, other experts consider Benford's Law essentially useless as a statistical indicator of election fraud in general.<ref>Joseph Deckert, Mikhail Myagkov and Peter C. Ordeshook, (2010) '' {{webarchive |url=https://web.archive.org/web/20140517120934/http://vote.caltech.edu/sites/default/files/benford_pdf_4b97cc5b5b.pdf |date=17 May 2014 }}'', Caltech/MIT Voting Technology Project Working Paper No. 9</ref><ref>Charles R. Tolle, Joanne L. Budzien, and Randall A. LaViolette (2000) '']'', Chaos 10, 2, pp.331–336 (2000); {{doi|10.1063/1.166498}}</ref> | |||

| Benford's law ] in the ].<ref>Stephen Battersby ''New Scientist'' 24 June 2009</ref> An analysis by Mebane found that the second digits in vote counts for President ], the winner of the election, tended to differ significantly from the expectations of Benford's law, and that the ballot boxes with very few ] had a greater influence on the results, suggesting widespread ].<ref>Walter R. Mebane, Jr., "" (University of Michigan, June 29, 2009), pp. 22–23.</ref> Another study used ] simulations to find that the candidate ] received almost twice as many vote counts beginning with the digit 7 as would be expected according to Benford's law,<ref>{{cite journal |doi=10.1080/02664763.2013.838664 |arxiv=0906.2789|title=A first-digit anomaly in the 2009 Iranian presidential election|year=2014|last1=Roukema|first1=Boudewijn F.|journal=Journal of Applied Statistics|volume=41|pages=164–199|bibcode=2014JApS...41..164R|s2cid=88519550}}</ref> while an analysis from ] concluded that the probability that a fair election would produce both too few non-adjacent digits and the suspicious deviations in last-digit frequencies as found in the 2009 Iranian presidential election is less than 0.5 percent.<ref>Bernd Beber and Alexandra Scacco, "", '']'' (June 20, 2009).</ref> Benford's law has also been applied for forensic auditing and fraud detection on data from the ],<ref>Mark J. Nigrini, ''Benford's Law: Applications for Forensic Accounting, Auditing, and Fraud Detection'' (Hoboken, NJ: Wiley, 2012), pp. 132–35.</ref> the ] and ]s,<ref name="election-forensics">Walter R. Mebane, Jr., "Election Forensics: The Second-Digit Benford's Law Test and Recent American Presidential Elections" in ''Election Fraud: Detecting and Deterring Electoral Manipulation'', edited by R. Michael Alvarez et al. (Washington, D.C.: Brookings Institution Press, 2008), pp. 162–81. 
</ref> and the ];<ref>{{cite journal|first1=Susumu |last1=Shikano |first2=Verena |last2=Mack |title=When Does the Second-Digit Benford's Law-Test Signal an Election Fraud? Facts or Misleading Test Results |journal=Jahrbücher für Nationalökonomie und Statistik |date=2011 |pages=719–732 |volume=231 |issue=5–6 |doi=10.1515/jbnst-2011-5-610 |s2cid=153896048 }}</ref> the Benford's Law Test was found to be "worth taking seriously as a statistical test for fraud," although "is not sensitive to distortions we know significantly affected many votes."<ref name="election-forensics"/>{{Explain|date=November 2020|reason=What were the results? Was fraud detected?}} | |||

| Benford's law has also been misapplied to claim election fraud. When applying the law to ]'s election returns for ], ], and other localities in the ], the distribution of the first digit did not follow Benford's law. The misapplication was a result of looking at data that was tightly bound in range, which violates the assumption inherent in Benford's law that the range of the data be large. The first digit test was applied to precinct-level data, but because precincts rarely receive more than a few thousand votes or fewer than several dozen, Benford's law cannot be expected to apply. According to Mebane, "It is widely understood that the first digits of precinct vote counts are not useful for trying to diagnose election frauds."<ref>{{cite news|url=https://www.reuters.com/article/uk-factcheck-benford/fact-check-deviation-from-benfords-law-does-not-prove-election-fraud-idUSKBN27Q3AI|title=Fact check: Deviation from Benford's Law does not prove election fraud|date=November 10, 2020|work=]}}</ref><ref>{{cite web|url=https://physicsworld.com/a/benfords-law-and-the-2020-us-presidential-election-nothing-out-of-the-ordinary/|title=Benford's law and the 2020 US presidential election: nothing out of the ordinary|first= James|last= Dacey|date=November 19, 2020|publisher=]}}</ref> | |||

| ===Macroeconomic data=== | ===Macroeconomic data=== | ||

| Similarly, the macroeconomic data the Greek government reported to the European Union before entering the ] was shown to be probably fraudulent using Benford's law, albeit years after the country joined.<ref> |

Similarly, the macroeconomic data the Greek government reported to the European Union before entering the ] was shown to be probably fraudulent using Benford's law, albeit years after the country joined.<ref>William Goodman, , '']'', Royal Statistical Society (June 2016), p. 38.</ref><ref name="Goldacre">{{cite news | title= The special trick that helps identify dodgy stats |url= https://www.theguardian.com/commentisfree/2011/sep/16/bad-science-dodgy-stats| last= Goldacre| first= Ben| author-link= Ben Goldacre | date= 16 September 2011| work= ] | access-date= 1 February 2019}}</ref> | ||

| === Price digit analysis === | === Price digit analysis === | ||

| Benford's law |

Researchers have used Benford's law to detect psychological pricing patterns in a Europe-wide study of consumer product prices before and after the euro was introduced in 2002.<ref>{{Cite journal|last1=Sehity|first1=Tarek el|last2=Hoelzl|first2=Erik|last3=Kirchler|first3=Erich|date=2005-12-01|title=Price developments after a nominal shock: Benford's Law and psychological pricing after the euro introduction|journal=International Journal of Research in Marketing|volume=22|issue=4|pages=471–480|doi=10.1016/j.ijresmar.2005.09.002|s2cid=154273305 }}</ref> The idea was that, without psychological pricing, the first two or three digits of item prices should follow Benford's law. Consequently, if the distribution of digits deviates from Benford's law (for example, by containing an excess of 9s), this suggests that merchants may have used psychological pricing. | ||

| When the euro replaced local currencies in 2002, for a brief period the prices of goods in euros were simply converted from their prices in local currencies before the replacement. As it is essentially impossible to use psychological pricing simultaneously on both the euro price and the local-currency price, psychological pricing would be disrupted during the transition period even if it had been present before. It could only be re-established once consumers had become used to prices in a single currency again, this time in euros. | |||

| As the researchers expected, the distribution of the first price digit followed Benford's law, but the distributions of the second and third digits deviated significantly from Benford's law before the introduction, deviated less during the introduction, and deviated more again after the introduction. | |||

| ===Genome data=== | ===Genome data=== | ||

| The number of ]s and their relationship to genome size differs between ]s and ]s with the former showing a log-linear relationship and the latter a linear relationship. Benford's law has been used to test this observation with an excellent fit to the data in both cases.<ref name=Friar2012>{{cite journal | last1 = Friar | first1 = JL | last2 = Goldman | first2 = T | last3 = Pérez-Mercader | first3 = J | year = 2012 | title = Genome sizes and the benford distribution | journal = PLOS ONE | volume = 7 | issue = 5| page = e36624 | doi = 10.1371/journal.pone.0036624 |arxiv = 1205.6512 |bibcode = 2012PLoSO...736624F | pmid=22629319 | pmc=3356352}}</ref> | The number of ]s and their relationship to genome size differs between ]s and ]s with the former showing a log-linear relationship and the latter a linear relationship. Benford's law has been used to test this observation with an excellent fit to the data in both cases.<ref name=Friar2012>{{cite journal | last1 = Friar | first1 = JL | last2 = Goldman | first2 = T | last3 = Pérez-Mercader | first3 = J | year = 2012 | title = Genome sizes and the benford distribution | journal = PLOS ONE | volume = 7 | issue = 5| page = e36624 | doi = 10.1371/journal.pone.0036624 |arxiv = 1205.6512 |bibcode = 2012PLoSO...736624F | pmid=22629319 | pmc=3356352| doi-access = free }}</ref> | ||

| ===Scientific fraud detection=== | ===Scientific fraud detection=== | ||

| A test of regression coefficients in published papers showed agreement with Benford's law.<ref name=Diekmann2007>Diekmann A |

A test of regression coefficients in published papers showed agreement with Benford's law.<ref name=Diekmann2007>{{cite journal | last1 = Diekmann | first1 = A | s2cid = 117402608 | year = 2007 | title = Not the First Digit! Using Benford's Law to detect fraudulent scientific data | journal = J Appl Stat | volume = 34 | issue = 3| pages = 321–329 | doi = 10.1080/02664760601004940 | bibcode = 2007JApSt..34..321D | hdl = 20.500.11850/310246 | hdl-access = free }}</ref> As a comparison group, subjects were asked to fabricate statistical estimates. The fabricated results conformed to Benford's law on first digits, but failed to obey Benford's law on second digits. | ||

| === Academic publishing networks === | |||

| The number of published scientific papers of all registered researchers in Slovenia's national database was shown to conform strongly to Benford's law.<ref>{{Cite journal |last1=Tošić |first1=Aleksandar |last2=Vičič |first2=Jernej |date=2021-08-01 |title=Use of Benford's law on academic publishing networks |url=https://www.sciencedirect.com/science/article/pii/S1751157721000341 |journal=Journal of Informetrics |volume=15 |issue=3 |pages=101163 |doi=10.1016/j.joi.2021.101163 |issn=1751-1577}}</ref> Moreover, when the authors were grouped by scientific field, tests indicated that the natural sciences exhibit greater conformity than the social sciences. | |||

| ==Statistical tests== | ==Statistical tests== | ||

| Although the ] |

Although the chi-squared test has been used to test for compliance with Benford's law, it has low statistical power when used with small samples. | ||

| The ] and the ] are more powerful when the sample size is small particularly when Stephens's corrective factor is used.<ref name=Stephens1970>{{cite journal |last=Stephens |first=M. A. |year=1970 |title=Use of the Kolmogorov–Smirnov, |

The ] and the ] are more powerful when the sample size is small, particularly when Stephens's corrective factor is used.<ref name=Stephens1970>{{cite journal |last=Stephens |first=M. A. |year=1970 |title=Use of the Kolmogorov–Smirnov, Cramér–von Mises and related statistics without extensive tables |journal=] |volume=32 |issue=1 |pages=115–122 |doi=10.1111/j.2517-6161.1970.tb00821.x }}</ref> These tests may be unduly conservative when applied to discrete distributions. Values for the Benford test have been generated by Morrow.<ref name=Morrow2014>{{cite book |last = Morrow |first = John |title = Benford's Law, families of distributions and a test basis |location = London, UK |access-date = 2022-03-11 |date = August 2014 |url = http://cep.lse.ac.uk/_new/publications/series.asp?prog=CEP}}</ref> The critical values of the test statistics are shown below: | ||

| {| class="wikitable" | :{| class="wikitable" style="text-align:center;" | ||

| ! {{diagonal split header|Test| {{mvar|⍺}} }} | |||

| |- | |||

| ! {{diagonal split header|Test|''α''}} | |||

| ! 0.10 | ! 0.10 | ||

| ! 0.05 | ! 0.05 | ||

| ! 0.01 | ! 0.01 | ||

| |- | |- | ||

| | Kuiper |

| Kuiper | ||

| | 1.191 | | 1.191 | ||

| | 1.321 | | 1.321 | ||

| Line 271: | Line 334: | ||

| These critical values provide the minimum test statistic values required to reject the hypothesis of compliance with Benford's law at the given ]s. | These critical values provide the minimum test statistic values required to reject the hypothesis of compliance with Benford's law at the given ]s. | ||

| Two alternative tests specific to this law have been published: |

Two alternative tests specific to this law have been published: First, the max ({{mvar|m}}) statistic<ref name=Leemis2000>{{cite journal |last1=Leemis |first1=L. M. |last2=Schmeiser |first2=B. W. |last3=Evans |first3=D. L. |year=2000 |title=Survival distributions satisfying Benford's Law |journal=The American Statistician |volume=54 |issue=4 |pages=236–241 |doi=10.1080/00031305.2000.10474554 |s2cid=122607770}}</ref> is given by | ||

| :<math>m = \sqrt{N}\cdot\max_{i=1}^{9} \Big\{\big|\Pr (X \text{ has FSD}=i)-\log_{10}(1+1/i)\big| \Big\}</math> | |||

| and secondly, the distance (''d'') statistic<ref name=Cho2007>{{cite journal |last1=Cho |first1=W. K. T. |last2=Gaines |first2=B. J. |year=2007 |title=Breaking the (Benford) law: Statistical fraud detection in campaign finance |journal=The American Statistician |volume=61 |pages=218–223 |doi=10.1198/000313007X223496 |issue=3}}</ref> is given by | |||

| :<math>d= \sqrt{N \cdot \sum_{i=1}^{9}\Big[\Pr (X \text{ has FSD}=i)-\log_{10}(1+1/i)\Big]^{2}},</math> | |||

| where FSD is the First Significant Digit and {{mvar|N}} is the sample size. Morrow has determined the critical values for both these statistics, which are shown below:<ref name="Morrow2010" /> | |||

| : <math>m = \sqrt{N} \cdot \max_{k=1}^9 \left\{\left|\Pr\left(X \text{ has FSD} = k\right) - \log_{10}\left(1 + \frac{1}{k}\right)\right|\right\}.</math> | |||

| {| class="wikitable" | |||

| |- | |||

| The leading factor <math>\sqrt{N}</math> does not appear in the original formula by Leemis;<ref name=Leemis2000/> it was added by Morrow in a later paper.<ref name=Morrow2014/> | |||

| ! {{diagonal split header|Statistic|''⍺''}} | |||

| Secondly, the distance ({{mvar|d}}) statistic<ref name=Cho2007>{{cite journal |last1=Cho |first1=W. K. T. |last2=Gaines |first2=B. J. |year=2007 |title=Breaking the (Benford) law: Statistical fraud detection in campaign finance |journal=The American Statistician |volume=61 |issue=3 |pages=218–223 |doi=10.1198/000313007X223496 |s2cid=7938920 }}</ref> is given by | |||

| : <math>d = \sqrt{N \cdot \sum_{l=1}^9 \left[\Pr\left(X \text{ has FSD} = l\right) - \log_{10}\left(1 + \frac{1}{l}\right)\right]^2},</math> | |||

| where FSD is the first significant digit and {{mvar|N}} is the sample size. Morrow has determined the critical values for both these statistics, which are shown below:<ref name=Morrow2014/> | |||

| :{| class="wikitable" style="text-align:center;" | |||

| ! {{diagonal split header|Statistic|{{mvar|⍺}}}} | |||

| ! 0.10 | ! 0.10 | ||

| ! 0.05 | ! 0.05 | ||

| ! 0.01 | ! 0.01 | ||

| |- | |- | ||

| | Leemis' |

| Leemis's {{mvar|m}} | ||

| | 0.851 | | 0.851 | ||

| | 0.967 | | 0.967 | ||

| | 1.212 | | 1.212 | ||

| |- | |- | ||

| | Cho & Gaines's {{mvar|d}} | |||

| | Cho–Gaines' ''d'' | |||

| | 1.212 | | 1.212 | ||

| | 1.330 | | 1.330 | ||

| Line 295: | Line 363: | ||

| |} | |} | ||
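The ''m'' and ''d'' statistics defined above can be computed directly from first-digit frequencies. The following is a minimal sketch, not a reference implementation: it uses the 0.05-level critical values from the table above, takes the observed relative frequency as the estimate of Pr(''X'' has FSD = ''k''), and uses two illustrative samples chosen here as assumptions (the first 1000 Fibonacci numbers and the integers 100 to 999, whose first digits are uniform). All function names are hypothetical.

```python
import math

# Critical values at the 0.05 level, as listed in the table above.
M_CRIT_05 = 0.967
D_CRIT_05 = 1.330

BENFORD = [math.log10(1 + 1 / k) for k in range(1, 10)]


def first_digit_frequencies(values):
    """Relative frequency of first digits 1..9 for positive integers."""
    counts = [0] * 9
    for v in values:
        counts[int(str(v)[0]) - 1] += 1
    return [c / len(values) for c in counts]


def m_and_d(values):
    """Return the (m, d) statistics for a sample of positive integers."""
    n = len(values)
    diffs = [f - p for f, p in zip(first_digit_frequencies(values), BENFORD)]
    m = math.sqrt(n) * max(abs(x) for x in diffs)
    d = math.sqrt(n * sum(x * x for x in diffs))
    return m, d


def fibonacci(count):
    """First `count` Fibonacci numbers, starting 1, 1, 2, 3, ..."""
    a, b = 1, 1
    out = []
    for _ in range(count):
        out.append(a)
        a, b = b, a + b
    return out


fib_m, fib_d = m_and_d(fibonacci(1000))          # expected to comply
uni_m, uni_d = m_and_d(list(range(100, 1000)))   # uniform first digits

print("Fibonacci:", fib_m, fib_d)
print("Uniform:  ", uni_m, uni_d)
```

A statistic above its critical value leads to rejection of compliance at that significance level; in this sketch only the uniform sample is rejected.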

| Morrow has also shown that for any random variable {{mvar|X}} (with a continuous ]) divided by its standard deviation ({{mvar|σ}}), some value {{mvar|A}} can be found so that the probability of the distribution of the first significant digit of the random variable <math>|X/\sigma|^A</math> will differ from Benford's law by less than {{nobr|{{mvar|ε}} > 0.}}<ref name=Morrow2014/> The value of {{mvar|A}} depends on the value of {{mvar|ε}} and the distribution of the random variable. | |||

| Nigrini<ref name=Nigrini1996>{{cite journal |last=Nigrini |first=M. |year=1996 |title=A taxpayer compliance application of Benford's Law |journal=J Amer Tax Assoc |volume=18 |pages=72–91}}</ref> has suggested the use of a ] | |||

| A method of accounting fraud detection based on bootstrapping and regression has been proposed.<ref name=Suh2011>{{cite journal |last1=Suh |first1=I. S. |last2=Headrick |first2=T. C. |last3=Minaburo |first3=S. |year=2011 |title=An effective and efficient analytic technique: A bootstrap regression procedure and Benford's Law |journal=J. Forensic & Investigative Accounting |volume=3 |issue=3}}</ref> | |||

| : <math> z = \frac { \, | p_o - p_e | - \frac{1}{2n} \, } { s_i } </math> | |||

| with | |||

| : <math> s_i = \left[ \frac{ p_e ( 1 - p_e ) }{n} \right]^{1/2}, </math> | |||

| If the goal is to conclude agreement with Benford's law rather than disagreement, then the goodness-of-fit tests mentioned above are inappropriate. In this case, specific tests for equivalence should be applied. An empirical distribution is called equivalent to Benford's law if a distance (for example, the total variation distance or the usual Euclidean distance) between the probability mass functions is sufficiently small. This method of testing, with application to Benford's law, is described in Ostrovski.<ref>{{cite journal |last=Ostrovski |first=Vladimir |date=May 2017 |title=Testing equivalence of multinomial distributions |journal=Statistics & Probability Letters |volume=124 |pages=77–82 |doi=10.1016/j.spl.2017.01.004 |s2cid=126293429 |url=https://www.researchgate.net/publication/312481284 }}</ref> | |||

| where |''x''| is the absolute value of ''x'', ''n'' is the sample size, {{sfrac|1|2''n''}} is a continuity correction factor, ''p''<sub>e</sub> is the proportion expected from Benford's law and ''p''<sub>o</sub> is the observed proportion in the sample. | |||
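As a worked illustration of the ''z'' statistic, the sketch below evaluates it for a hypothetical sample of 1000 values in which 35% begin with the digit 1. The sample figures and the function name are assumptions made for this example. Comparing the result with 1.96, the usual two-sided 5% point of the standard normal distribution, is likewise an assumption about how the statistic would be used rather than a statement from the source.

```python
import math


def nigrini_z(p_observed: float, p_expected: float, n: int) -> float:
    """z statistic with the 1/(2n) continuity correction, as defined above."""
    s = math.sqrt(p_expected * (1 - p_expected) / n)
    return (abs(p_observed - p_expected) - 1 / (2 * n)) / s


# Hypothetical sample: n = 1000 values, 35% of which start with digit 1.
p_e = math.log10(1 + 1 / 1)   # Benford proportion for digit 1, about 0.301
z = nigrini_z(0.35, p_e, 1000)

print(round(z, 2))            # roughly 3.34 for these assumed inputs
print(z > 1.96)               # exceeds the assumed 5% threshold
```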

| ==Range of applicability== | |||

| Morrow has also shown that for any random variable ''X'' (with a continuous pdf) divided by its standard deviation (''σ''), a value ''A'' can be found such that the probability of the distribution of the first significant digit of the random variable ({{sfrac|''X''|''σ''}})<sup>''A''</sup> will differ from Benford's Law by less than ''ε'' > 0.<ref name="Morrow2010" /> The value of ''A'' depends on the value of ''ε'' and the distribution of the random variable. | |||

| ===Distributions known to obey Benford's law=== | |||

| Some well-known infinite ]s {{not a typo|provably}} satisfy Benford's law exactly (in the ] as more and more terms of the sequence are included). Among these are the ]s,<ref>{{cite journal | last1 = Washington | first1 = L. C. | year = 1981 | title = Benford's Law for Fibonacci and Lucas Numbers | journal = ] | volume = 19 | issue = 2| pages = 175–177| doi = 10.1080/00150517.1981.12430109 }}</ref><ref>{{cite journal | last1 = Duncan | first1 = R. L. | year = 1967 | title = An Application of Uniform Distribution to the Fibonacci Numbers | journal = ] | volume = 5 | issue = 2 | pages = 137–140| doi = 10.1080/00150517.1967.12431312 }}</ref> the ]s,<ref>{{cite journal | last1 = Sarkar | first1 = P. B. | year = 1973 | title = An Observation on the Significant Digits of Binomial Coefficients and Factorials | journal = Sankhya B | volume = 35 | pages = 363–364}}</ref> the powers of 2,<ref name=powers>In general, the sequence ''k''<sup>1</sup>, ''k''<sup>2</sup>, ''k''<sup>3</sup>, etc., satisfies Benford's law exactly, under the condition that log<sub>10</sub> ''k'' is an ]. This is a straightforward consequence of the ].</ref><ref name=":0">That the first 100 powers of 2 approximately satisfy Benford's law is mentioned by Ralph Raimi. {{cite journal | last1 = Raimi | first1 = Ralph A. | year = 1976 | title = The First Digit Problem | journal = ] | volume = 83 | issue = 7| pages = 521–538 | doi=10.2307/2319349| jstor = 2319349}}</ref> and the powers of almost any other number.<ref name=powers /> | |||
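The behaviour of the powers of 2 can be checked with exact integer arithmetic. The sketch below tabulates the first digits of 2<sup>1</sup> to 2<sup>1000</sup>; the cutoff of 1000 terms is an arbitrary choice for illustration, and a finite sample only approximates the limiting frequencies.

```python
import math

N_TERMS = 1000  # illustrative cutoff (assumption)

# counts[d] holds how many of 2**1 .. 2**N_TERMS start with digit d.
counts = [0] * 10
for k in range(1, N_TERMS + 1):
    counts[int(str(2 ** k)[0])] += 1

observed = [counts[d] / N_TERMS for d in range(1, 10)]
expected = [math.log10(1 + 1 / d) for d in range(1, 10)]
max_gap = max(abs(o - e) for o, e in zip(observed, expected))

# Columns: digit, count, observed frequency, Benford probability.
for d in range(1, 10):
    print(d, counts[d], f"{observed[d - 1]:.3f}", f"{expected[d - 1]:.3f}")
print("largest deviation:", max_gap)
```

The digit 1 leads exactly 301 of these 1000 powers: a power of 2 begins with 1 precisely when doubling adds a digit, and 2<sup>1000</sup> has 302 digits.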

| Likewise, some continuous processes satisfy Benford's law exactly (in the asymptotic limit as the process continues through time). One is an exponential growth or decay process: if a quantity is exponentially increasing or decreasing in time, then the percentage of time that it has each first digit satisfies Benford's law asymptotically (i.e. with increasing accuracy as the process continues through time). | |||

| A method of accounting fraud detection based on bootstrapping and regression has been proposed.<ref name=Suh2011>{{cite journal |last1=Suh |first1=I. S. |last2=Headrick |first2=T. C. |last3=Minaburo |first3=S. |year=2011 |title=An effective and efficient analytic technique: A bootstrap regression procedure and Benford's Law |journal=J Forensic & Investigative Accounting |volume=3 |issue=3}}</ref> | |||

| ===Distributions known to disobey Benford's law=== | |||

| If the goal is to conclude agreement with Benford's law rather than disagreement, then the ]s mentioned above are inappropriate. In this case, the specific ] should be applied. An empirical distribution is called equivalent to Benford's law if a distance (for example, the total variation distance or the usual Euclidean distance) between the probability mass functions is sufficiently small. This method of testing, with application to Benford's law, is described in Ostrovski (2017).<ref>{{cite journal|last1=Ostrovski|first1=Vladimir|date=May 2017|title=Testing equivalence of multinomial distributions|ssrn=2907258|journal=Statistics & Probability Letters|volume=124|pages=77–82|doi=10.1016/j.spl.2017.01.004}}</ref> | |||
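The two distances named above can be computed directly from first-digit counts. The sketch below is illustrative only: it computes the distances, not the equivalence test of Ostrovski (2017), whose critical values are not reproduced here. The helper names and the two sample data sets (powers of 2 and the integers 1 to 999) are assumptions chosen for the example.

```python
import math

# Benford probabilities for first digits 1..9.
BENFORD = [math.log10(1 + 1 / d) for d in range(1, 10)]


def first_digit_pmf(values):
    """Empirical first-digit probability mass function of positive integers."""
    counts = [0] * 9
    for v in values:
        counts[int(str(v)[0]) - 1] += 1
    total = sum(counts)
    return [c / total for c in counts]


def total_variation(p, q):
    """Total variation distance between two probability mass functions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def euclidean(p, q):
    """Euclidean distance between two probability mass functions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


powers = first_digit_pmf(2 ** k for k in range(1, 1001))  # close to Benford
uniform = first_digit_pmf(range(1, 1000))                 # each digit 1/9

tv_powers = total_variation(powers, BENFORD)
tv_uniform = total_variation(uniform, BENFORD)
print(round(tv_powers, 4), round(tv_uniform, 4))
print(round(euclidean(powers, BENFORD), 4), round(euclidean(uniform, BENFORD), 4))
```

How small a distance counts as "sufficiently small" depends on the tolerance chosen for the equivalence test, which this sketch leaves open.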

| The ]s and ]s of successive natural numbers do not obey this law.<ref name=Raimi1976>{{cite journal |last=Raimi |first=Ralph A. |date=Aug–Sep 1976 |title=The first digit problem |journal=] |volume=83 |issue=7 |pages=521–538 |doi=10.2307/2319349|jstor=2319349}}</ref> Prime numbers in a finite range follow a generalized Benford's law that approaches uniformity as the size of the range approaches infinity.<ref>{{Cite web|last1=Zyga|first1=Lisa|last2=Phys.org|title=New Pattern Found in Prime Numbers|url=https://phys.org/news/2009-05-pattern-prime.html|access-date=2022-01-23|website=phys.org|language=en}}</ref> Lists of local telephone numbers violate Benford's law.<ref>{{Cite journal| issn = 0003-1305| volume = 61| issue = 3| pages = 218–223| last1 = Cho| first1 = Wendy K. Tam| last2 = Gaines| first2 = Brian J.| title = Breaking the (Benford) Law: Statistical Fraud Detection in Campaign Finance| journal = The American Statistician| accessdate = 2022-03-08| date = 2007| doi = 10.1198/000313007X223496| url = https://www.jstor.org/stable/27643897| jstor = 27643897| s2cid = 7938920}}</ref> Benford's law is violated by the populations of all places with a population of at least 2500 individuals from five US states according to the 1960 and 1970 censuses, where only 19% began with digit 1 but 20% began with digit 2, because truncation at 2500 introduces statistical bias.<ref name=Raimi1976/> The terminal digits in pathology reports violate Benford's law due to rounding.<ref name=Beer2009>{{cite journal |last1=Beer |first1=Trevor W. |s2cid=206987736 |year=2009 |title=Terminal digit preference: beware of Benford's law |journal=] |volume=62 |issue=2 |page=192 |doi=10.1136/jcp.2008.061721|pmid=19181640}}</ref> | |||

| Distributions that do not span several orders of magnitude will not follow Benford's law. Examples include height, weight, and IQ scores.<ref name=Formann2010/><ref>Singleton, Tommie W. (May 1, 2011). "", ''ISACA Journal'', ]. Retrieved Nov. 9, 2020.</ref> | |||

| ==Generalization to digits beyond the first== | |||


| It is possible to extend the law to digits beyond the first.<ref name=Hill1995sigdig>], "The Significant-Digit Phenomenon", The American Mathematical Monthly, Vol. 102, No. 4, (Apr., 1995), pp. 322–327. . .</ref> In particular, the probability of encountering a number starting with the string of digits ''n'' is given by: | |||

| ===Criteria for distributions expected and not expected to obey Benford's law=== | |||

| :<math> \log_{ 10 } \left( n + 1 \right )- \log_{ 10 } \left( n \right ) = \log_{ 10 } \left( 1 + \frac{ 1 }{ n } \right)</math> | |||

| A number of criteria, applicable particularly to accounting data, have been suggested where Benford's law can be expected to apply.<ref name=Durtschi2004>{{cite journal | last1 = Durtschi | first1 = C | last2 = Hillison | first2 = W | last3 = Pacini | first3 = C | year = 2004 | title = The effective use of Benford's law to assist in detecting fraud in accounting data | journal = J Forensic Accounting | volume = 5 | pages = 17–34}}</ref> | |||

| ;Distributions that can be expected to obey Benford's law | |||

| For example, the probability that a number starts with the digits 3, 1, 4 is {{math|log<sub>10</sub>(1 + 1/314) ≈ 0.00138}}, as in the figure on the right. | |||

| * When the mean is greater than the median and the skew is positive | |||

| * Numbers that result from mathematical combination of numbers: e.g. quantity × price | |||

| * Transaction level data: e.g. disbursements, sales | |||

| ;Distributions that would not be expected to obey Benford's law | |||

| This result can be used to find the probability that a particular digit occurs at a given position within a number. For instance, the probability that a "2" is encountered as the second digit is<ref name=Hill1995sigdig /> | |||

| * Where numbers are assigned sequentially: e.g. check numbers, invoice numbers | |||

| * Where numbers are influenced by human thought: e.g. prices set by psychological thresholds ($9.99) | |||

| * Accounts with a large number of firm-specific numbers: e.g. accounts set up to record $100 refunds | |||

| * Accounts with a built-in minimum or maximum | |||

| * Distributions that do not span an order of magnitude of numbers. | |||

| ===Benford’s law compliance theorem=== | |||

| :<math> \log_{ 10 } \left( 1 + \frac{ 1 }{ 12 } \right ) + \log_{ 10 } \left( 1 + \frac{ 1 }{ 22 } \right )+ \cdots + \log_{ 10 } \left( 1 + \frac{ 1 }{ 92 } \right) \approx 0.109 </math> | |||

| Mathematically, Benford’s law applies if the distribution being tested fits the "Benford’s law compliance theorem".<ref name="dspguide"/> The derivation says that Benford's law is followed if the ] of the logarithm of the probability density function is zero for all integer values. Most notably, this is satisfied if the Fourier transform is zero (or negligible) for ''n'' ≥ 1. This is satisfied if the distribution is wide (since a wide distribution implies a narrow Fourier transform). Smith summarizes thus (p. 716): | |||

| <blockquote> | |||

| Benford's law is followed by distributions that are wide compared with unit distance along the logarithmic scale. Likewise, the law is not followed by distributions that are narrow compared with unit distance … If the distribution is wide compared with unit distance on the log axis, it means that the spread in the set of numbers being examined is much greater than ten. | |||

| </blockquote> | |||

| In short, Benford’s law requires that the numbers in the distribution being measured have a spread across at least an order of magnitude. | |||

| The probability that ''d'' (''d'' = 0, 1, ..., 9) is encountered as the ''n''-th (''n'' > 1) digit is | |||

| ==Tests with common distributions== | |||

| :<math> \sum_{ k = 10^{ n - 2 } }^{ 10^{ n - 1 } - 1 } \log_{ 10 } \left( 1 + \frac{ 1 }{ 10k + d } \right )</math> | |||

| Benford's law was empirically tested against the numbers (up to the 10th digit) generated by a number of important distributions, including the ], the ], the ], and others.<ref name=Formann2010 /> | |||

| The uniform distribution, as might be expected, does not obey Benford's law. In contrast, the ] of ] is well-described by Benford's law. | |||

| The distribution of the ''n''-th digit, as ''n'' increases, rapidly approaches a uniform distribution with 10% for each of the ten digits, as shown below.<ref name=Hill1995sigdig /> Four digits is often enough to assume a uniform distribution of 10% as '0' appears 10.0176% of the time in the fourth digit while '9' appears 9.9824% of the time. | |||

| {| class="wikitable" | |||

| !Digit | |||

| !0 | |||

| !1 | |||

| !2 | |||

| !3 | |||

| !4 | |||

| !5 | |||

| !6 | |||

| !7 | |||

| !8 | |||

| !9 | |||

| |- | |||

| !1st | |||

| | {{N/A}} | |||

| |30.1% | |||

| |17.6% | |||

| |12.5% | |||

| |9.7% | |||

| |7.9% | |||

| |6.7% | |||

| |5.8% | |||

| |5.1% | |||

| |4.6% | |||

| |- | |||

| !2nd | |||

| |12% | |||

| |11.4% | |||

| |10.9% | |||

| |10.4% | |||

| |10% | |||

| |9.7% | |||

| |9.3% | |||

| |9% | |||

| |8.8% | |||

| |8.5% | |||

| |- | |||

| !3rd | |||

| |10.2% | |||

| |10.1% | |||

| |10.1% | |||

| |10.1% | |||

| |10% | |||

| |10% | |||

| |9.9% | |||

| |9.9% | |||

| |9.9% | |||

| |9.8% | |||

| |} | |||
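The entries of the table can be recomputed from the summation formula for the ''n''-th digit. The following is a minimal sketch; the function name <code>nth_digit_probability</code> is an assumption made for illustration, and position 1 is handled separately because the leading digit cannot be 0.

```python
import math


def nth_digit_probability(d: int, n: int) -> float:
    """Probability that digit d occupies position n (n = 1 is the leading digit)."""
    if n == 1:
        # A leading digit is never 0; otherwise use the first-digit law.
        return math.log10(1 + 1 / d) if d >= 1 else 0.0
    lo, hi = 10 ** (n - 2), 10 ** (n - 1)
    # Sum over every possible prefix k of length n - 1.
    return sum(math.log10(1 + 1 / (10 * k + d)) for k in range(lo, hi))


for n in (1, 2, 3, 4):
    row = [round(100 * nth_digit_probability(d, n), 1) for d in range(10)]
    print(n, row)
```

Each row sums to 1 because the terms telescope over the full range of prefixes, which gives a quick consistency check on any implementation.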

| Neither the normal distribution nor the ratio distribution of two normal distributions (the ]) obeys Benford's law. Although the ] does not obey Benford's law, the ratio distribution of two half-normal distributions does. Neither the right-truncated normal distribution nor the ratio distribution of two right-truncated normal distributions is well described by Benford's law. This is not surprising, as this distribution is weighted towards larger numbers. | |||

| ==Tests with common distributions== | |||

| Benford's law was empirically tested against the numbers (up to the 10th digit) generated by a number of important distributions, including the ], the ], the ], the ], the ], the ] and the ].<ref name=Formann2010 /> In addition to these, the ] of two uniform distributions, the ratio distribution of two exponential distributions, the ratio distribution of two half-normal distributions, the ratio distribution of two right-truncated normal distributions, the ratio distribution of two chi-square distributions (the ]) and the ] distribution were tested. | |||

| Benford's law also describes the exponential distribution and the ratio distribution of two exponential distributions well. The fit of the chi-squared distribution depends on the ] (df), with good agreement for df = 1 and decreasing agreement as the df increases. The ''F''-distribution is fitted well for low degrees of freedom. As the df increases, the fit worsens, but much more slowly than for the chi-squared distribution. The fit of the log-normal distribution depends on the ] and the ] of the distribution. The variance has a much greater effect on the fit than does the mean. Larger values of both parameters result in better agreement with the law. The ratio of two log-normal distributions is itself log-normal, so this distribution was not examined. | |||
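A comparison of this kind can be approximated by simulation. The sketch below is not the procedure of the cited study: the sample size, the random seed, the rate parameter 1.0 and the unit interval for the uniform distribution are all assumptions chosen for illustration. It draws samples from an exponential and a uniform distribution and tabulates their first significant digits.

```python
import math
import random
from collections import Counter


def first_digit(x: float) -> int:
    """First significant digit of a nonzero number, read from scientific notation."""
    return int(f"{abs(x):.15e}"[0])


def digit_frequencies(samples):
    """Relative frequency of first digits 1..9, ignoring exact zeros."""
    digits = [first_digit(x) for x in samples if x != 0]
    counts = Counter(digits)
    return {d: counts[d] / len(digits) for d in range(1, 10)}


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 100_000
exponential = digit_frequencies(rng.expovariate(1.0) for _ in range(n))
uniform = digit_frequencies(rng.uniform(0.0, 1.0) for _ in range(n))

for d in range(1, 10):
    # Columns: digit, exponential sample, uniform sample, Benford proportion.
    print(d, round(exponential[d], 3), round(uniform[d], 3),
          round(math.log10(1 + 1 / d), 3))
```

In such a run the exponential sample should track the Benford proportions only approximately, while the uniform sample on the unit interval gives each first digit a frequency near 1/9.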

| Other distributions that have been examined include the |

Other distributions that have been examined include the Muth distribution, ], ], ], ] and the ], all of which show reasonable agreement with the law.<ref name=Leemis2000 /><ref name="Dümbgen2008">{{cite journal|last1=Dümbgen|first1=L|last2=Leuenberger|first2=C|s2cid =2596996|year=2008|title=Explicit bounds for the approximation error in Benford's Law|journal =Electronic Communications in Probability|volume=13|pages=99–112|doi=10.1214/ECP.v13-1358 |arxiv=0705.4488}}</ref> The ] (whose density increases with increasing value of the random variable) does not show agreement with this law.<ref name="Dümbgen2008"/> | ||

| ==Generalization to digits beyond the first== | |||

| ==Distributions known to obey Benford's law== | |||


| Some well-known infinite ]s {{not a typo|provably}} satisfy Benford's Law exactly (in the ] as more and more terms of the sequence are included). Among these are the ]s,<ref>{{cite journal | last1 = Washington | first1 = L. C. | year = 1981 | title = Benford's Law for Fibonacci and Lucas Numbers | url = | journal = ] | volume = 19 | issue = 2| pages = 175–177 }}</ref><ref>{{cite journal | last1 = Duncan | first1 = R. L. | year = 1967 | title = An Application of Uniform Distribution to the Fibonacci Numbers | url = | journal = ] | volume = 5 | issue = | pages = 137–140 }}</ref> the ]s,<ref>{{cite journal | last1 = Sarkar | first1 = P. B. | year = 1973 | title = An Observation on the Significant Digits of Binomial Coefficients and Factorials | url = | journal = ] B | volume = 35 | issue = | pages = 363–364 }}</ref> the powers of 2,<ref name=powers>In general, the sequence ''k''<sup>1</sup>, ''k''<sup>2</sup>, ''k''<sup>3</sup>, etc., satisfies Benford's Law exactly, under the condition that log<sub>10</sub> ''k'' is an ]. This is a straightforward consequence of the ].</ref><ref>That the first 100 powers of 2 approximately satisfy Benford's Law is mentioned by Ralph Raimi. {{cite journal | last1 = Raimi | first1 = Ralph A. | year = 1976 | title = The First Digit Problem | url = | journal = ] | volume = 83 | issue = 7| pages = 521–538 | doi=10.2307/2319349}}</ref> and the powers of ''almost'' any other number.<ref name=powers /> | |||

| It is possible to extend the law to digits beyond the first.<ref name=Hill1995sigdig>{{cite journal | last1 = Hill | first1 = Theodore P. | author-link = Theodore P. Hill | year = 1995 | title = The Significant-Digit Phenomenon | url = http://digitalcommons.calpoly.edu/cgi/viewcontent.cgi?article=1041&context=rgp_rsr | journal = The American Mathematical Monthly | volume = 102 | issue = 4| pages = 322–327 | jstor = 2974952 | doi = 10.1080/00029890.1995.11990578}}</ref> In particular, for any given number of digits, the probability of encountering a number starting with the string of digits ''n'' of that length{{snd}} discarding leading zeros{{snd}} is given by | |||

| : <math>\log_{10}(n + 1) - \log_{10}(n) = \log_{10}\left(1 + \frac{1}{n}\right).</math> | |||
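The formula above can be evaluated directly. The following is a minimal sketch; the function name <code>leading_string_probability</code> is an assumption made for illustration.

```python
import math


def leading_string_probability(n: int) -> float:
    """Probability that a number's significant digits begin with the digits of n."""
    if n < 1:
        raise ValueError("n must be a positive integer (no leading zeros)")
    return math.log10(1 + 1 / n)


# Probability that a number starts with the digits 3, 1, 4.
p_314 = leading_string_probability(314)
print(round(p_314, 5))  # 0.00138

# Over all strings of one fixed length the probabilities sum to 1.
total_three_digit = sum(leading_string_probability(n) for n in range(100, 1000))
```

The sum over all strings of a fixed length telescopes to log<sub>10</sub> 10 = 1, so the values form a probability distribution for each length.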